Systematic co-variation of monophthongs across speakers of New Zealand English

Supplementary materials: Analysis script

James Brand1, Jen Hay1,2, Lynn Clark1,2, Kevin Watson1,2 & Márton Sóskuthy3

1New Zealand Institute for Language, Brain and Behaviour, Univeristy of Canterbury, NZ

2Department of Linguistics, Univeristy of Canterbury, NZ

3Department of Linguistics, The University of British Columbia, CA

Corresponding author: James Brand

Email: james.brand@canterbury.ac.nz

Website: https://jamesbrandscience.github.io

13 July, 2021

Document outline

This document provides the code used in the analyses of the Brand, Hay, Clark, Watson and Sóskuthy (2020) manuscript, submitted to the Journal of Phonetics. It contains the various analysis steps reported in the paper, as well as additional analyses that the authors completed but were not considered central to the manuscript’s core research questions, they are included here in case readers are interested.

Whilst every attempt has been made to make the code transparent, clear and comprehensible to all readers, regardless of your proficiency with using R or the statistical procedures applied in the analyses, if there are questions/queries/issues that do arise, please do contact the corresponding author (contact details at the top of the document).

Note that in the project repository, large and computationally expensive processes, such as the GAMM modelling, have been pre-run and important data stored in the Data folder. This has been done to ensure the compilation of this file is achieved relatively quickly and can be hosted online (i.e. via GitHub and OSF), in addition to allowing others to have quick access to all the required data. These steps are included in this file and can be run on your own computer to reproduce all the original files. When pre-run steps have been carried out, they are identifiable in the .Rmd file by the chunks having an eval=FALSE argument. If you are running these chunks, please ensure you have sufficient memory avialable (I require 13.18GB to store the Analysis folder, when all models are saved).

cat(paste0("Start time:\n", format(Sys.time(), "%d %B %Y, %r")))## Start time:

## 13 July 2021, 05:23:11 pmstart_time <- Sys.time()Analysis steps

The document covers a number of steps that we completed, all of which can be reproduced by using the code and data in the project repository (https://github.com/nzilbb/Covariation_monophthongs_NZE). In order to orientate the reader, we provide a brief written outline of what the steps are.

Load in the data and provide summaries of the how it is structured.

Apply a new normalisation procedure (Lobanov 2.0) to the formant measurements.

Run a series of GAMMs that model the normalised values (per formant and per vowel), with fixed effects of speaker year of birth, gender and speech rate. These models will then be used to extract the by-speaker random intercepts, which we use as estimates of how innovative a speaker’s realisations of each vowel are in terms of F1/F2, whilst keeping the fixed effects constant.

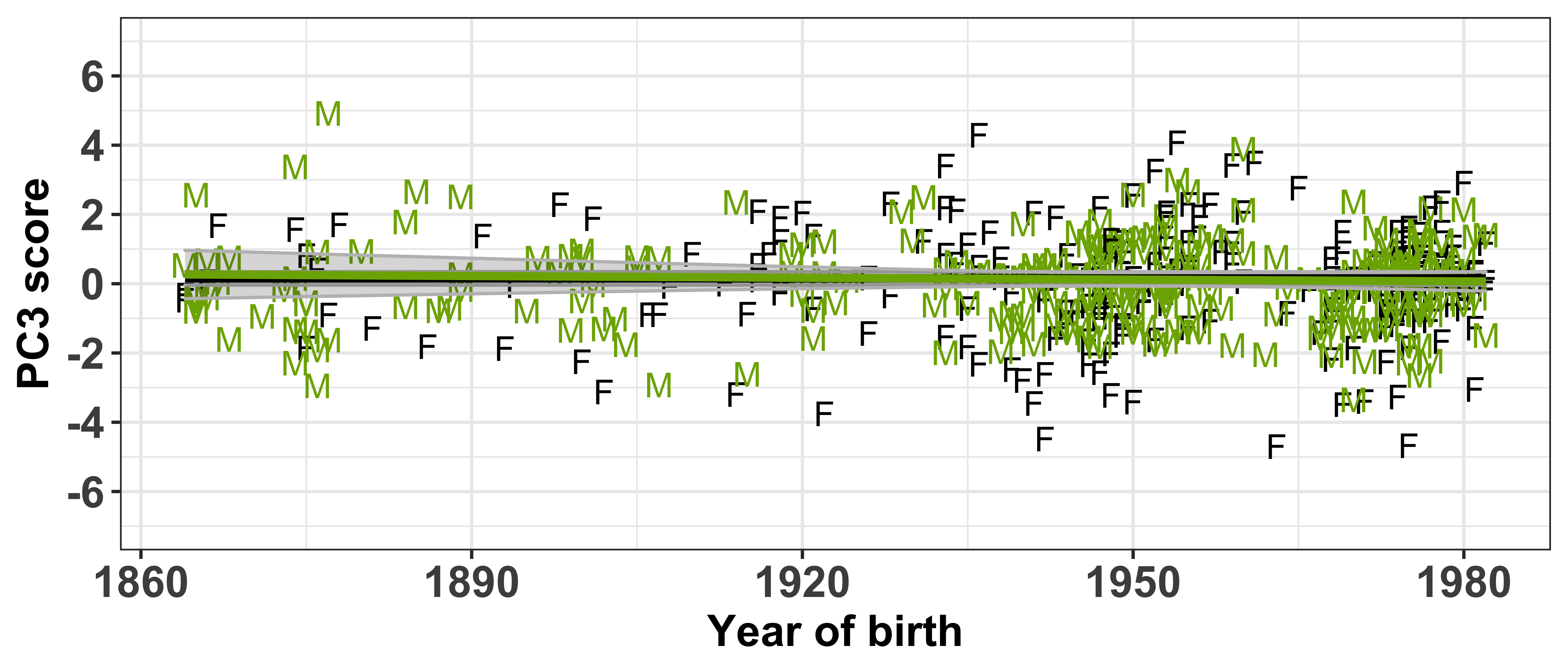

Run a principal components analysis (PCA) on the speaker intercepts data. Then inspect the eigen values of each of the principal components (PCs), this will allow us to determine which PCs account for sufficient variance to be meaningfully interpreted.

Extract the PCA scores from the PCs, which give each individual speaker a value for each PC, the more extreme (i.e. high or low) this value, the more the speaker contributes to the PC’s formation. This will allow us to identify which speakers are representative of the PCs.

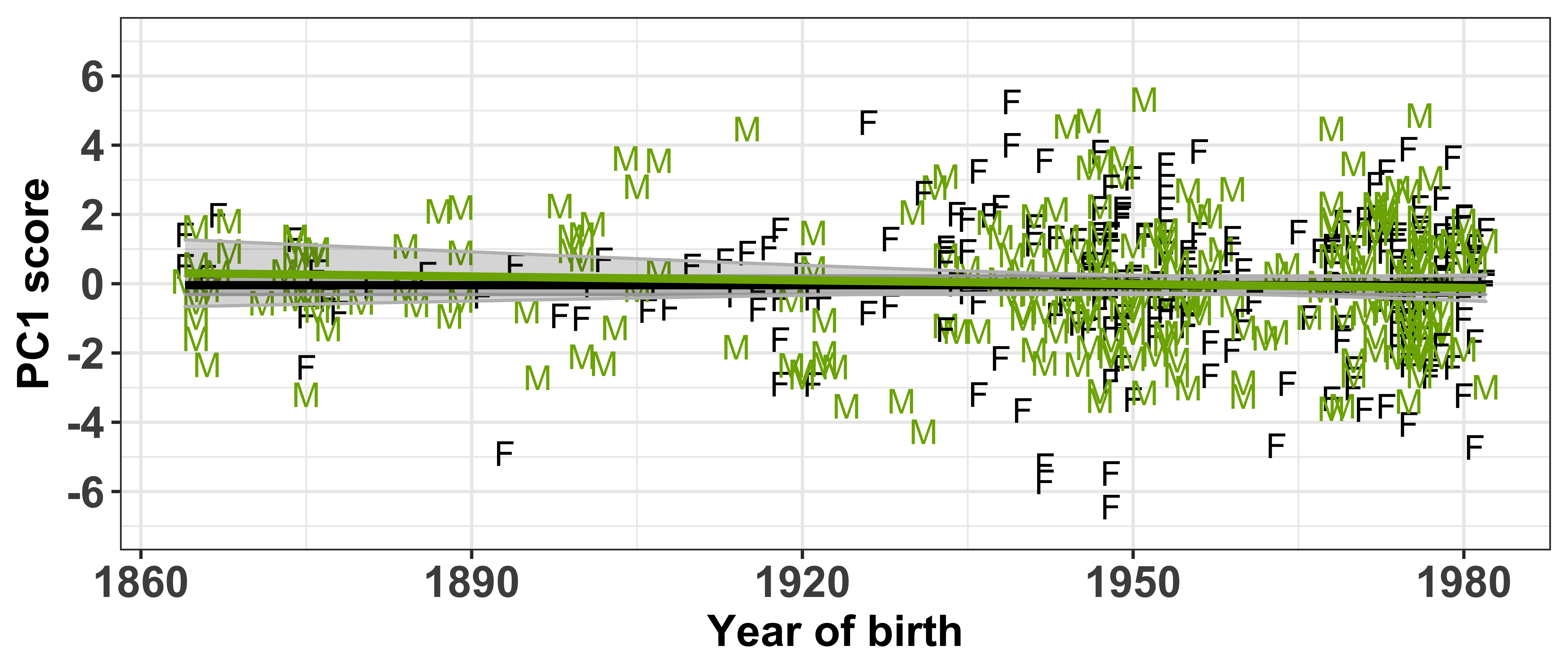

Assess if any of the PCs can be explained by the fixed-effects from the GAMM fitting procedure, i.e. is there a relationship between the PCA scores and factors such as year of birth or gender. We will provide examples of speaker vowel spaces to assist in the interpreation of the PCs in terms of F1/F2 space (the Shiny app allows for exploration of all speakers, so we recommend that as the optimal tool for understanding speaker variation https://onze.shinyapps.io/Covariation_shiny/).

Following on from the previous inspection of the variables, our interpretation for how they co-vary together within a more theoretical framework (as explained in the paper), was driven by our understanding of the directions of change in F1/F2. To demonstrate this we will run additional GAMMs predicting F1/F2 by the PCA scores. Then visualise how these changes map onto change in New Zealand English.

Pre-requisites

Purpose: Install libraries and load data

In order for the code in this document to work, the following packages are required to be installed and loaded into your R session. If you do not have any of the packages installed, you can run install.packages("PACKAGE NAME") which should resolve any warning messages you might get (change “PACKAGE NAME” to the required package name, e.g. install.packages("tidyverse")).

A large portion of the code in this document is written in a tidy way, this means that it (tries to) always use the tidyverse functions when possible, if you are new to using R or are more familiar with R’s base packages, see http://tidyverse.tidyverse.org/ for a full reference guide.

Similarly, if there are any functions that you are not familiar with/would like more information on (or the arguments to those functions), simply press F1 whilst your cursor is clicked anywhere on the name of the function, this will bring up the help page in RStudio (note this will only work if you are using the .rmd version of this file and not the .html).

For more general information on R Markdown documents and how they work see https://rmarkdown.rstudio.com/index.html

##Libraries

The following libraries are required for the document to be run.

library(lme4) #mixed-effects models

library(rms) #fitting restricted cubic splines

library(cowplot) #plotting functions

library(tidyverse) #lots of things

library(kableExtra) #outputting nice tables

library(factoextra) #pca related things

library(ggrepel) #more plotting things

library(gganimate) #animation plotting

library(lmerTest) #p values from lmer models

library(DT) #interactive data tables

library(mgcv) #gamms

library(itsadug) #additional gamm things

library(scales) #rescale functions

library(gifski) #needed to generate gif

library(circlize) #chord diagram

library(PerformanceAnalytics) #correlation figure

#this is important for reproduction of any stochastic computations

set.seed(123)

#check information about R session, this will give details of the R setup on the authors computer at the time of running. If any of the outputs are not reproduced as in the html file produced from this markdown document (see repository), there may be differences in the package versions or setup on your computer. You can update packages by running utils::update.packages()

sessionInfo()## R version 4.0.3 (2020-10-10)

## Platform: x86_64-apple-darwin17.0 (64-bit)

## Running under: macOS Big Sur 10.16

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.0/Resources/lib/libRblas.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.0/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_NZ.UTF-8/en_NZ.UTF-8/en_NZ.UTF-8/C/en_NZ.UTF-8/en_NZ.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] PerformanceAnalytics_2.0.4 xts_0.12.1

## [3] zoo_1.8-9 circlize_0.4.13

## [5] gifski_1.4.3-1 scales_1.1.1

## [7] itsadug_2.4 plotfunctions_1.4

## [9] mgcv_1.8-36 nlme_3.1-152

## [11] DT_0.18 lmerTest_3.1-3

## [13] gganimate_1.0.7 ggrepel_0.9.1

## [15] factoextra_1.0.7 kableExtra_1.3.4

## [17] forcats_0.5.1 stringr_1.4.0

## [19] dplyr_1.0.7 purrr_0.3.4

## [21] readr_1.4.0 tidyr_1.1.3

## [23] tibble_3.1.2 tidyverse_1.3.1

## [25] cowplot_1.1.1 rms_6.2-0

## [27] SparseM_1.81 Hmisc_4.5-0

## [29] ggplot2_3.3.5 Formula_1.2-4

## [31] survival_3.2-11 lattice_0.20-44

## [33] lme4_1.1-27.1 Matrix_1.3-4

##

## loaded via a namespace (and not attached):

## [1] TH.data_1.0-10 minqa_1.2.4 colorspace_2.0-2

## [4] ellipsis_0.3.2 htmlTable_2.2.1 GlobalOptions_0.1.2

## [7] base64enc_0.1-3 fs_1.5.0 rstudioapi_0.13

## [10] farver_2.1.0 MatrixModels_0.5-0 fansi_0.5.0

## [13] mvtnorm_1.1-2 lubridate_1.7.10 xml2_1.3.2

## [16] codetools_0.2-18 splines_4.0.3 knitr_1.33

## [19] jsonlite_1.7.2 nloptr_1.2.2.2 broom_0.7.8

## [22] cluster_2.1.2 dbplyr_2.1.1 png_0.1-7

## [25] compiler_4.0.3 httr_1.4.2 backports_1.2.1

## [28] assertthat_0.2.1 cli_3.0.0 tweenr_1.0.2

## [31] htmltools_0.5.1.1 quantreg_5.86 prettyunits_1.1.1

## [34] tools_4.0.3 gtable_0.3.0 glue_1.4.2

## [37] Rcpp_1.0.6 cellranger_1.1.0 jquerylib_0.1.4

## [40] vctrs_0.3.8 svglite_2.0.0 conquer_1.0.2

## [43] xfun_0.24 rvest_1.0.0 lifecycle_1.0.0

## [46] polspline_1.1.19 MASS_7.3-54 hms_1.1.0

## [49] sandwich_3.0-1 RColorBrewer_1.1-2 yaml_2.2.1

## [52] gridExtra_2.3 sass_0.4.0 rpart_4.1-15

## [55] latticeExtra_0.6-29 stringi_1.6.2 checkmate_2.0.0

## [58] boot_1.3-28 shape_1.4.6 rlang_0.4.11

## [61] pkgconfig_2.0.3 systemfonts_1.0.2 matrixStats_0.59.0

## [64] evaluate_0.14 htmlwidgets_1.5.3 tidyselect_1.1.1

## [67] magrittr_2.0.1 R6_2.5.0 generics_0.1.0

## [70] multcomp_1.4-17 DBI_1.1.1 pillar_1.6.1

## [73] haven_2.4.1 foreign_0.8-81 withr_2.4.2

## [76] nnet_7.3-16 modelr_0.1.8 crayon_1.4.1

## [79] utf8_1.2.1 rmarkdown_2.9 jpeg_0.1-8.1

## [82] progress_1.2.2 grid_4.0.3 readxl_1.3.1

## [85] data.table_1.14.0 reprex_2.0.0 digest_0.6.27

## [88] webshot_0.5.2 numDeriv_2016.8-1.1 munsell_0.5.0

## [91] viridisLite_0.4.0 bslib_0.2.5.1 quadprog_1.5-8Data

Purpose: Understand the structure of the dataset

All data has been made available to reproduce the results, the data file should be located in a folder called data within the folder/directory this file is saved in. We will store the data as an R data frame called vowels_all. Note the data is saved as a .rds file, this is essentially the same as a normal .csv file, but is more efficient when working in R. If you wish to reuse the data in a different format, it is recommended that you load in the data and then export it to your preferred format, e.g. using the write.csv() function for .csv files.

#load in the data

vowels_all <- readRDS("Data/ONZE_vowels_filtered_anon.rds")We can inspect the data in different ways, to ensure that the correct file has been loaded and for general understanding of how the data is structured.

Variables

Let’s inspect the variables…

We should have 10 variables.

Definitions of each variable are given below (factors are represented as fct with the number of unique levels also provided e.g. fct, 2 represents a factor with 2 unique values, numeric variables are represented as num, with the smallest and largest values provided, e.g. num, (1857-1988)):

| Variable | Description | Class |

|---|---|---|

| Speaker | The speaker ID (format: corpus_gender_distinctnumber, e.g. IA_f_001 | fct (481) |

| Transcript | The transcript number of the speaker, e.g. IA_f_001-01.trs | fct (2523) |

| Corpus | The sub-corpus the data comes from, i.e. either MU, IA, Darfield or CC | fct (4) |

| Gender | The gender of the speaker, i.e. either F for female or M for male | fct (2) |

| participant_year_of_birth | The year the participant was born in e.g. 1864 | num (1864-1982) |

| Word | The word form of the token, this is anonymised (format: word_distinctnumber, e.g. word_00002 | fct (14632) |

| Vowel | The vowel of the token, using Well’s notation, e.g. FLEECE | fct (10) |

| F1_50 | The raw F1 of the vowel in Hz, taken at the mid-point, e.g. 500 | num (58-999) |

| F2_50 | The raw F2 of the vowel in Hz, taken at the mid-point, e.g. 1500 | num (320-3453) |

| Speech_rate | The speech rate in syllbales per second for the transcript, e.g. 1.7929 | num (0.1365-15.2451) |

Next, we can generate some summary information about the dataset.

Token counts

There are 10 different vowels in the data, a summary of the number of tokens per vowel is given below.

Originally, we extracted 12 vowels, comprising the 10 summarised below, but also SCHWA and FOOT, these were removed during the data cleaning stage, SCHWA was removed as we are only analysing stressed tokens and the number of speakers with low N tokens for FOOT would have led to large loss in the number of speakers in the data.

| Vowel | N Tokens | % |

|---|---|---|

| NURSE | 16891 | 4.1 |

| START | 21217 | 5.1 |

| GOOSE | 26432 | 6.4 |

| THOUGHT | 28201 | 6.8 |

| TRAP | 32284 | 7.8 |

| LOT | 35228 | 8.5 |

| FLEECE | 49757 | 12.0 |

| STRUT | 50907 | 12.3 |

| DRESS | 69925 | 16.9 |

| KIT | 83837 | 20.2 |

| Total | 414679 | 100.0 |

Sub-corpora

The ONZE dataset comprises four different sub-corpora:

MU - Mobile Unit

IA - Intermediate Archive

Darfield - Canterbury Regional Survey

CC - Canterbury Corpus

Below is a summary of the demographic information for each of the sub-corpora.

| Corpus | N Tokens | % | N Speakers | Female | Male | Year of Birth Range |

|---|---|---|---|---|---|---|

| MU | 59059 | 14.2 | 54 | 13 | 41 | 1864 - 1904 |

| IA | 97620 | 23.5 | 54 | 29 | 25 | 1891 - 1963 |

| Darfield | 57527 | 13.9 | 25 | 14 | 11 | 1918 - 1980 |

| CC | 200473 | 48.3 | 348 | 169 | 179 | 1926 - 1982 |

Speakers

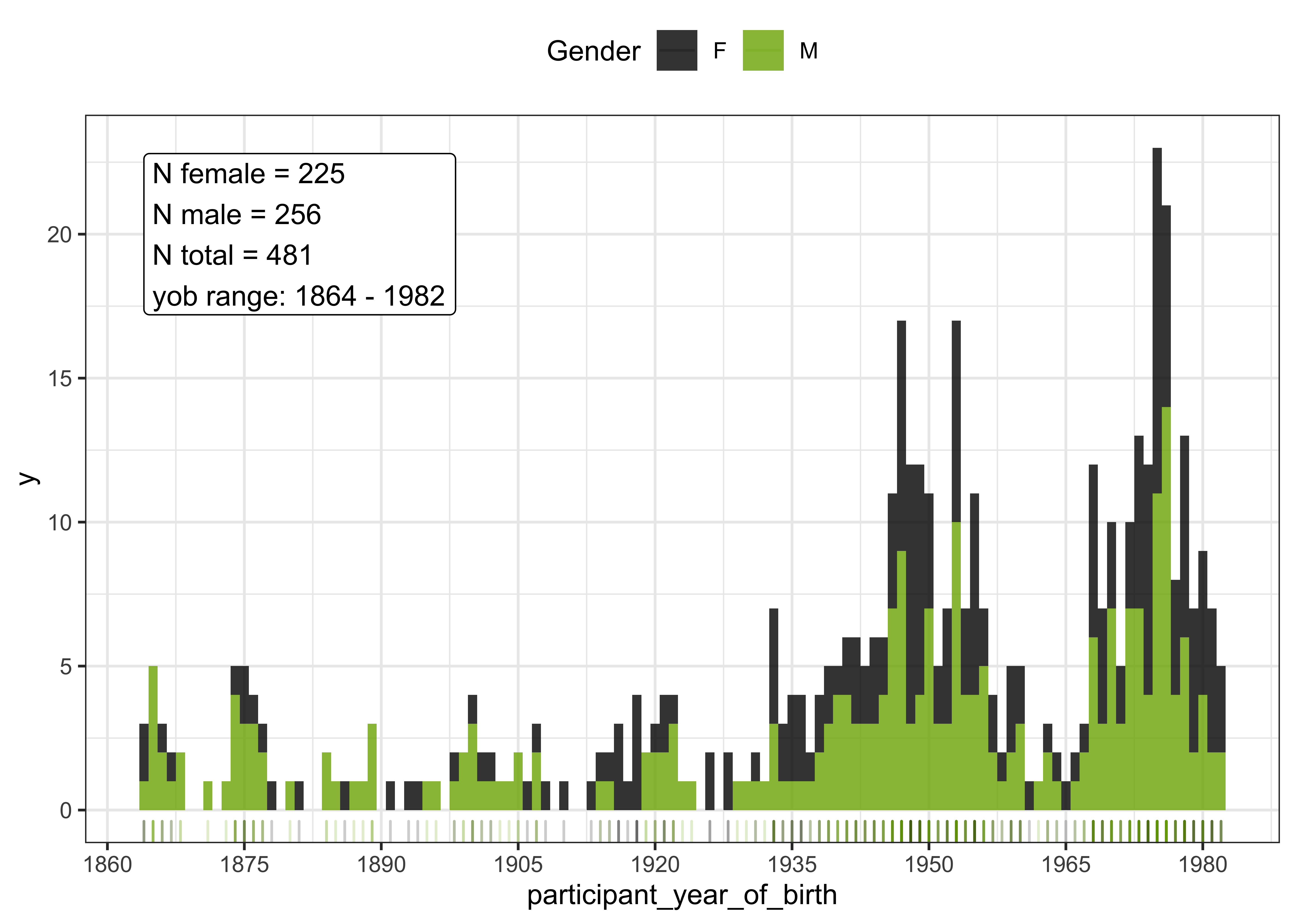

The distribution of speakers by gender is given in the histogram below.

#histogram of the speakers by year of birth and gender

vowels_all %>%

select(Speaker, Gender, participant_year_of_birth) %>%

distinct() %>%

ggplot(aes(x = participant_year_of_birth, fill = Gender, colour = Gender)) +

geom_histogram(aes(position="identity"),

binwidth=1,

alpha = 0.8, colour = NA) +

geom_rug(alpha = 0.2) +

scale_x_continuous(breaks = seq(1860, 1990, 15)) +

scale_fill_manual(values = c("black", "#7CAE00")) +

scale_color_manual(values = c("black", "#7CAE00")) +

geom_label(data = vowels_all %>% filter(participant_year_of_birth > 1863 & participant_year_of_birth < 1983) %>% select(Speaker, Gender, participant_year_of_birth) %>% distinct() %>% group_by(Gender) %>% summarise(n = n()), aes(x = 1864, y = 20, label = paste0("N female = ", n[1], "\nN male = ", n[2], "\nN total = ", sum(n), "\nyob range: ", min(vowels_all$participant_year_of_birth), " - ", max(vowels_all$participant_year_of_birth))), hjust=0, inherit.aes = FALSE) +

theme_bw() +

theme(legend.position = "top")

Below we provide summary information about each of the speakers token counts per vowel. This table comprises all speakers in the dataset and can be ordered and searched like a spreadsheet.

Normalisation

We will now normalise the raw F1 and F2 values.

Here, we introduce an adapted version of the Lobanov (1971) normalisation method, which we refer to as Lobanov 2.0. Explanations of the formula for each of the methods (Lobanov and Lobanov 2.0) are given below. Please refer to the paper for reasons why this adapted version was preferred to Lobanov’s original normalisation method.

Lobanov formula:

\[ \begin{equation} F_{lobanov_i} = \frac{(F_{raw_i}-\mu_{raw_i})}{\sigma_{raw_i}} \end{equation} \]

- \(i\) = either F1 or F2

- \(F_{lobanov_i}\) = the normalised value in \(i\)

- \(F_{raw_i}\) = the raw formant measurement value in \(i\)

- \(\mu_{raw_i}\) = the mean formant value calculated across all raw values in \(i\)

- \(\sigma_{raw_i}\) = the standard deviation calculated across all raw values in \(i\)

In plain English, the formula subtracts the mean formant value of a speaker from the raw individual formant value, then divides that by the standard deviation of the formant values.

e.g. if a speaker has a raw F1 of 400hz, a mean F1 of 500hz and a standard deviation of 70hz, this would give a Lobanov normalised value of (400-500)/70 = -1.43.

Lobanov 2.0 formula:

\[ \begin{equation} F_{lobanov2.0_i} = \frac{(F_{raw_i}-\mu_{(\mu_{vowel_1},\cdots,\mu_{vowel_n})})}{\sigma_{(\mu_{vowel_1},\cdots,\mu_{vowel_n})}} \end{equation} \]

- \(i\) = either F1 or F2

- \(F_{lobanov2.0_i}\) = the normalised value in \(i\)

- \(F_{raw_i}\) = the raw formant measurement value in \(i\)

- \(\mu_{(\mu_{vowel_1},\cdots,\mu_{vowel_n})}\) = the mean taken from the mean formant value calculated per vowel in \(i\)

- \(\sigma_{(\mu_{vowel_1},\cdots,\mu_{vowel_n})}\) = the standard deviation taken from the mean formant value calculated per vowel in \(i\)

In plain English, the formula subtracts the mean of means formant value of a speaker (calculated as the mean of means, where a mean for each vowel is calculated, then the mean taken of those means) from the raw individual formant value, then divides that by the standard deviation of the mean of mean values.

e.g. if a speaker has a raw F1 of 400hz, a mean of means F1 of 550hz and a standard deviation (for the mean of means) of 70hz, this would give a Lobanov 2.0 normalised value of (400-550)/70 = -2.14.

Implementation

The primary difference between the formula for this adapted version and Lobanov’s original formula, is that each of the vowels has a mean formant value calculated, then a mean of those means is taken as the mean in the formula. The motivation for doing this is that the data we are normalising contains speakers with varying numbers of tokens across the different vowels. Lobanov’s method is suited (and designed) based on balanced data, where an equal number of tokens per vowel are normalised.

When normalising with unbalanced numbers of tokens per vowel, the calculation of \(\mu_{raw_i}\) (the mean of all the raw formant values), can be skewed by tokens that have a much larger count in a certain vowel.

Therefore, we first calculate means for each of the individual vowels (per speaker, per formant), then calculate the mean based on those means. This approach allows for tokens in vowel categories to be weighted equally regardless of how many tokens there are, making the normalisation more reliable for this type of dataset.

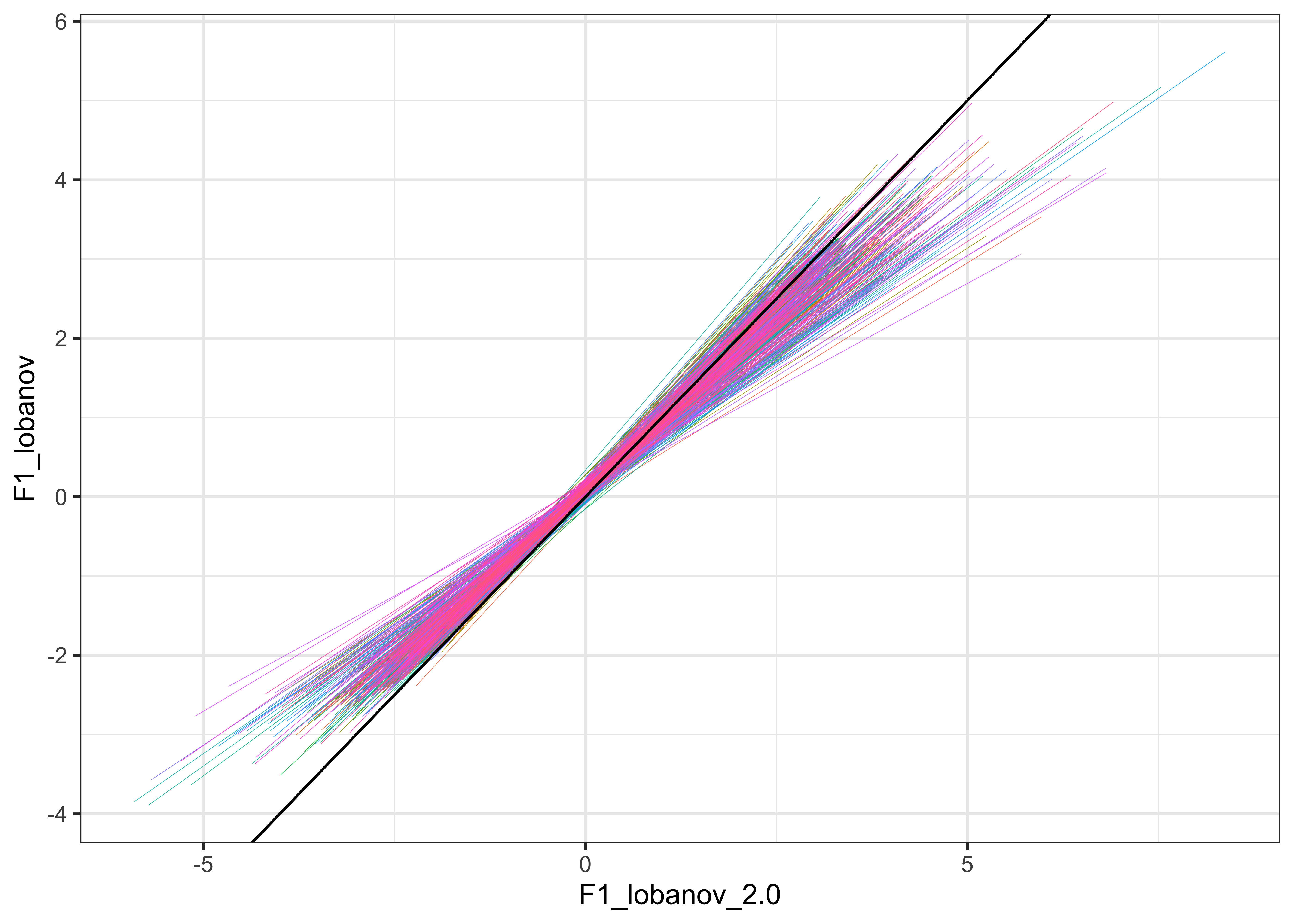

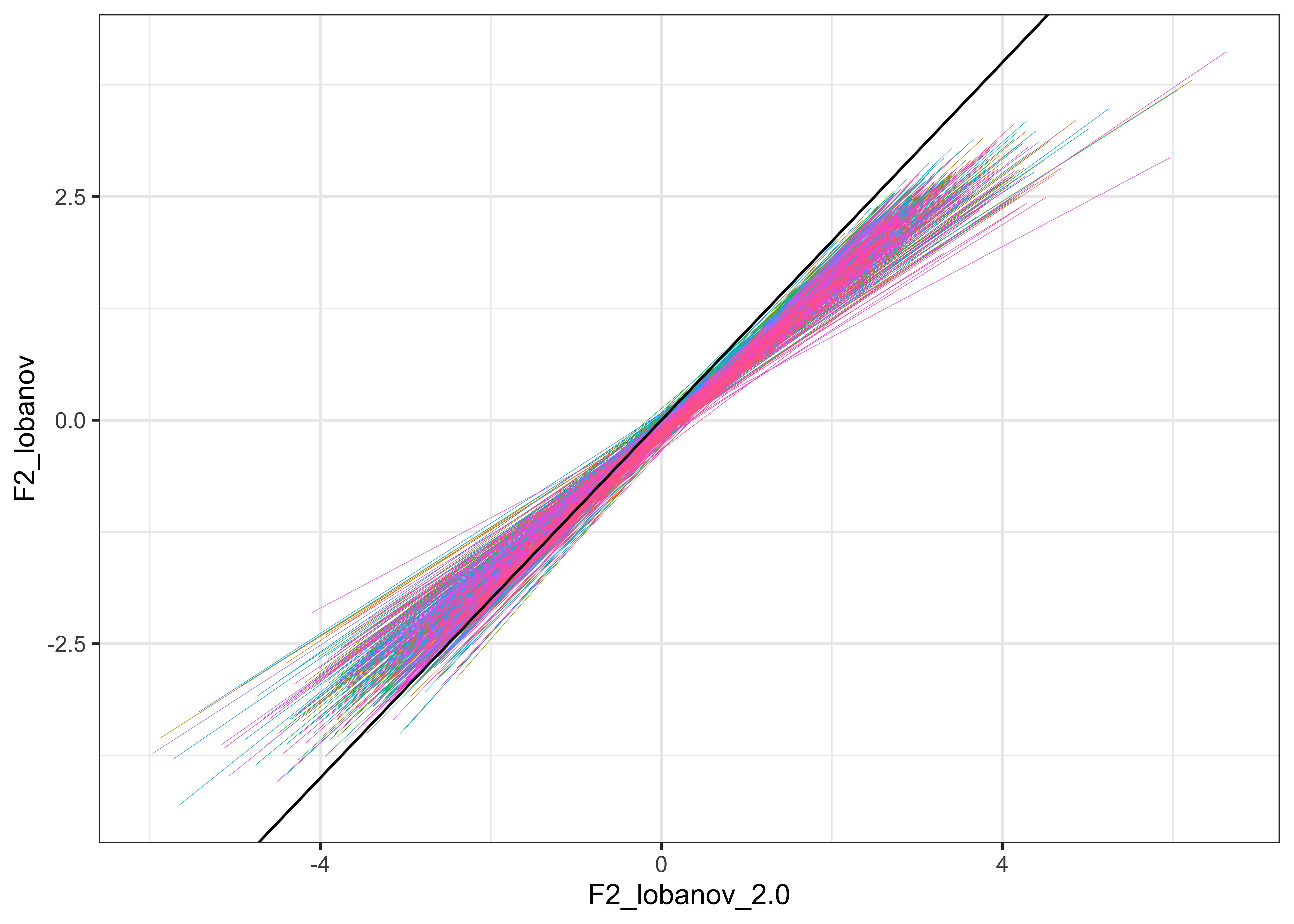

For visualisation purposes, we plot the normalised values for F1 and F2 against each other in the plots below (Lobanov 2.0 is on the x axis and Lobanov on the y axis, with coloured lines representing each speaker, the black line represents where the values would be if they were equal, i.e. if Lobanov 2.0 = Lobanov)

#standard Lobanov normalisation - calculate means across all vowels per speaker

summary_vowels_all_lobanov <- vowels_all %>%

group_by(Speaker) %>%

dplyr::summarise(mean_F1_lobanov = mean(F1_50),

mean_F2_lobanov = mean(F2_50),

sd_F1_lobanov = sd(F1_50),

sd_F2_lobanov = sd(F2_50),

token_count = n())

#Lobanov 2.0 - calculate means per vowel and per speaker

summary_vowels_all <- vowels_all %>%

group_by(Speaker, Vowel) %>%

dplyr::summarise(mean_F1 = mean(F1_50),

mean_F2 = mean(F2_50),

sd_F1 = sd(F1_50),

sd_F2 = sd(F2_50),

token_count_vowel = n())

#get the mean_of_means and sd_of_means from the the speaker_summaries, this will give each speaker a mean caculated from the means across all vowels, as well as the standard deviation of the means

summary_mean_of_means <- summary_vowels_all %>%

group_by(Speaker) %>%

dplyr::summarise(mean_of_means_F1 = mean(mean_F1),

mean_of_means_F2 = mean(mean_F2),

sd_of_means_F1 = sd(mean_F1),

sd_of_means_F2 = sd(mean_F2)

)

#combine these values with the full raw dataset, then use these values to normalise the data with both the Lobanov and the Lobanov 2.0 method

vowels_all <- vowels_all %>%

#add in the data

left_join(., summary_mean_of_means) %>%

left_join(., summary_vowels_all[, c("Speaker", "Vowel", "token_count_vowel")]) %>%

left_join(., summary_vowels_all_lobanov) %>%

#normalise the raw F1 and F2 values with Lobanov

mutate(F1_lobanov = (F1_50 - mean_F1_lobanov)/sd_F1_lobanov,

F2_lobanov = (F2_50 - mean_F2_lobanov)/sd_F2_lobanov,

#normalise with Lobanov 2.0

F1_lobanov_2.0 = (F1_50 - mean_of_means_F1)/sd_of_means_F1,

F2_lobanov_2.0 = (F2_50 - mean_of_means_F2)/sd_of_means_F2) %>%

#remove the variables that are not required

dplyr::select(-(mean_of_means_F1:sd_of_means_F2), -(mean_F1_lobanov:sd_F2_lobanov))

#remove the previous summary data frames

rm(summary_vowels_all_lobanov, summary_vowels_all, summary_mean_of_means)

#inspect the relationship between the two normalised values

vowels_all %>%

ggplot(aes(x = F1_lobanov_2.0, y = F1_lobanov, colour = Speaker)) +

geom_smooth(method = "lm", size = 0.1, show.legend = FALSE) +

geom_abline(slope=1, intercept=0) +

theme_bw()

vowels_all %>%

ggplot(aes(x = F2_lobanov_2.0, y = F2_lobanov, colour = Speaker)) +

geom_smooth(method = "lm", size = 0.1, show.legend = FALSE) +

geom_abline(slope=1, intercept=0) +

theme_bw()

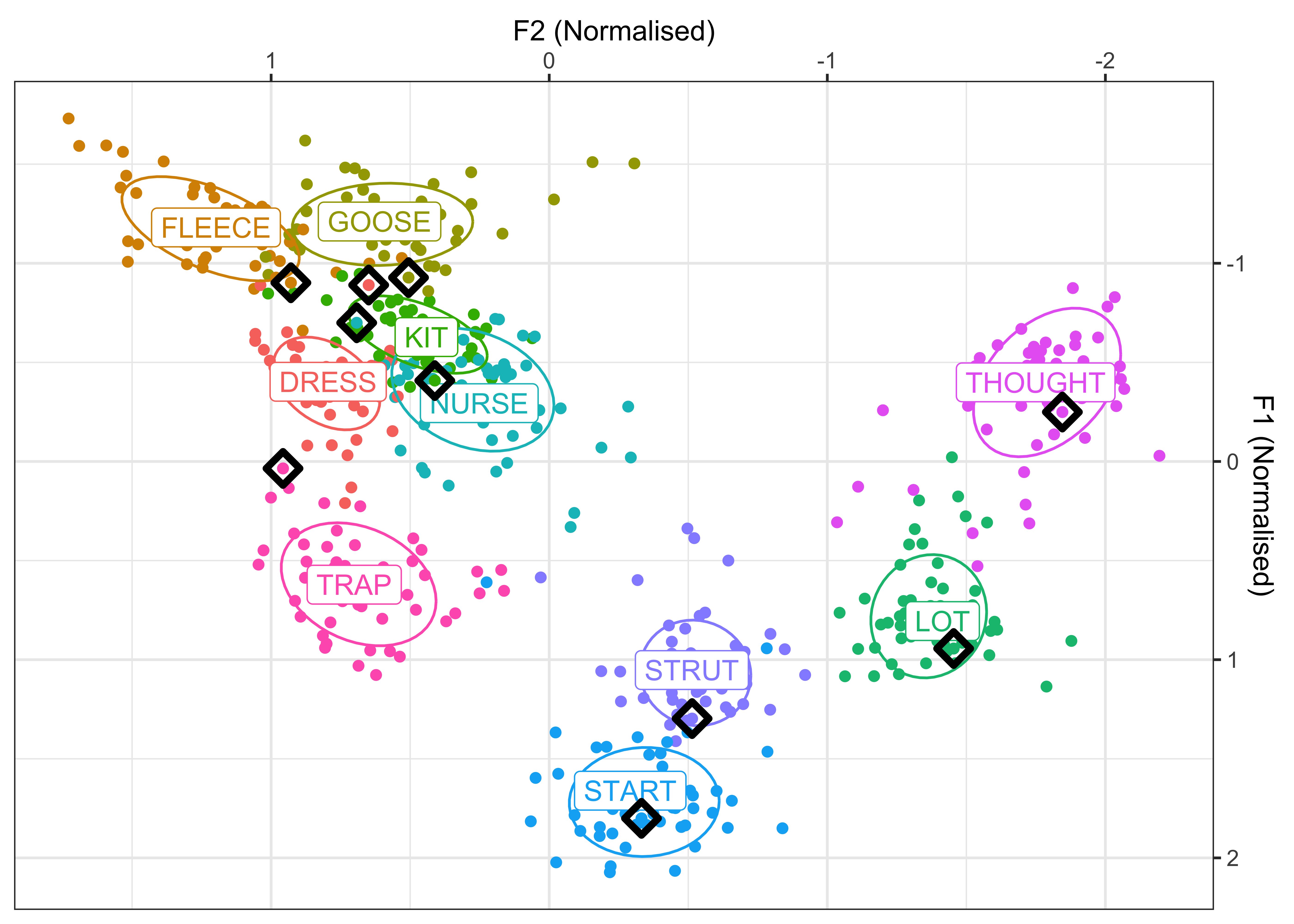

Make a plot for Figure 1 in the manuscript, of speakers born between 1900-1930. This will show the normalised vowel space and individual speaker means for the 10 vowels. There will also be ellipses to show the variation in the vowel productions and the black points indicate the individual vowel means, calculated across the sample of speakers.

#calculate individual speaker means for each vowel

vowel_means_example <- vowels_all %>%

filter(participant_year_of_birth %in% c(1900:1930)) %>%

group_by(Speaker, Vowel, Gender, participant_year_of_birth) %>%

summarise(mean_F1 = mean(F1_lobanov_2.0),

mean_F2 = mean(F2_lobanov_2.0))

vowel_means_example1 <- vowels_all %>%

filter(participant_year_of_birth %in% c(1900:1930)) %>%

group_by(Vowel) %>%

summarise(mean_F1 = mean(F1_lobanov_2.0),

mean_F2 = mean(F2_lobanov_2.0))

vowel_means_example_plot <- vowel_means_example %>%

ggplot(aes(x = mean_F2, y = mean_F1, colour = Vowel)) +

geom_point() +

stat_ellipse(level = 0.67) +

geom_label(data = vowel_means_example1, aes(label = Vowel)) +

geom_point(data = vowel_means_example %>% filter(Speaker == "CC_f_326")) +

geom_point(data = vowel_means_example %>% filter(Speaker == "CC_f_326"), colour = "black", size = 3, shape = 5, stroke = 2) +

scale_x_reverse(name = "F2 (Normalised)", position = "top") +

scale_y_reverse(name = "F1 (Normalised)", position = "right") +

theme_bw() +

theme(legend.position = "none")

vowel_means_example_plot

ggsave(plot = vowel_means_example_plot, filename = "Figures/vowel_means_example.png", dpi = 400)GAMM modelling

In order to analyse co-variation in the dataset, we first must extract a measure of how the speakers vocalic variables differ from one another. To achieve this, we first run a series of Generalised Additive Mixed Models (GAMMs), from which we can extract the by-speaker random intercepts. This is done using the mgcv and itsadug packages, if you are unfamiliar with this form of analysis, see (Winter and Wieling, (2016))[https://academic.oup.com/jole/article/1/1/7/2281883] or (Sóskuthy, (2017))[https://arxiv.org/abs/1703.05339], for further information about why we chose the by-speaker intercepts, please refer to the manuscript or see Drager and Hay (2012)

In total there will be 20 separate models (10 vowels x 2 formants) that will be fitted, each of which we will extract the random intercepts from the random effect of Speaker, as well as the model summary.

Fitting procedure

Each of the models will use the data from one of the 10 vowels (in the Vowel variable) and will have either the F1_lobanov_2.0 or the F2_lobanov_2.0 variable as the dependent/predicted measure.

All models will be fit uniformly, i.e. with the same fixed and random effects structures.

The fixed effects are:

An interaction between

participant_year_of_birthandGenderparticipant_year_of_birthGenderSpeech_rate

The random effects are:

SpeakerWord

The participant_year_of_birth variable is modeled with a smooth term with 10 knots, this is to account for the non-linear ‘wiggliness’ of the effect.

To run the models in an efficient way and store the by-speaker intercepts, we use a for loop to iterate through each of the vowels, extracting the intercepts from each model and adding them to a data frame.

A for loop works by iterating over each value in a series, here we will loop through each value in our Vowels variable and extract the relevant information.

e.g the for loop will start with DRESS, run the GAMM for F1, extract the by-speaker intercepts from that model, it will then run the GAMM for F2, extract the speaker intercepts from this model, then add the 2 sets of intercepts to a data frame (gam_intercepts.tmp). The loop will then move on to the next vowel, FLEECE and do exactly the same process. The loop will finish once all vowels have been ‘looped’ through.

This will result in a data frame comprising:

494 rows (one row per speaker)

1 column identifying the speaker name

20 additional columns identifying the variable being modeled (e.g. F1_DRESS), the numeric values here represent the by-speaker intercepts from that variable’s model

Note, this process takes several hours (six and half hours on my machine) to complete. The output has been stored in files in the GAMM_output folder, for quick reference. Please see those files or load them in to your R session for the rest of the analysis if you do not run the following code chunk.

The intercepts are saved as gamm_intercepts.csv, the model summaries can be found in the model_summaries sub-folder, where each model summary is stored as a .rds file, e.g. gam_summary_F1_DRESS.rds contains the model summary for F1_DRESS.

#update the Gender variable to allow for conrast coding

vowels_all$Gender <- as.ordered(vowels_all$Gender)

contrasts(vowels_all$Gender) <- "contr.treatment"

vowels_all <- vowels_all %>%

arrange(as.character(Speaker))#create a data frame to store the intercepts from the models, this will initially contain just the speaker names

gam_intercepts.tmp <- vowels_all %>%

dplyr::select(Speaker) %>%

distinct()

#loop through the vowels

cat(paste0("Start time:\n", format(Sys.time(), "%d %B %Y, %r\n")))

for (i in levels(factor(vowels_all$Vowel))) {

#F1 modelling

#run the mixed-effects model on the vowel, i.e. if i = FLEECE this will model F1 for FLEECE

gam.F1 <- bam(F1_lobanov_2.0 ~

s(participant_year_of_birth, k=10, bs="ad", by=Gender) +

s(participant_year_of_birth, k=10, bs="ad") +

Gender +

s(Speech_rate) +

s(Speaker, bs="re") +

s(Word, bs="re"),

data=vowels_all %>% filter(Vowel == i),

discrete=T, nthreads=2)

#extract the speaker intercepts from the model and store them in a temporary data frame

gam.F1.intercepts.tmp <- as.data.frame(get_random(gam.F1)$`s(Speaker)`)

#assign the model to an object

assign(paste0("gam_F1_", i), gam.F1)

#save the model summary

saveRDS(gam.F1, file = paste0("/Users/james/Documents/GitHub/model_summaries/gam_F1_", i, ".rds"))

cat(paste0("F1_", i, ": ", format(Sys.time(), "%d %B %Y, %r"), " ✅\n")) #print the vowel the loop is up to for F1, as well as the start time for the model

#F2 modelling

#run the mixed-effects model on the vowel, i.e. if i = FLEECE this will model F2 for FLEECE

gam.F2 <- bam(F2_lobanov_2.0 ~

s(participant_year_of_birth, k=10, bs="ad", by=Gender) +

s(participant_year_of_birth, k=10, bs="ad") +

Gender +

s(Speech_rate) +

s(Speaker, bs="re") +

s(Word, bs="re"),

data=vowels_all %>% filter(Vowel == i),

discrete=T, nthreads=2)

#extract the speaker intercepts again, storing them in a separate data frame

gam.F2.intercepts.tmp <- as.data.frame(get_random(gam.F2)$`s(Speaker)`)

#assign the model to an object

assign(paste0("gam_F2_", i), gam.F2)

#save the model summary

saveRDS(gam.F2, file = paste0("/Users/james/Documents/GitHub/model_summaries/gam_F2_", i, ".rds"))

#rename the variables so it clear which one has F1/F2, i.e. this will give F1_FLEECE, F2_FLEECE

names(gam.F1.intercepts.tmp) <- paste0("F1_", i)

names(gam.F2.intercepts.tmp) <- paste0("F2_", i)

#combine the intercepts for F1 and F2 and store them in the intercepts.tmp_stress data frame

gam_intercepts.tmp <- cbind(gam_intercepts.tmp, gam.F1.intercepts.tmp, gam.F2.intercepts.tmp)

cat(paste0("F2_", i, ": ", format(Sys.time(), "%d %B %Y, %r"), " ✅\n")) #print the vowel the loop is up to for F2 , as well as the start time for the model

}

#save the intercepts as a .csv file

write.csv(gam_intercepts.tmp, "Data/gam_intercepts_tmp_new.csv", row.names = FALSE)Read in pre-run model results

In order to save time running through the GAMM modelling chunk above, the results have been stored in the repository for quick loading. The below code will load the files in to your R session.

#load in model intercepts

gam_intercepts.tmp <- read.csv("Data/gam_intercepts_tmp_new.csv")#make vector containing all .rds filenames from model_summaries folder

model_summary_files = list.files(pattern="*.rds", path = "/Users/james/Documents/GitHub/model_summaries")

#load each of the files with for loop

for (i in model_summary_files) {

cat(paste0(i, ": ", format(Sys.time(), "%d %B %Y, %r"), " ✅\n"))

assign(gsub(".rds", "", i), readRDS(paste0("/Users/james/Documents/GitHub/model_summaries/", i)))

}Understanding the intercepts

In order to better understand these intercepts and how they represent each speaker’s position in relation to the population, it can be helpful to visualise the speaker intercepts in relation to the vowel space.

We interpret the speaker intercepts in terms of how advanced the vowel productions are in relation to other speakers with similar fixed-effects - in other words, if a speaker has a large positive intercept from a model (with a fixed-effects structure as that used in our above modelling procedure), this would indicate that the speaker is producing formant values that are typically larger than other speakers with similar fixed-effects values, e.g. year of birth, gender etc. Likewise, if the intercept is negative, this would indicate that their F values are smaller, the closer the intercept is to 0, the more typical the speaker is (taking into account the fixed-effects).

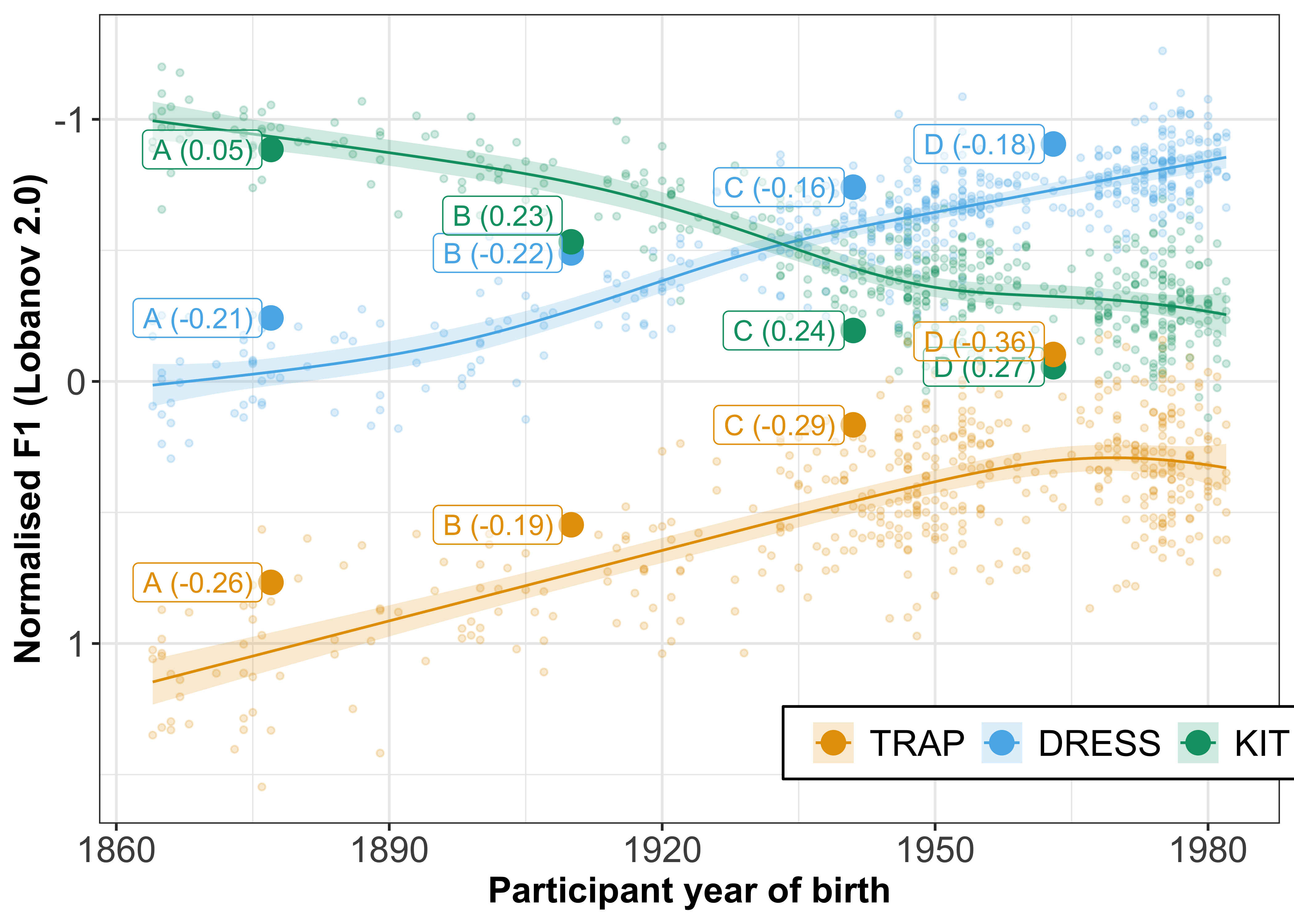

To demonstrate this, we will visualise the change in F1 for three vowels, known to have undergone rapid change in New Zealand English (TRAP, DRESS and KIT). We will plot the participant year of birth on the x axis and normalised F1 on the y axis (reverse scaled to match normal vowel plot conventions). To highlight the change in the three vowels over time, we will also fit a smooth and plot the mean F1 values of each speaker for each of the vowels. Finally, we will highlight 4 speakers who all have intercepts that indicate that they are advanced in these vowel changes, i.e. have negative intercepts (smaller F1) for TRAP and DRESS, but positive intercepts (larger F1) for KIT.

The first chunk calculates mean F1 values / speaker and merges this data with the speaker intercepts from the GAMMs.

The second chunk extracts predictions from the GAMMs for plotting smooths over year of birth. (This code block produces plots that are not included in the RMarkdown output).

vowels_to_plot <- c("KIT", "DRESS", "TRAP")

pred_table <- function (Vowel) {

mod_name <- paste0("gam_F1_", Vowel)

return(cbind(Vowel,

plot_smooth(get(mod_name), view="participant_year_of_birth",

rm.ranef=T, rug=F, n.grid=119)$fv)

)

}

gamm_preds_to_plot <- lapply(vowels_to_plot, pred_table) %>%

do.call(rbind, .)

saveRDS(gamm_preds_to_plot, "Data/Models/gamm_preds_to_plot.rds")gamm_preds_to_plot <- readRDS("Data/Models/gamm_preds_to_plot.rds")We now plot the data.

#make a long version of the intercepts

gam_intercepts.tmp_long <- gam_intercepts.tmp %>%

pivot_longer(F1_DRESS:F2_TRAP, names_to = "Vowel_formant", values_to = "Intercept") %>%

mutate(Formant = substr(Vowel_formant, 1, 2),

Vowel = substr(Vowel_formant, 4, max(nchar(Vowel_formant)))) %>%

left_join(vowels_all %>%

select(Speaker, participant_year_of_birth) %>% distinct()) %>%

left_join(gamm_preds_to_plot %>% mutate(Formant = "F1") %>% select(participant_year_of_birth, Vowel, Formant, fit, ll, ul))

speakers1 <- gam_intercepts.tmp_long %>%

filter(Vowel_formant %in% c("F1_KIT", "F1_DRESS", "F1_TRAP")) %>%

ungroup() %>%

arrange(participant_year_of_birth) %>%

filter(Speaker %in% c("MU_m_348", "IA_f_341", "CC_m_167", "CC_f_297")) %>%

mutate(letters = c(rep("A", 3), rep("B", 3), rep("C", 3), rep("D", 3)),

speakers1 = paste0(letters, " (", round(Intercept, 2), ")"))

F1_speaker_intercepts <- gam_intercepts.tmp_long %>%

filter(Vowel %in% c("KIT", "DRESS", "TRAP"),

Formant == "F1") %>% #choose the vowels

mutate(Vowel = factor(Vowel, levels = c("TRAP", "DRESS", "KIT"))) %>% #order the vowels

ggplot(aes(x = participant_year_of_birth, y = fit, colour = Vowel, fill = Vowel)) +

geom_point(aes(x = participant_year_of_birth, y = fit + Intercept), size = 1, alpha = 0.2) +

geom_line() +

geom_ribbon(aes(ymin=ll, ymax=ul), colour = NA, alpha=0.2) +

geom_point(data = speakers1 %>% mutate(fit = ifelse(speakers1 %in% c("D (-0.36)"), fit - 0.05, fit)), aes(x = participant_year_of_birth, y = fit + Intercept), size = 4) +

geom_label(data = speakers1 %>% mutate(fit = ifelse(speakers1 %in% c("B (0.23)"), fit - 0.1, fit), fit = ifelse(speakers1 %in% c("D (-0.36)"), fit - 0.1, fit)), aes(x = participant_year_of_birth - 1, y = fit + Intercept, label = speakers1), fill = "white", alpha = 0.5, hjust = 1, show.legend = FALSE) +

scale_y_reverse() +

scale_colour_manual(values=c("#E69F00", "#56B4E9", "#009E73")) +

scale_fill_manual(values=c("#E69F00", "#56B4E9", "#009E73")) +

xlab("Participant year of birth") + #x axis title

ylab("Normalised F1 (Lobanov 2.0)") + #y axis title

theme_bw() + #general aesthetics

theme(legend.position = c(0.8, 0.1), #legend position

legend.direction = "horizontal",

legend.background = element_rect(colour = "black"),

axis.text = element_text(size = 14), #text size

axis.title = element_text(face = "bold", size = 14), #axis text aesthetics

legend.title = element_blank(), #legend title text size

legend.text = element_text(size = 14)) #legend text size

F1_speaker_intercepts

ggsave(plot = F1_speaker_intercepts, filename = "Figures/F1_speaker_intercepts.png", width = 10, height = 5, dpi = 400)

#clean up

rm(speakers, speakers1, F1_speaker_intercepts)Visualisation of intercept correlations

Understanding how the intercepts may be correlated to each other is an important first step to how they relate to each other. However, simply using correlations to gain a full understanding of how the covariation is operating, may not be sufficient. We present below an initial outline of the correlations between the intercepts.

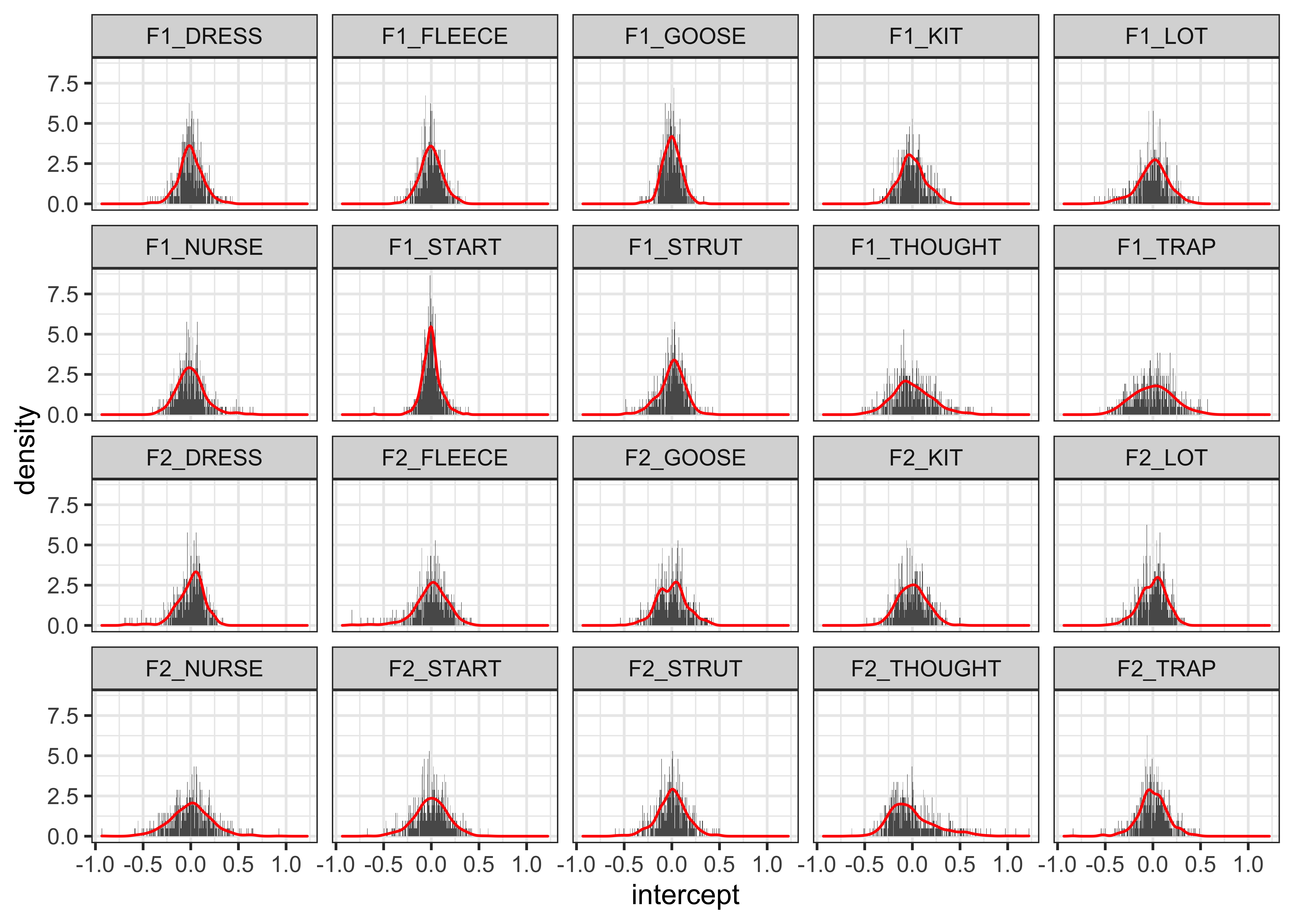

- Checking the distribution of the intercepts

gam_intercepts.tmp %>%

select(-1) %>%

pivot_longer(cols = 1:20, names_to = "variable", values_to = "intercept") %>%

ggplot(aes(x = intercept, y = ..density..)) +

geom_histogram(bins = 500) +

geom_density(outline.type = "upper", colour = "red", bw = "SJ") +

facet_wrap(~variable) +

theme_bw()

- The correlations themselves

intercepts_cor <- cor(gam_intercepts.tmp[-1] %>%

select(starts_with("F1"), starts_with("F2")))

datatable(intercepts_cor) %>%

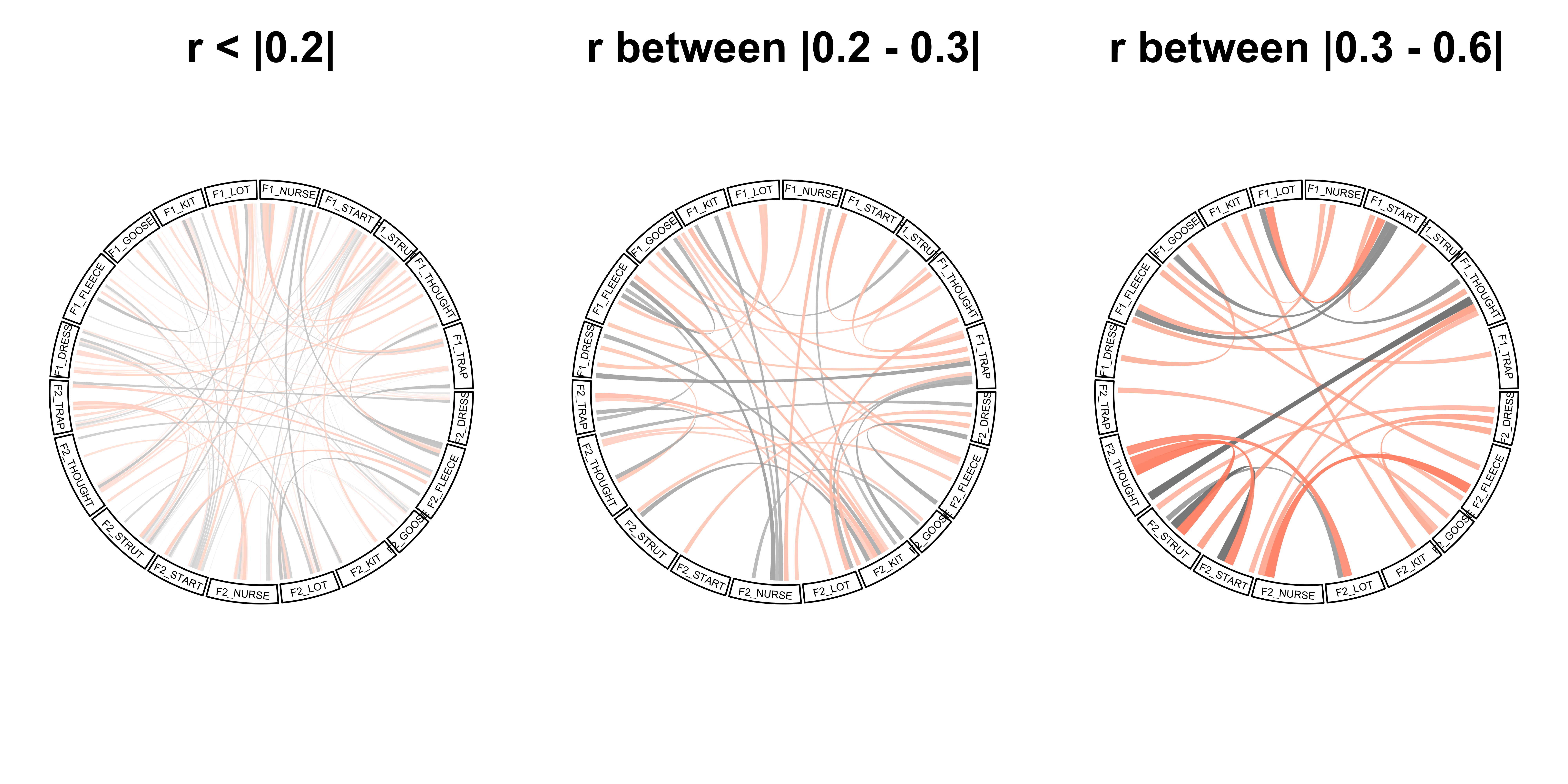

formatRound(columns = 1:20)- A chord plot that visualises the correlations. This plot will give a more simplified representation of the above plot, it connects each of the variables to all the other variables via chords. The black chords indicate a positive correlation, whereas a red chord represents a negative correlation. The size and transparency of the chord indicates the strength of the correlation, with darker wider chords being used for the strongest correlations

The code below adapts the code used in Zuguang Gu’s tutorial (see http://jokergoo.github.io/blog/html/large_matrix_circular.html)

mat <- cor(gam_intercepts.tmp[-1] %>%

select(starts_with("F2"), starts_with("F1")))

diag(mat) = 0

mat[lower.tri(mat)] = 0

n = nrow(mat)

rn = rownames(mat)

group_size = c(rep(1, 20))

gl = lapply(1:20, function(i) {

rownames(mat)[sum(group_size[seq_len(i-1)]) + 1:group_size[i]]

})

names(gl) = names(mat)

group_color = structure(circlize::rand_color(20), names = names(gl))

n_group = length(gl)

col_fun = colorRamp2(c(-1, 0, 1), c("red", "transparent", "black"), transparency = 0.1)

par(mfrow=c(1,3))

# < |0.2|

mat1 <- ifelse(mat < 0.2 & mat > -0.2, mat, 0)

col2 <- col_fun(mat1)

col2 <- ifelse(col2 == "#FFFFFFE6", NA, col2)

chordDiagram(mat, col = col2, grid.col = NA, grid.border = "black",

annotationTrack = "grid", link.largest.ontop = TRUE,

preAllocateTracks = list(

list(track.height = 0.02)

)

)

title("r < |0.2|", cex.main = 2.5, line = -2)

circos.trackPlotRegion(track.index = 2, panel.fun = function(x, y) {

xlim = get.cell.meta.data("xlim")

ylim = get.cell.meta.data("ylim")

sector.index = get.cell.meta.data("sector.index")

circos.text(mean(xlim), mean(ylim), sector.index, col = "black", cex = 0.6,

facing = "inside", niceFacing = TRUE)

}, bg.border = NA)

# |0.2-0.3|

mat1 <- ifelse(mat > 0.3 | mat < -0.3, 0, mat)

mat1 <- ifelse(mat1 < 0.2 & mat1 > -0.2, 0, mat1)

col2 <- col_fun(mat1)

col2 <- ifelse(col2 == "#FFFFFFE6", NA, col2)

chordDiagram(mat, col = col2, grid.col = NA, grid.border = "black",

annotationTrack = "grid", link.largest.ontop = TRUE,

preAllocateTracks = list(

list(track.height = 0.02)

)

)

title("r between |0.2 - 0.3|", cex.main = 2.5, line = -2)

circos.trackPlotRegion(track.index = 2, panel.fun = function(x, y) {

xlim = get.cell.meta.data("xlim")

ylim = get.cell.meta.data("ylim")

sector.index = get.cell.meta.data("sector.index")

circos.text(mean(xlim), mean(ylim), sector.index, col = "black", cex = 0.6,

facing = "inside", niceFacing = TRUE)

}, bg.border = NA)

# > |0.3|

mat1 <- ifelse(mat > 0.3 | mat < -0.3, mat, 0)

col2 <- col_fun(mat1)

col2 <- ifelse(col2 == "#FFFFFFE6", NA, col2)

chordDiagram(mat, col = col2, grid.col = NA, grid.border = "black",

annotationTrack = "grid", link.largest.ontop = TRUE,

preAllocateTracks = list(

list(track.height = 0.02)

)

)

title("r between |0.3 - 0.6|", cex.main = 2.5, line = -2)

circos.trackPlotRegion(track.index = 2, panel.fun = function(x, y) {

xlim = get.cell.meta.data("xlim")

ylim = get.cell.meta.data("ylim")

sector.index = get.cell.meta.data("sector.index")

circos.text(mean(xlim), mean(ylim), sector.index, col = "black", cex = 0.6,

facing = "inside", niceFacing = TRUE)

}, bg.border = NA)

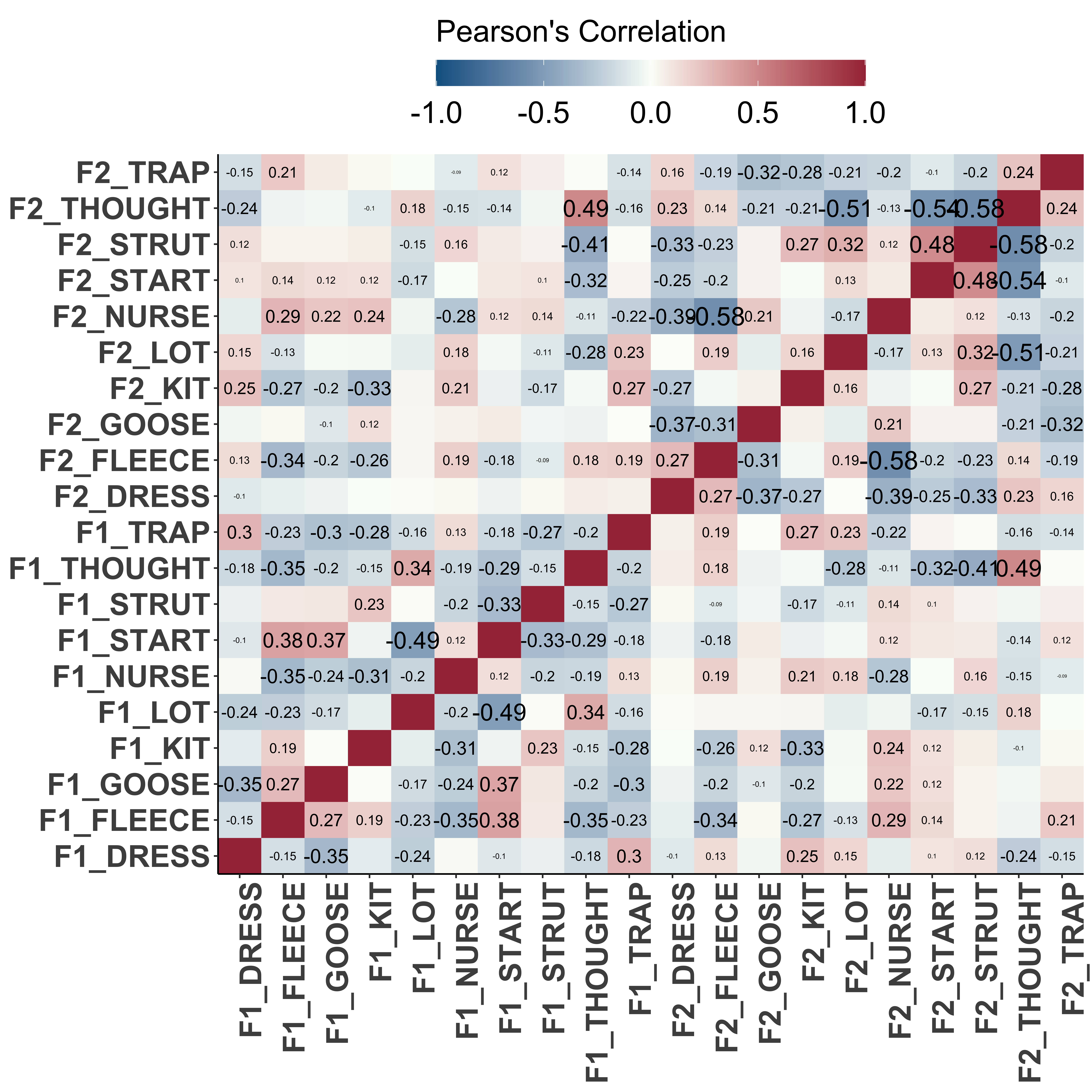

- Heat map of the correlations

cors <- function(df) {

# turn all three matrices (r, n, and P into a data frame)

M <- Hmisc::rcorr(as.matrix(df))

# return the three data frames in a list return(Mdf)

Mdf <- map(M, ~data.frame(.x))

}

formatted_cors <- function(df){

cors(df) %>%

map(~rownames_to_column(.x, var="measure1")) %>%

map(~pivot_longer(.x, -measure1, "measure2")) %>%

bind_rows(.id = "id") %>%

pivot_wider(names_from = id, values_from = value) %>%

mutate(sig_p = ifelse(P < .05, T, F), p_if_sig = ifelse(P <.05, P, NA), r_if_sig = ifelse(P <.05, r, NA))

}

formatted_cors(gam_intercepts.tmp[-1]) %>%

ggplot(aes(x = measure1, y = measure2, fill = r, label=round(r_if_sig,2))) +

geom_tile() +

geom_text(aes(size = abs(r_if_sig)), show.legend = FALSE) +

labs(x = NULL, y = NULL, fill = "Pearson's Correlation") +

# map a red, white and blue color scale to correspond to -1:1 sequential gradient

scale_fill_gradient2(mid="#FBFEF9",low="#0C6291",high="#A63446", limits=c(-1,1)) +

theme_classic() +

# remove excess space on x and y axes

scale_x_discrete(expand=c(0,0)) +

scale_y_discrete(expand=c(0,0)) +

# change global font to roboto

theme(axis.text = element_text(size = 20, face = "bold"),

axis.text.x = element_text(angle = 90, hjust = 1),

legend.position = "top",

legend.key.width = unit(2, "cm"),

legend.title = element_text(size = 20),

legend.text = element_text(size = 20)) +

guides(fill = guide_colourbar(title.position = "top"))

- Table of correlations with significance

formatted_cors(gam_intercepts.tmp[-1]) %>%

filter(r != 1) %>%

mutate(absolute_r = abs(r)) %>%

arrange(-absolute_r) %>%

group_by(r) %>%

mutate(id = row_number()) %>%

ungroup() %>%

filter(id == 1) %>%

datatable() %>%

formatRound(c("r", "r_if_sig", "P", "p_if_sig", "absolute_r"), 3)- Permutation of correlations

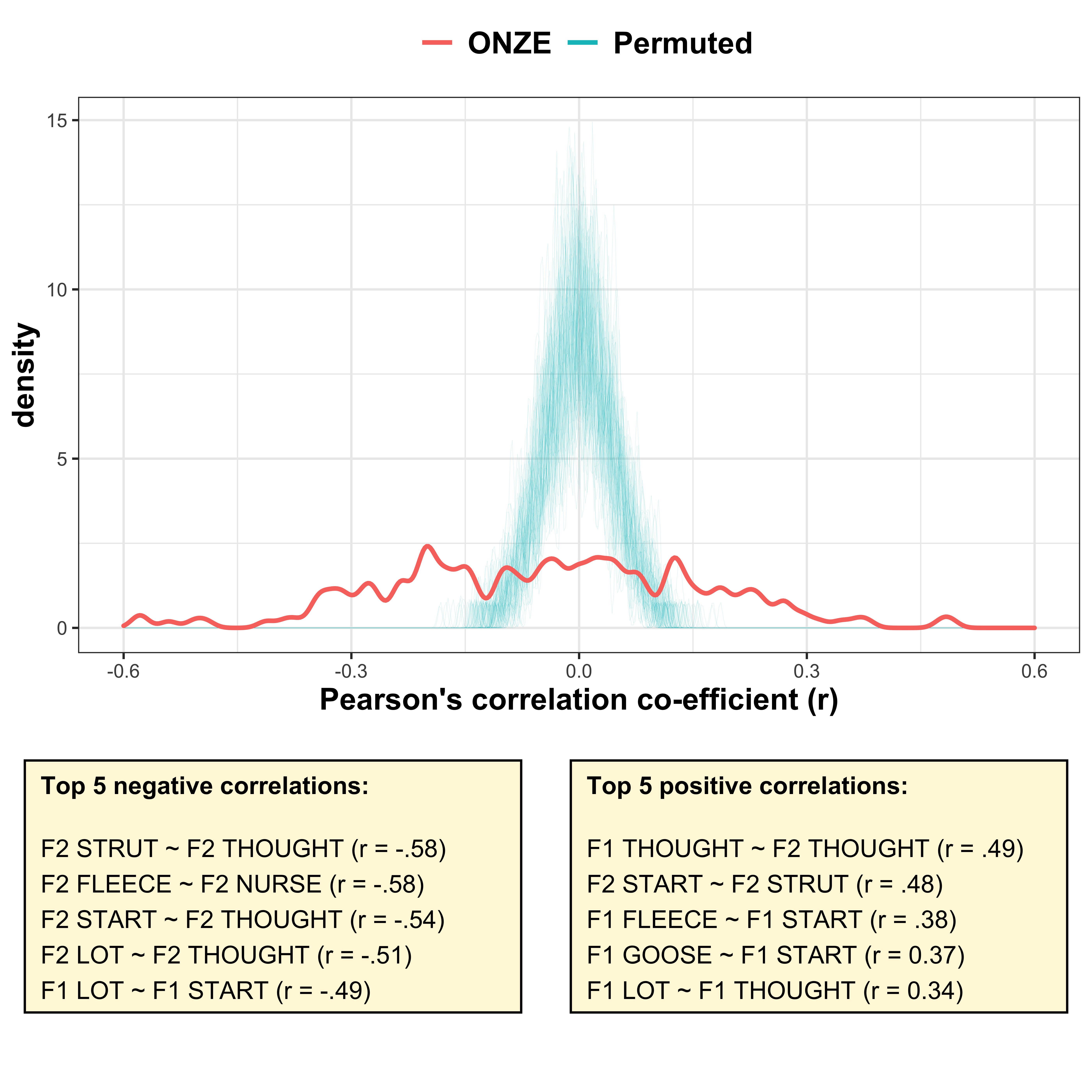

This is the plot we include in the manuscript.

We show the distribution of correlation co-efficients across the intercepts (plotted in red), highlighting that there are a range of positive/negative correlations, some stronger than others.

We permute the correlations 100 times (which is a process of shuffling the values around to remove any underlying structure). This distribution is plotted in blue and shows that the random correlations are generally between |1.5|. Thus, the correlations in the ONZE dataset are likely to represent actual co-variation between the variables.

correlations_permuted <- gam_intercepts.tmp[-1] %>%

formatted_cors(.) %>%

mutate(intercepts = "ONZE")

for (i in 1:100) {

permuted <- formatted_cors(apply(gam_intercepts.tmp[-1], 2L, sample)) %>% mutate(intercepts = i)

correlations_permuted <<- rbind(correlations_permuted, permuted)

}

correlations_permuted <- correlations_permuted %>%

mutate(Data = ifelse(intercepts == "ONZE", "ONZE", "Permuted")) %>%

filter(r < 1)

correlations_permuted_plot <- ggplot(correlations_permuted, aes(x = r, y = ..density.., colour = Data, group = intercepts)) +

# geom_histogram(alpha = 0.1, bins = 481) +

geom_line(aes(x = r, y = P),size = 0) +

geom_density(aes(colour = Data), adjust = 0.2, size = 0.01, alpha = 1, show.legend = FALSE) +

geom_density(data = correlations_permuted %>% filter(intercepts == "ONZE"), adjust = 0.2, colour = "#F8766D", size = 1, show.legend = FALSE) +

scale_x_continuous(limits = c(-0.6, 0.6)) +

xlab("Pearson's correlation co-efficient (r)") +

# facet_grid(~intercepts) +

theme_bw() +

theme(legend.position = "top",

legend.title = element_blank(),

axis.title = element_text(size = 14, face = "bold"),

legend.text = element_text(size = 14, face = "bold")) +

guides(colour = guide_legend(override.aes = list(size=1)))

positive_correlations <- ggplot(correlations_permuted, aes(x = r, y = ..density.., colour = Data, group = intercepts)) +

geom_density(size = 0) +

annotate("rect", xmin = -1, xmax = 0.57, ymin = 2, ymax = 10, fill = "cornsilk", colour = "black") +

annotate("text", label = "Top 5 positive correlations:", fontface = 2, x = -0.95, y = 9.5, hjust = 0, vjust = 1, size = 4) +

annotate("text", label = "F1 THOUGHT ~ F2 THOUGHT (r = .49)\nF2 START ~ F2 STRUT (r = .48)\nF1 FLEECE ~ F1 START (r = .38)\nF1 GOOSE ~ F1 START (r = 0.37)\nF1 LOT ~ F1 THOUGHT (r = 0.34)", x = -0.95, y = 7.5, hjust = 0, vjust = 1, size = 4) +

theme_nothing()

negative_correlations <- ggplot(correlations_permuted, aes(x = r, y = ..density.., colour = Data, group = intercepts)) +

geom_density(size = 0) +

annotate("rect", xmin = -1, xmax = 0.57, ymin = 2, ymax = 10, fill = "cornsilk", colour = "black") +

annotate("text", label = "Top 5 negative correlations:", fontface = 2, x = -0.95, y = 9.5, hjust = 0, vjust = 1, size = 4) +

annotate("text", label = "F2 STRUT ~ F2 THOUGHT (r = -.58)\nF2 FLEECE ~ F2 NURSE (r = -.58)\nF2 START ~ F2 THOUGHT (r = -.54)\nF2 LOT ~ F2 THOUGHT (r = -.51)\nF1 LOT ~ F1 START (r = -.49)", x = -0.95, y = 7.5, hjust = 0, vjust = 1, size = 4) +

theme_nothing()

correlations_permuted_plot1 <- plot_grid(correlations_permuted_plot, plot_grid(negative_correlations, positive_correlations, nrow = 1, ncol = 2), rel_heights = c(0.8, 0.4), nrow = 2, ncol = 1)

correlations_permuted_plot1

ggsave(plot = correlations_permuted_plot1, filename = "Figures/correlations_permuted_plot.png", width = 7, height = 7, dpi = 400)Principal Components Analysis (PCA)

In the following section we will run a PCA on the gam_intercepts.tmp data frame, i.e. the by-speaker intercepts from each of the 20 GAMMs.

PCA offers a neat analysis solution to assessing whether there is underlying structure in the dataset, by highlighting which variables co-vary together - these are called principal components (PCs). Moreover, it also allows us to explore the data at the by-speaker level, providing us with insights into who is on the margins of these PCs.

Running the PCA

#run the PCA on the intercepts data frame, note the intercepts are in columns 2:21 as column 1 is the speaker name

intercepts.pca <- princomp(gam_intercepts.tmp[, 2:ncol(gam_intercepts.tmp)],cor=TRUE)

#print a summary of the PCA, this will give the variance explained by each PC

summary(intercepts.pca)## Importance of components:

## Comp.1 Comp.2 Comp.3 Comp.4 Comp.5

## Standard deviation 1.8568236 1.7761654 1.4218609 1.27801065 1.11351321

## Proportion of Variance 0.1723897 0.1577382 0.1010844 0.08166556 0.06199558

## Cumulative Proportion 0.1723897 0.3301279 0.4312123 0.51287786 0.57487344

## Comp.6 Comp.7 Comp.8 Comp.9 Comp.10

## Standard deviation 1.0424970 1.00352469 0.95546926 0.90696297 0.87916274

## Proportion of Variance 0.0543400 0.05035309 0.04564608 0.04112909 0.03864636

## Cumulative Proportion 0.6292134 0.67956653 0.72521261 0.76634170 0.80498805

## Comp.11 Comp.12 Comp.13 Comp.14 Comp.15

## Standard deviation 0.80953378 0.77331213 0.73748412 0.72788478 0.67193061

## Proportion of Variance 0.03276725 0.02990058 0.02719414 0.02649081 0.02257454

## Cumulative Proportion 0.83775530 0.86765588 0.89485002 0.92134084 0.94391538

## Comp.16 Comp.17 Comp.18 Comp.19 Comp.20

## Standard deviation 0.61321385 0.55845231 0.47851298 0.372962769 0.25635209

## Proportion of Variance 0.01880156 0.01559345 0.01144873 0.006955061 0.00328582

## Cumulative Proportion 0.96271694 0.97831039 0.98975912 0.996714180 1.00000000#view eigen values, this will visualise the variance explained by each PC

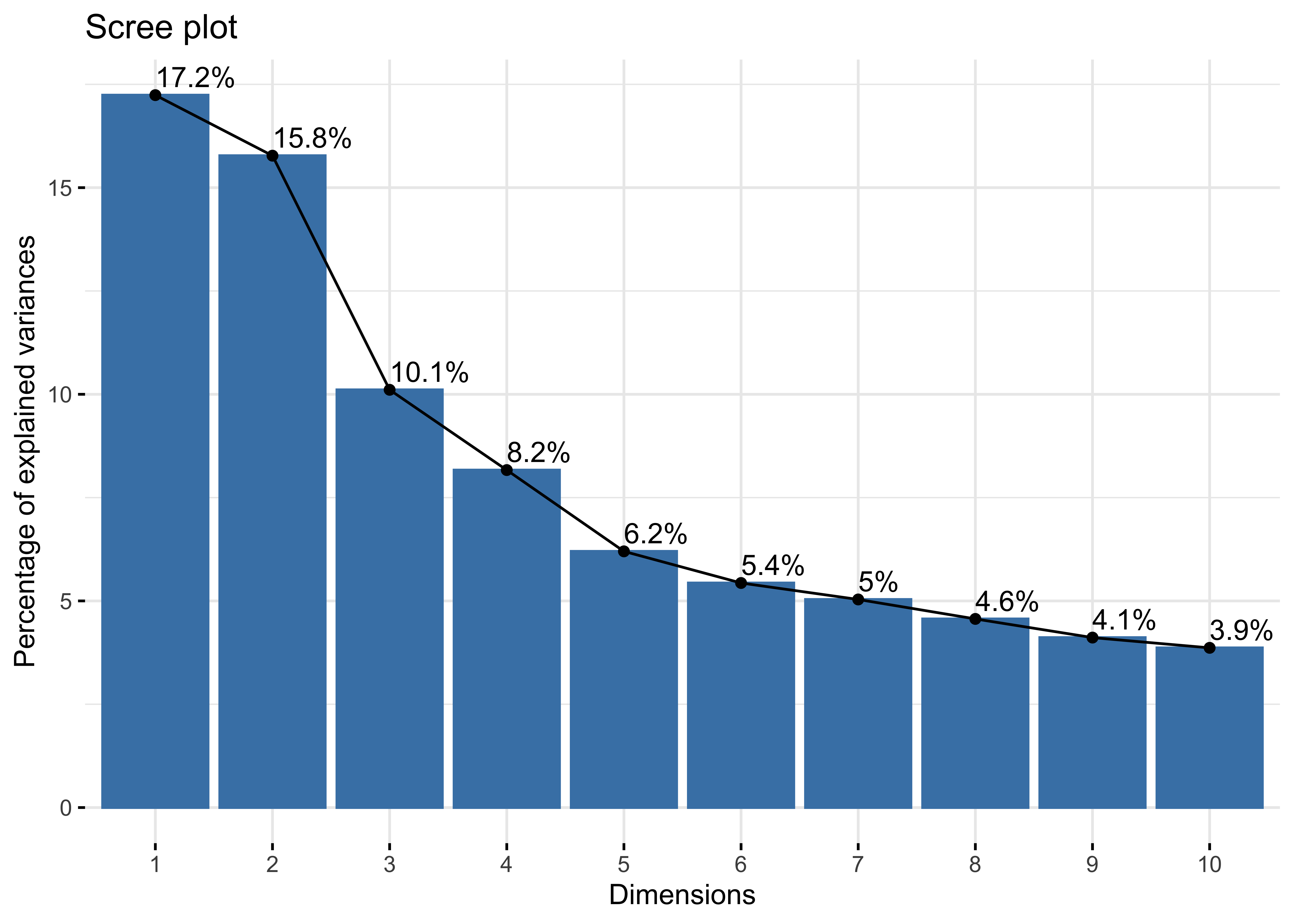

fviz_eig(intercepts.pca, addlabels = TRUE)

#create objects containing the loadings for each of the 3 main PCs

PC1_loadings <- intercepts.pca$loadings[,1]

PC2_loadings <- intercepts.pca$loadings[,2]

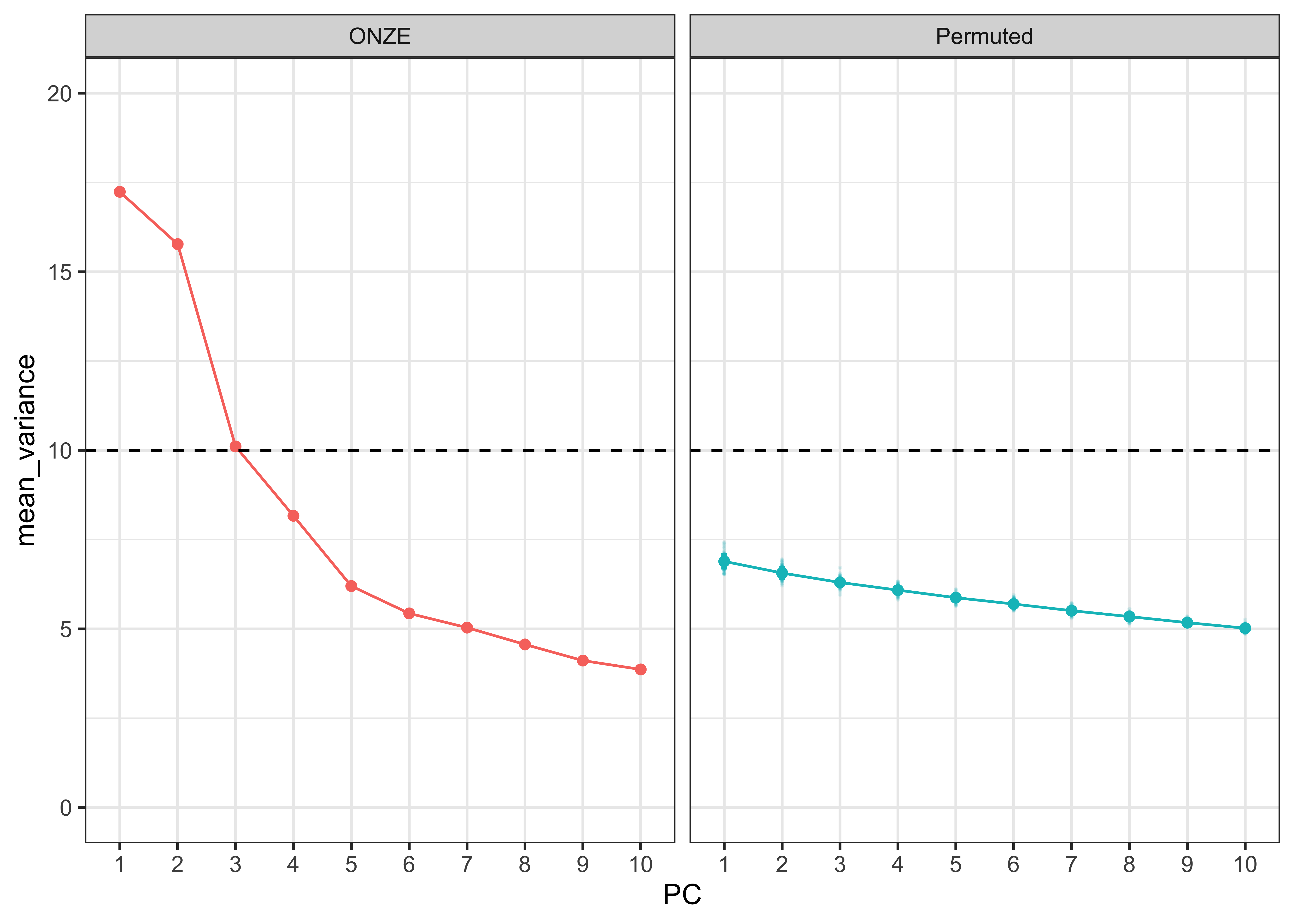

PC3_loadings <- intercepts.pca$loadings[,3]We can also permute the data again and run the PCA to compare how the ONZE intercepts compare to permuted intercepts. We can see a clear difference between the two data sets. The 4th PC from the ONZE intercepts does appear to explain more variance than the permuted intercepts, but we chose to focus on the first 3 PCs as each explains > 10% of the variance in the analysis (dashed horizontal line), which is commonly taken as a threshold for meaningfully interpretable PCs.

PCA_variance_permuted <- get_eigenvalue(intercepts.pca)[1:10, 2] %>%

data.frame() %>%

rename(Variance = 1) %>%

mutate(PC = paste0(1:10),

Permutation = "ONZE",

Data = "ONZE") %>%

select(PC, Variance, Permutation, Data)

for (i in 1:100) {

permuted <- apply(gam_intercepts.tmp[-1], 2L, sample)

permuted_pca <- princomp(permuted, cor = TRUE)

PCA_variance <- get_eigenvalue(permuted_pca)[1:10, 2] %>%

data.frame() %>%

rename(Variance = 1) %>%

mutate(PC = paste0(1:10),

Permutation = i,

Data = "Permuted") %>%

select(PC, Variance, Permutation, Data)

PCA_variance_permuted <<- rbind(PCA_variance_permuted, PCA_variance)

}

PCA_variance_permuted_plot <- PCA_variance_permuted %>%

group_by(Data, PC) %>%

summarise(mean_variance = mean(Variance),

sd = sd(Variance)) %>%

arrange(PC) %>%

mutate(PC = factor(PC, levels = c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)),

sd = ifelse(is.na(sd), 0, sd)) %>%

ggplot(aes(x = PC, y = mean_variance, colour = Data, group = Data)) +

geom_point(data = PCA_variance_permuted %>%

mutate(PC = factor(PC, levels = c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10))), aes(x = PC, y = Variance), size = 0.1, alpha = 0.1) +

geom_errorbar(aes(ymin=mean_variance-sd, ymax=mean_variance+sd), width=.1, position=position_dodge(0.1)) +

geom_line() +

geom_point() +

geom_hline(yintercept = 10, colour = "black", linetype = "dashed") +

# geom_label_repel(aes(label = paste0(round(mean_variance, 2), "%")), show.legend = FALSE) +

scale_y_continuous(limits = c(0, 20)) +

facet_grid(~Data) +

theme_bw()+

theme(legend.position = "none")

PCA_variance_permuted_plot

ggsave(plot = PCA_variance_permuted_plot, filename = "Figures/PCA_scree.png", width = 7, height = 5, dpi = 300)#store these in a data frame for ease of plotting

PC1_loadings <- PC1_loadings %>%

as.data.frame() %>%#convert to a data frame

dplyr::rename(Loading = 1) %>% #rename the variable

mutate(variable = row.names(.), #add variable to identify which intercepts are representing each row

highlight = ifelse(Loading > 0.225 | Loading < -0.225 , "black", "gray"), #for plotting reasons, we want to highlight the variables that contribute the most to the PC, so if the loading is > |0.2| it will plotted in black, if not it will be gray)

PC1_loadings_abs = abs(Loading), #make the loadings absolute so they are comparable when plotting

direction = ifelse(Loading < 0, "red", "black"), #define which direction the loading sign was, i.e. red if negative, black if positive

PC = "PC1",

Vowel = substr(variable, 4, 10)) %>% #add variable to identify which PC the data is from

arrange(PC1_loadings_abs) #order the variables based on absolute loading value

#repeat this process for PC2

PC2_loadings <- PC2_loadings %>%

as.data.frame() %>%

dplyr::rename(Loading = 1) %>%

mutate(variable = row.names(.),

highlight = ifelse(Loading > 0.225 | Loading < -0.225 , "black", "gray"),

PC2_loadings_abs = abs(Loading),

direction = ifelse(Loading < 0, "red", "black"),

PC = "PC2",

Vowel = substr(variable, 4, 10)) %>%

arrange(PC2_loadings_abs)

#repeat this process for PC3

PC3_loadings <- PC3_loadings %>%

as.data.frame() %>%

dplyr::rename(Loading = 1) %>%

mutate(variable = row.names(.),

highlight = ifelse(Loading > 0.225 | Loading < -0.225 , "black", "gray"),

PC3_loadings_abs = abs(Loading),

direction = ifelse(Loading < 0, "red", "black"),

PC = "PC3",

Vowel = substr(variable, 4, 10)) %>%

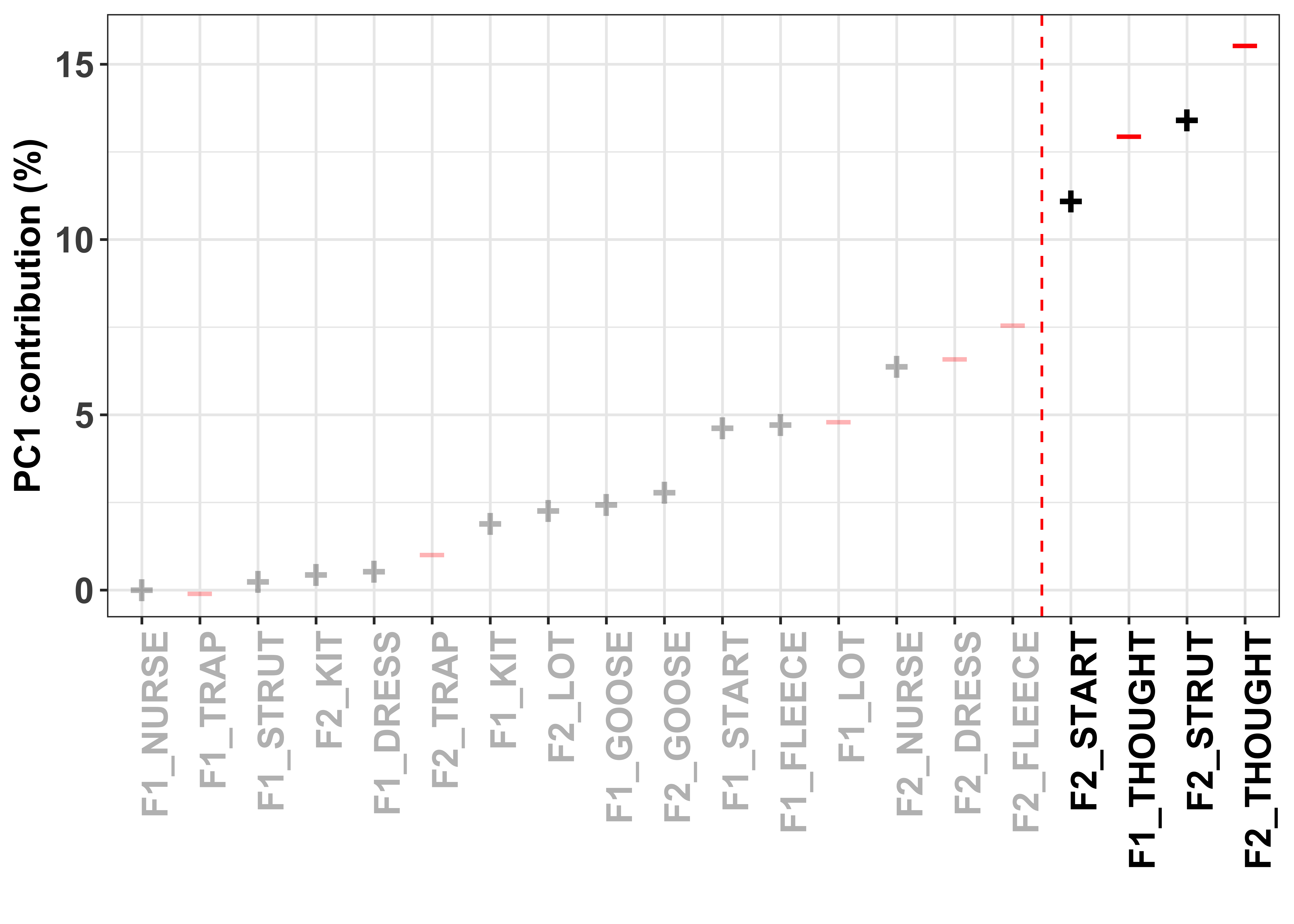

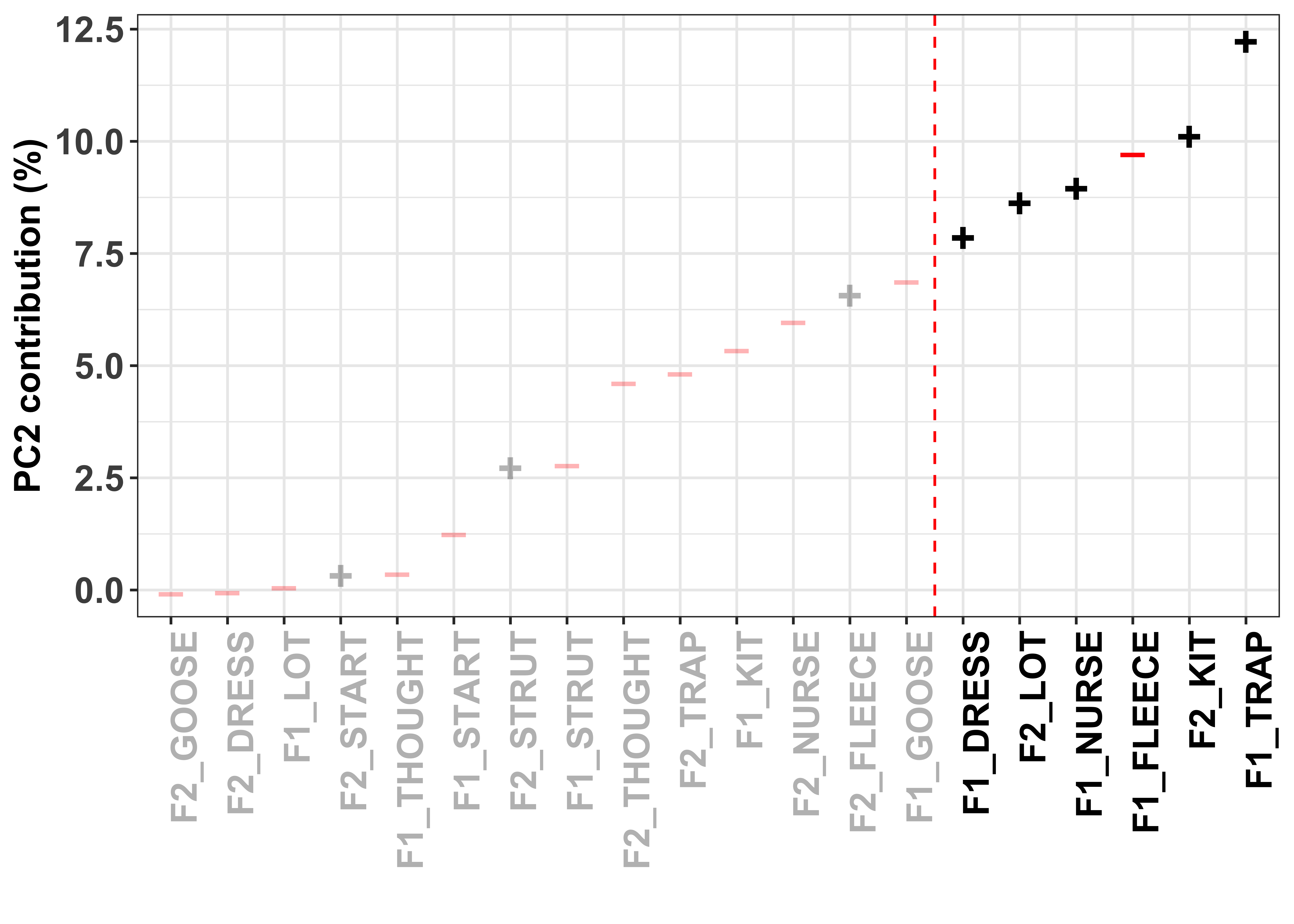

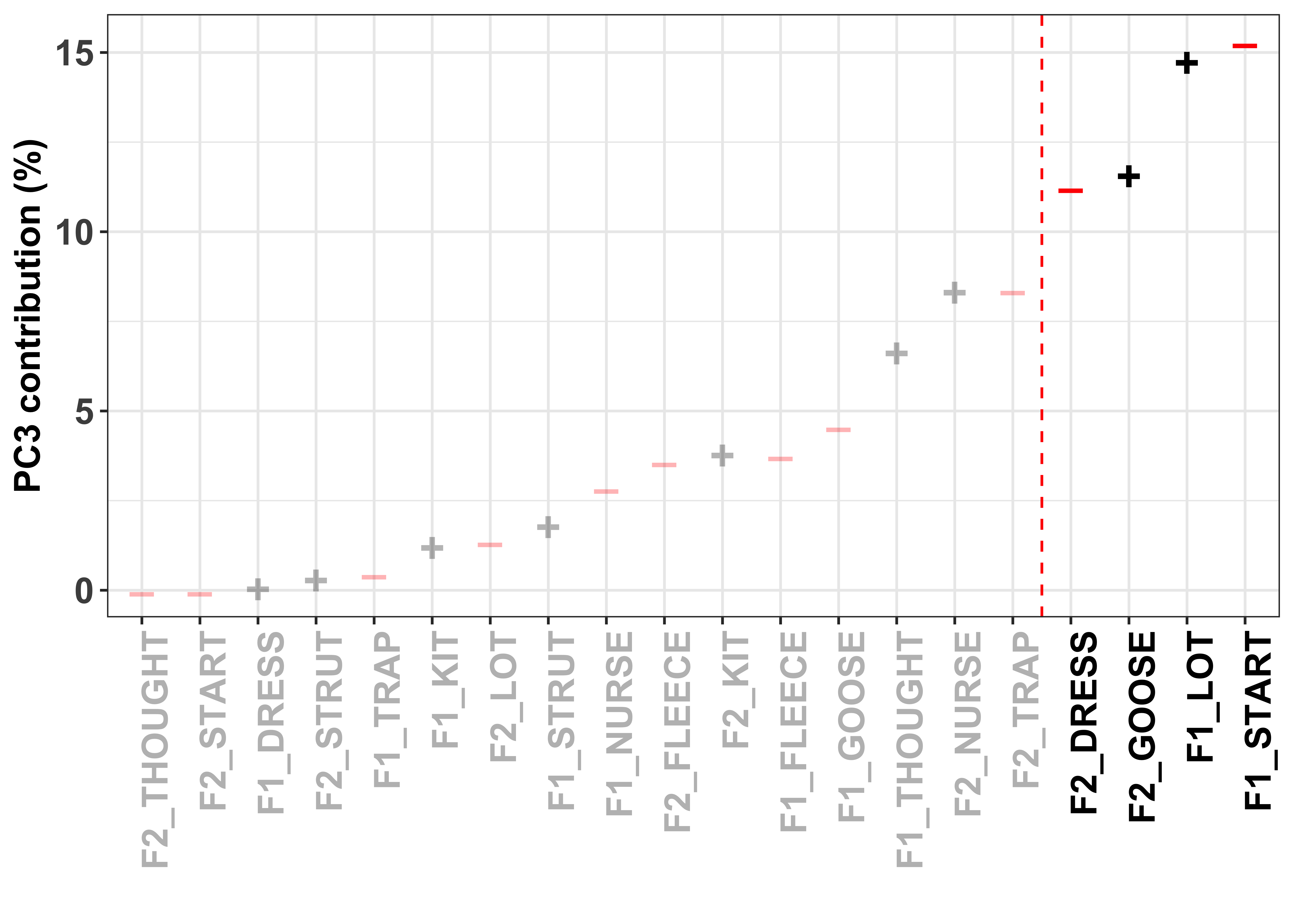

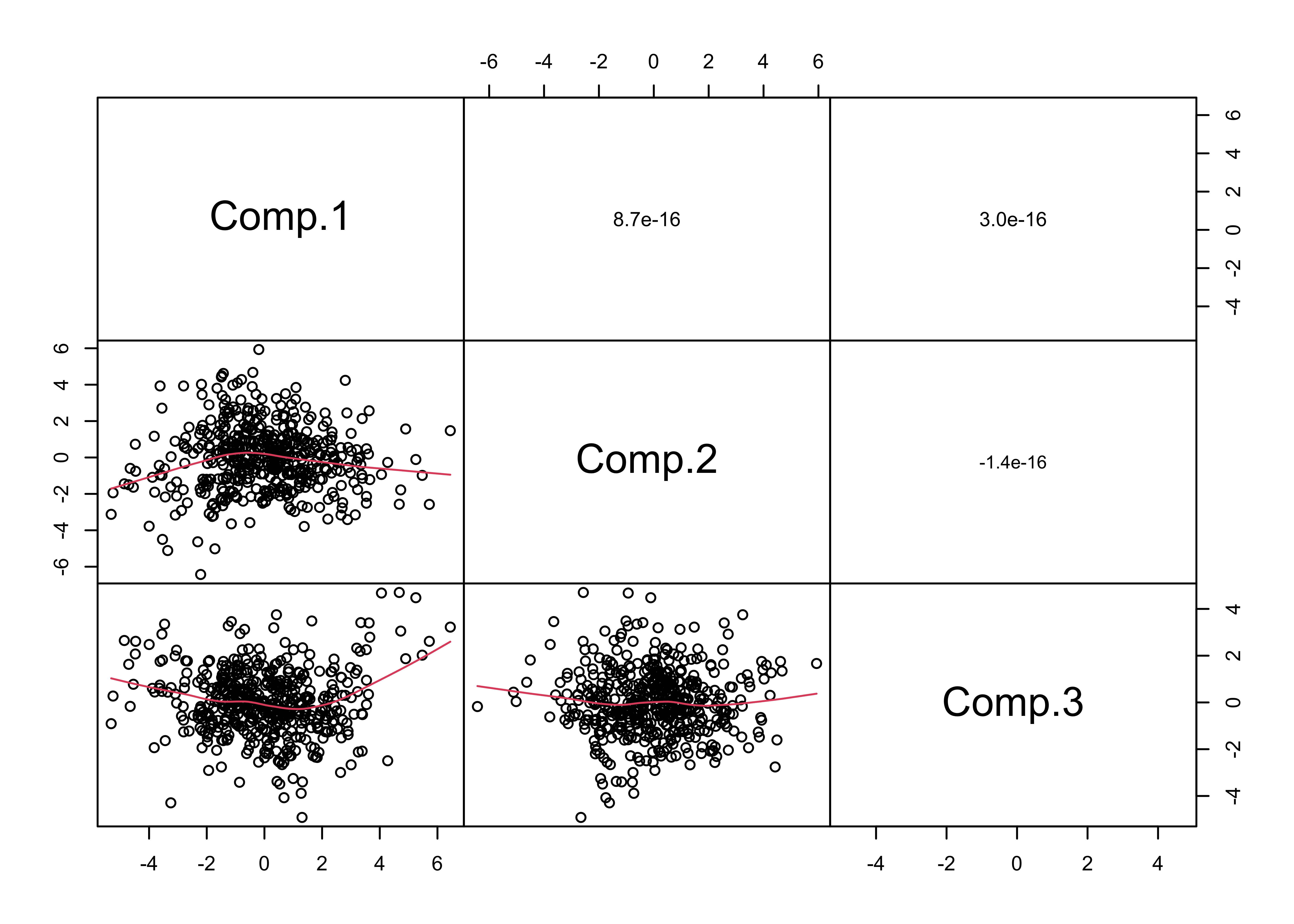

arrange(PC3_loadings_abs)PCs by Contribution

To visualise how the different variables contribute to the formation of the PC, we can look at their contribution values. These are simply the variable loading squared and then multiplied by 100, giving a value that is interpretable by means of a percent.

The red dashed line in these plots divides the variables on the basis of cumulative variance explained, whereby those variables to the right of the line collectively account for > 50% of the total variance. Thus, our (least arbitrary) way to define a cut-off point for ‘importance’ of the variables is to focus on those contributing > 50%.

The colour and sign of the dots in these plots is defined by the direction of the loadings in the PCA, i.e. - = negative and + = positive.

First we will wrangle the data from the PCA.

PC_loadings_contrib <- intercepts.pca$loadings[,1:3] %>% #get the loadings for PC1, PC2 and PC3

as.data.frame() %>%

cbind(get_pca(intercepts.pca, "var")$contrib[,1:3]) %>% #get the contributions

dplyr::rename(Loading.PC1 = Comp.1,

Loading.PC2 = Comp.2,

Loading.PC3 = Comp.3,

Contribution.PC1 = Dim.1,

Contribution.PC2 = Dim.2,

Contribution.PC3 = Dim.3) %>%

mutate(Variable = row.names(.),

Vowel = substr(Variable, 4, nchar(Variable))) %>%

dplyr::select(Variable, Vowel, Loading.PC1:Contribution.PC3) %>%

pivot_longer(Loading.PC1:Loading.PC3, names_to = "PC", values_to = "Loading") %>%

arrange(Vowel, Variable, PC) %>%

mutate(direction = ifelse(Loading < 0, "red", "black")) #define direction of the loadingsPC1

PC1_contrib <- PC_loadings_contrib %>%

select(-Contribution.PC2, -Contribution.PC3) %>%

filter(PC == "Loading.PC1") %>%

arrange(Contribution.PC1) %>%

mutate(Loading_abs = abs(Loading),

cumsum_PC1 = round(cumsum(Contribution.PC1), 4),

highlight = ifelse(cumsum_PC1 < 50, "grey", "black"),

highlight1 = ifelse(cumsum_PC1 < 50, 0.5, 1),

direction1 = direction,

direction_lab = ifelse(direction1=="red","–", "+"))

PC1_contrib_plot <- ggplot(PC1_contrib, aes(x=reorder(Variable, Contribution.PC1), y=Contribution.PC1)) + #have the variable name on the x axis and loading value on the y

geom_text(aes(alpha = highlight, label=ifelse(PC1_contrib$direction=="red","–", "+")), colour = PC1_contrib$direction, size = 6, fontface="bold", show.legend = FALSE) +

xlab("") + #remove the x axis title

ylab("PC1 contribution (%)") +

geom_vline(xintercept = 16.5, color = "red", linetype = "dashed") + #add a red dashed line to identify the 0.2 cut off

scale_alpha_manual(values=c(1, 0.3)) +

theme_bw() + #set the aesthetics of the plot

theme(axis.text.x = element_text(angle = 90, hjust = 1, colour = PC1_contrib$highlight, size = 14, face = "bold"),

axis.text.y = element_text(size = 14, face = "bold"),

axis.title = element_text(size = 14, face = "bold")) #modify the x axis variable names so they are rotated and highlighted

PC1_contrib_plot

# ggsave(plot = PC1_contrib_plot, filename = "Figures/PC1_contrib_plot.png", width = 8, height = 5, dpi = 300)PC2

PC2_contrib <- PC_loadings_contrib %>%

select(-Contribution.PC1, -Contribution.PC3) %>%

filter(PC == "Loading.PC2") %>%

arrange(Contribution.PC2) %>%

mutate(Loading_abs = abs(Loading),

cumsum_PC2 = round(cumsum(Contribution.PC2), 4),

highlight = ifelse(cumsum_PC2 < 50, "grey", "black"),

highlight1 = ifelse(cumsum_PC2 < 50, 0.5, 1),

direction1 = direction,

direction_lab = ifelse(direction1=="red","–", "+"))

PC2_contrib_plot <- ggplot(PC2_contrib, aes(x=reorder(Variable, Contribution.PC2), y=Contribution.PC2)) + #have the variable name on the x axis and loading value on the y

geom_text(aes(alpha = highlight, label=ifelse(PC2_contrib$direction=="red","–", "+")), colour = PC2_contrib$direction, size = 6, fontface="bold", show.legend = FALSE) + #add the dots to the plot, with the colour

xlab("") + #remove the x axis title

ylab("PC2 contribution (%)") +

geom_vline(xintercept = 14.5, color = "red", linetype = "dashed") + #add a red dashed line to identify the 0.2 cut off

scale_alpha_manual(values=c(1, 0.3)) +

theme_bw() + #set the aesthetics of the plot

theme(axis.text.x = element_text(angle = 90, hjust = 1, colour = PC2_contrib$highlight, size = 14, face = "bold"),

axis.text.y = element_text(size = 14, face = "bold"),

axis.title = element_text(size = 14, face = "bold")) #modify the x axis variable names so they are rotated and highlighted

PC2_contrib_plot

# ggsave(plot = PC2_contrib_plot, filename = "Figures/PC2_contrib_plot.png", width = 8, height = 5, dpi = 300)PC3

PC3_contrib <- PC_loadings_contrib %>%

select(-Contribution.PC1, -Contribution.PC2) %>%

filter(PC == "Loading.PC3") %>%

arrange(Contribution.PC3) %>%

mutate(Loading_abs = abs(Loading),

cumsum_PC3 = round(cumsum(Contribution.PC3), 4),

highlight = ifelse(cumsum_PC3 < 50, "grey", "black"),

highlight1 = ifelse(cumsum_PC3 < 50, 0.5, 1),

direction1 = direction,

direction_lab = ifelse(direction1=="red","–", "+"))

PC3_contrib_plot <- ggplot(PC3_contrib, aes(x=reorder(Variable, Contribution.PC3), y=Contribution.PC3)) + #have the variable name on the x axis and loading value on the y

geom_text(aes(alpha = highlight, label=ifelse(PC3_contrib$direction=="red","–", "+")), colour = PC3_contrib$direction, size = 6, fontface="bold", show.legend = FALSE) +

xlab("") + #remove the x axis title

ylab("PC3 contribution (%)") +

geom_vline(xintercept = 16.5, color = "red", linetype = "dashed") + #add a red dashed line to identify the 0.2 cut off

scale_alpha_manual(values=c(1, 0.3)) +

theme_bw() + #set the aesthetics of the plot

theme(axis.text.x = element_text(angle = 90, hjust = 1, colour = PC3_contrib$highlight, size = 14, face = "bold"),

axis.text.y = element_text(size = 14, face = "bold"),

axis.title = element_text(size = 14, face = "bold")) #modify the x axis variable names so they are rotated and highlighted

PC3_contrib_plot

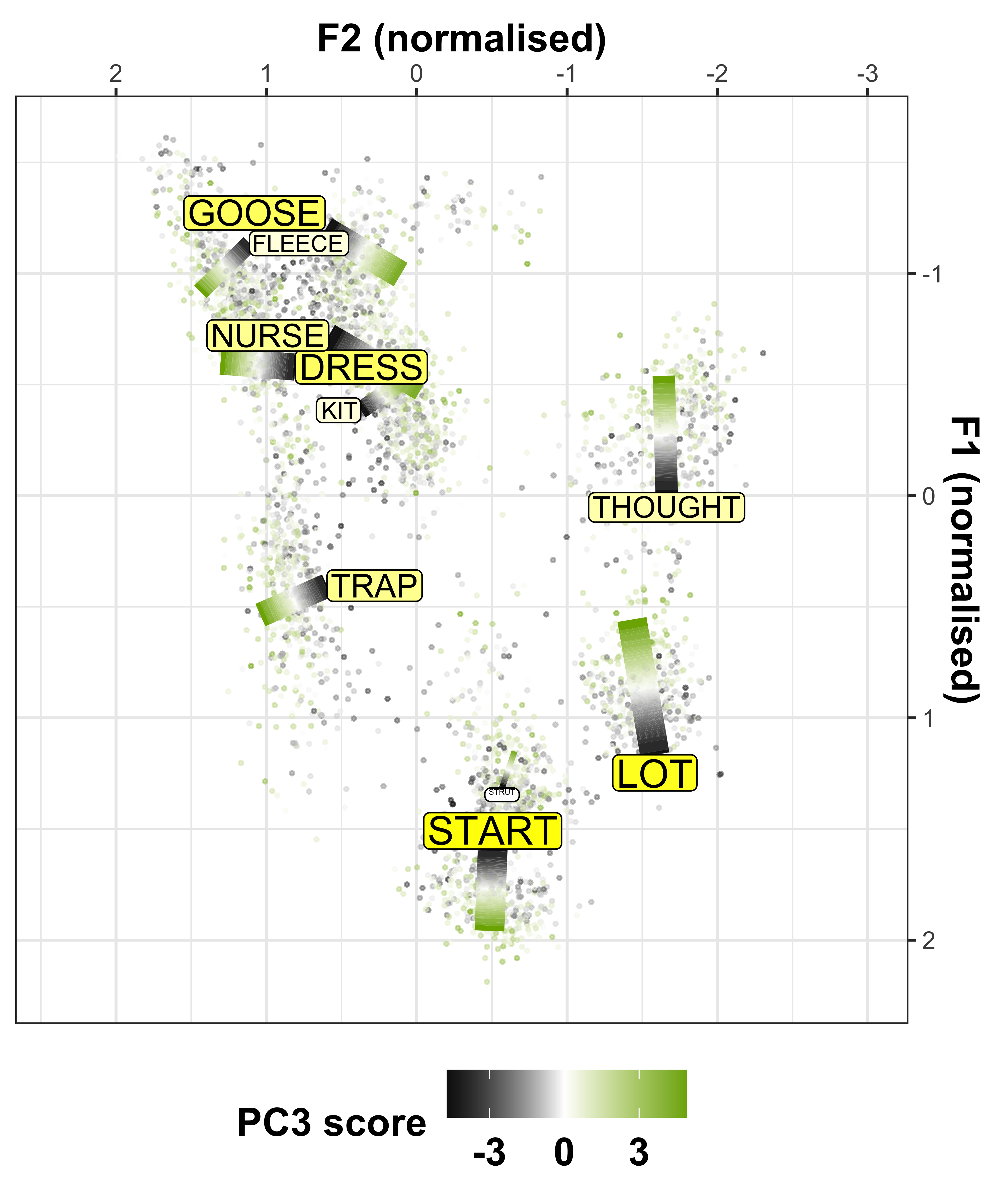

# ggsave(plot = PC3_contrib_plot, filename = "Figures/PC3_contrib_plot.png", width = 8, height = 5, dpi = 300)Visualisation in vowel space

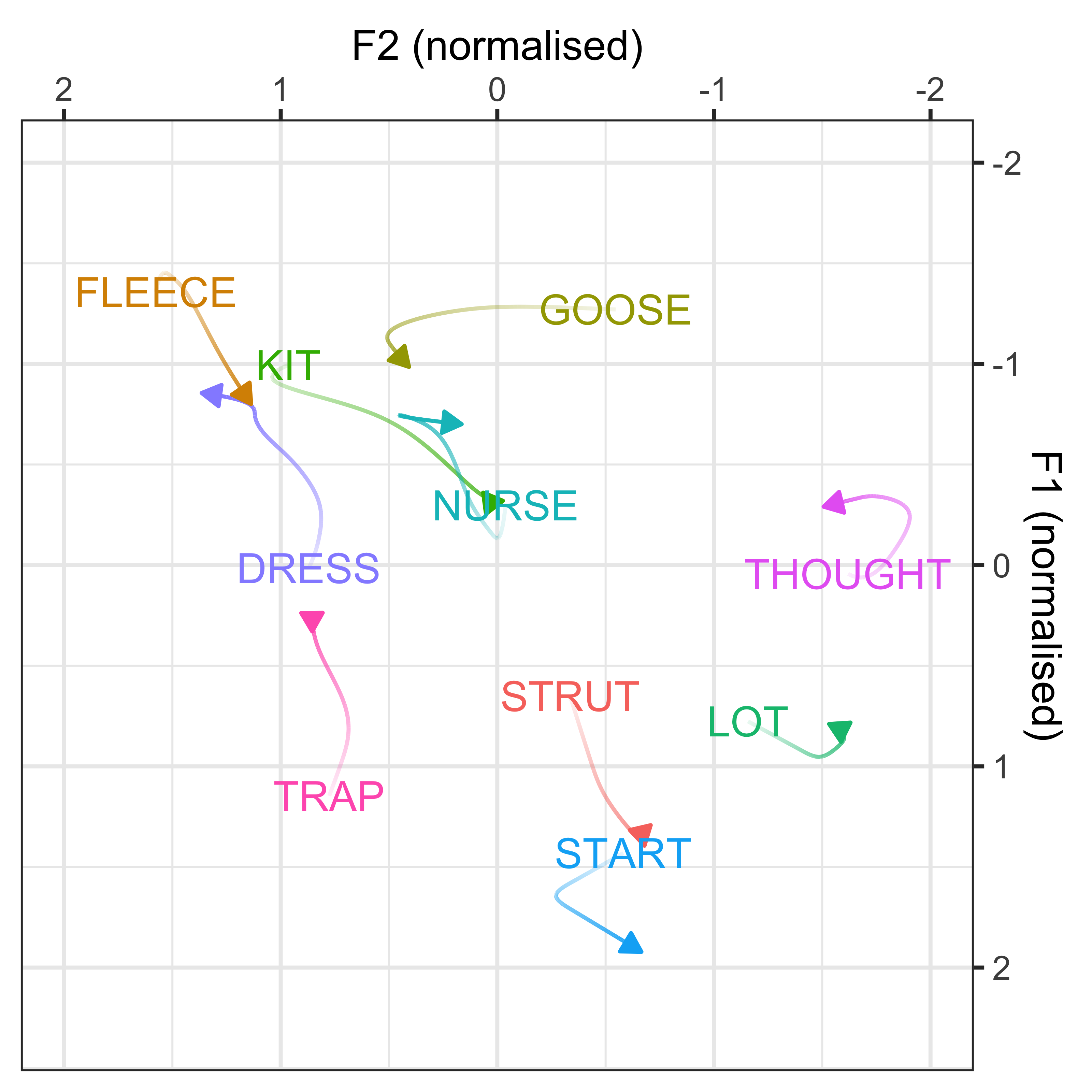

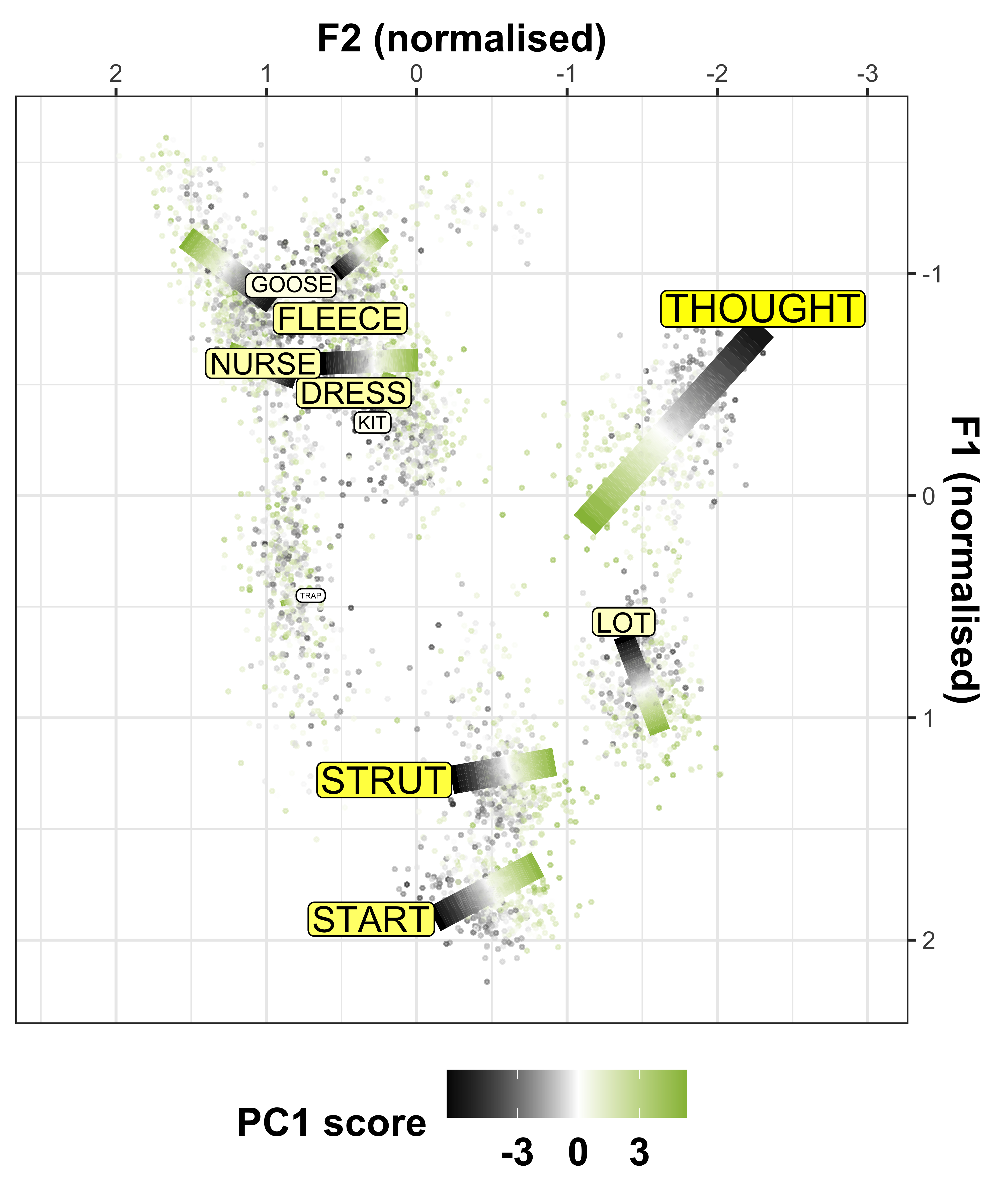

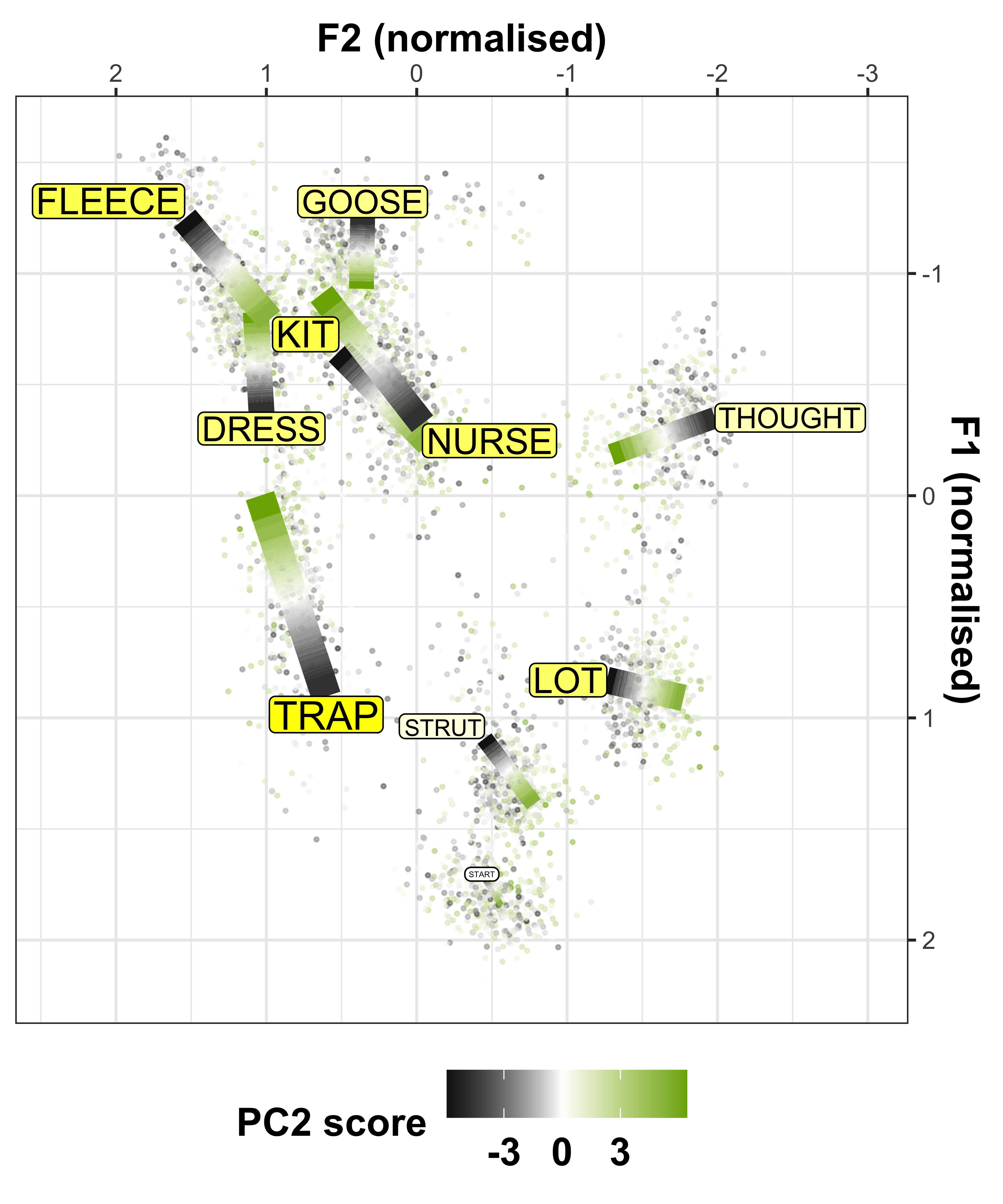

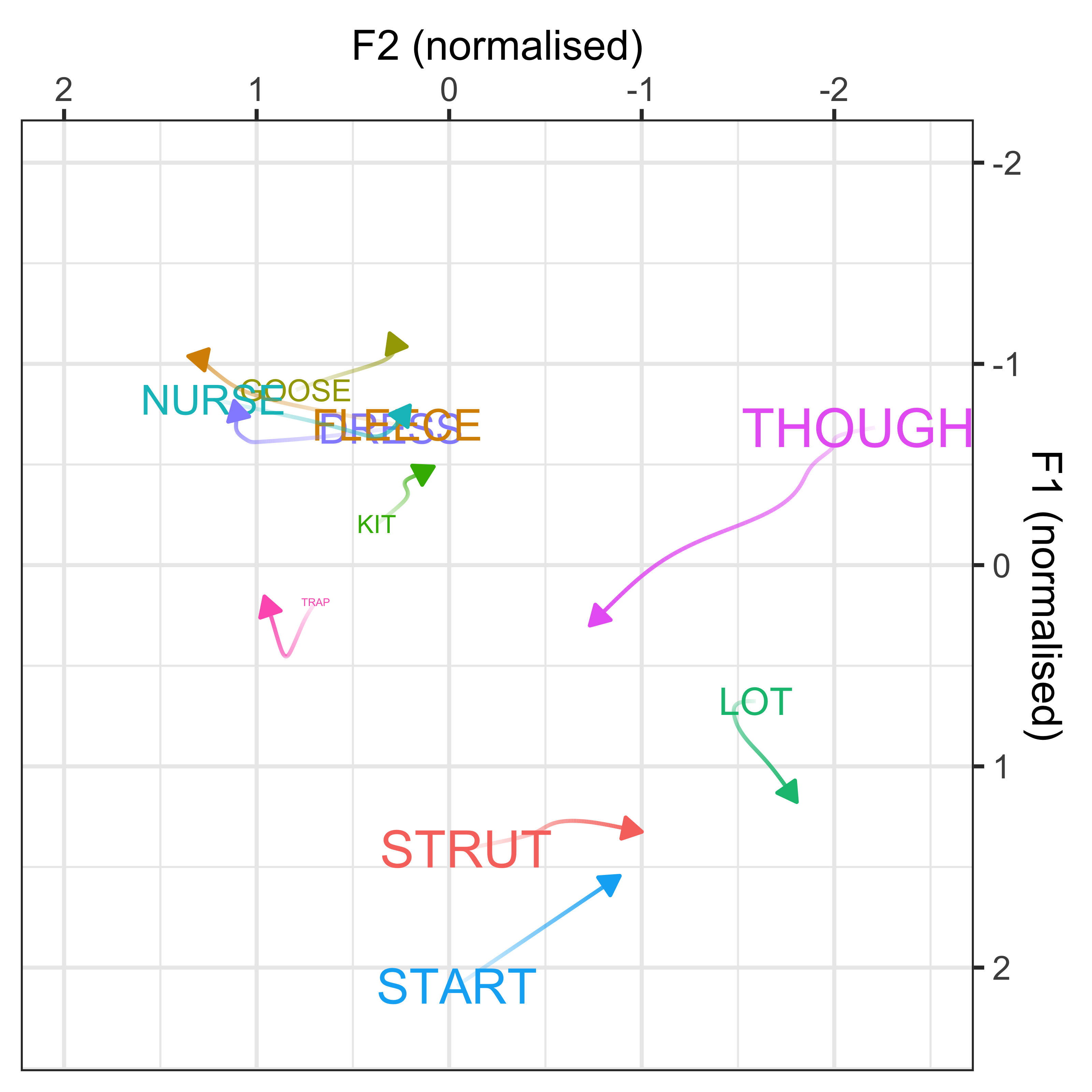

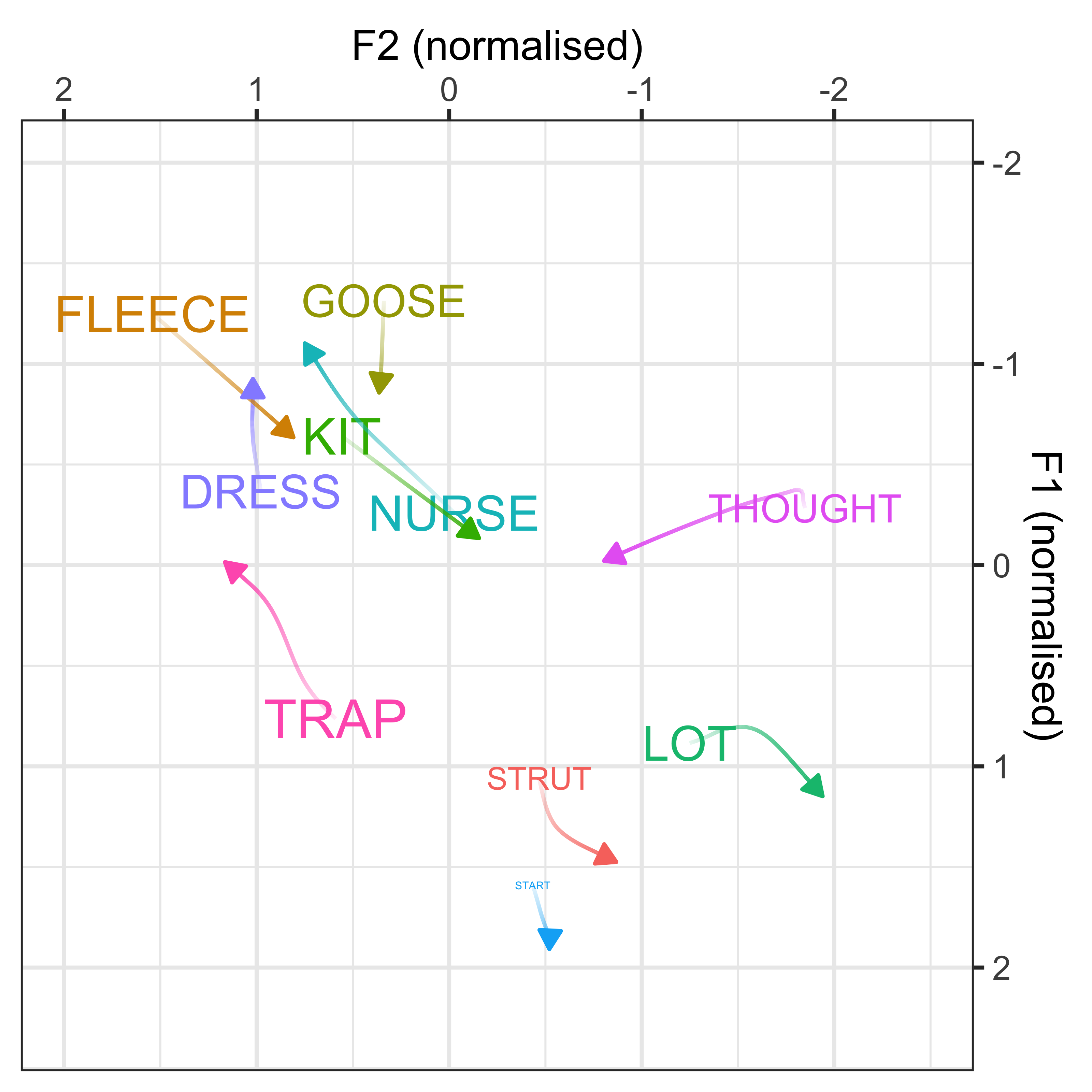

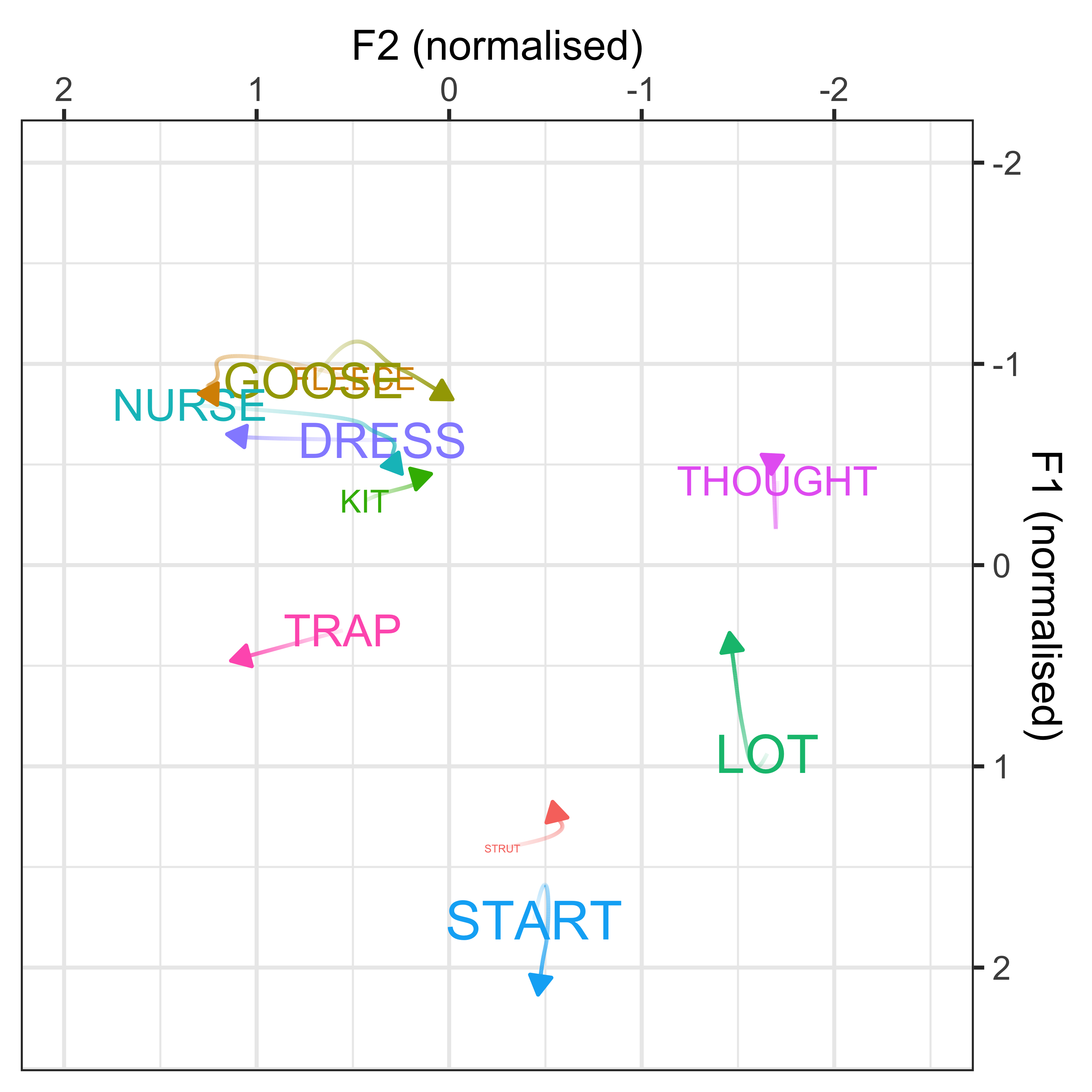

In order to understand how these variables are co-varying together, we can re-interpret the dot plots in terms of F1/F2 vowel space. This will allow us to explore our theoretically motivated interpretation that these PCs represent changes in F1/F2 over time, thus demonstrating that the PCs may be representing ‘leaders’ and ‘laggers’ of sound change.

We will plot how the vowel space in New Zealand English has changed over the course of the dataset (defined by year of birth), based on the GAMM modelling performed in earlier models.

We then visualise how the vowel spaces of the speakers in PC1, PC2 and PC3 also change (defined by the PCA scores), this interpretation will be driven by the weighting of the variable loadings on each PC (as shown in the dot plots). This will done in 3 different ways:

GAM smooths

Vowel plots

Animations

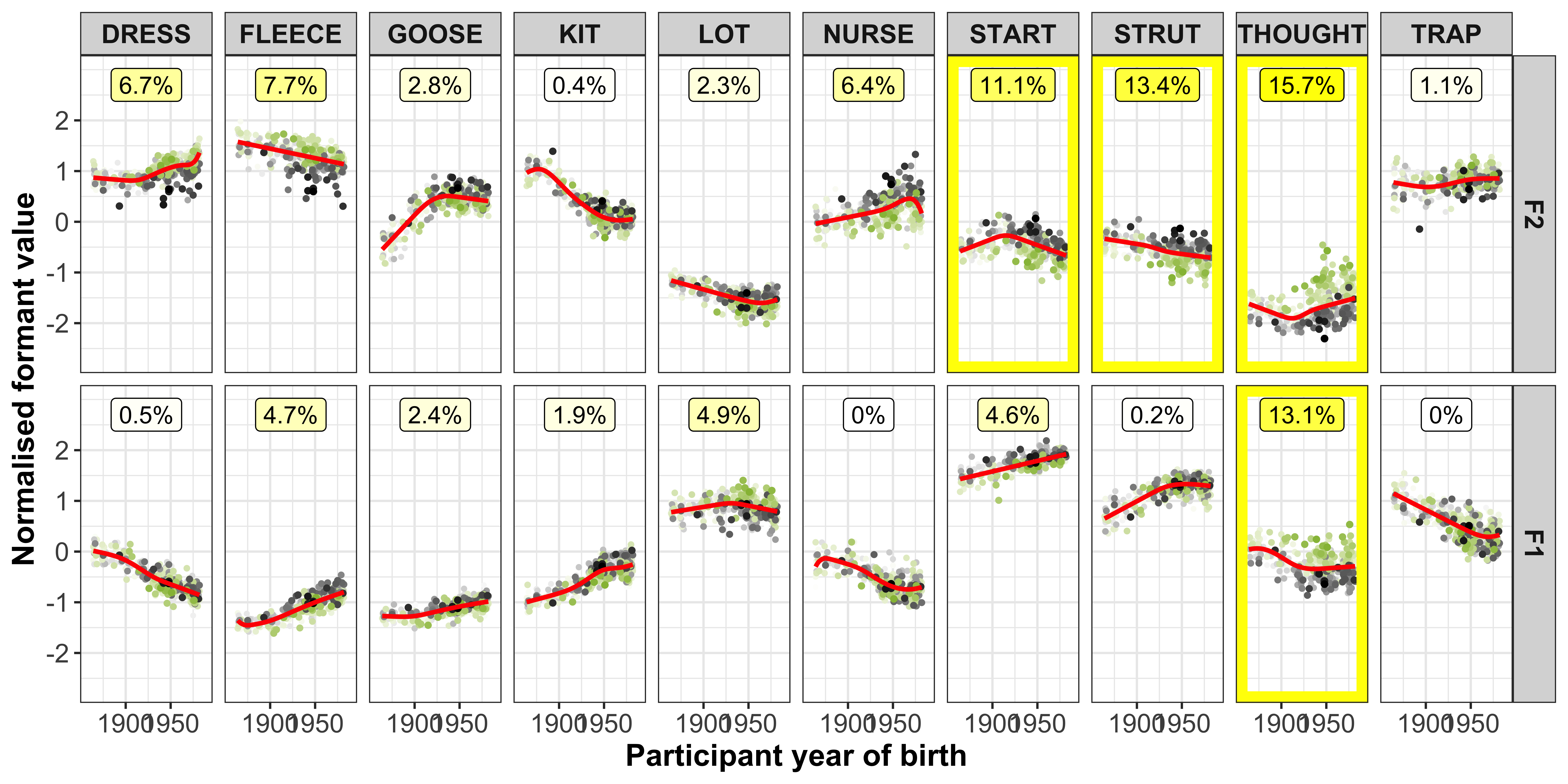

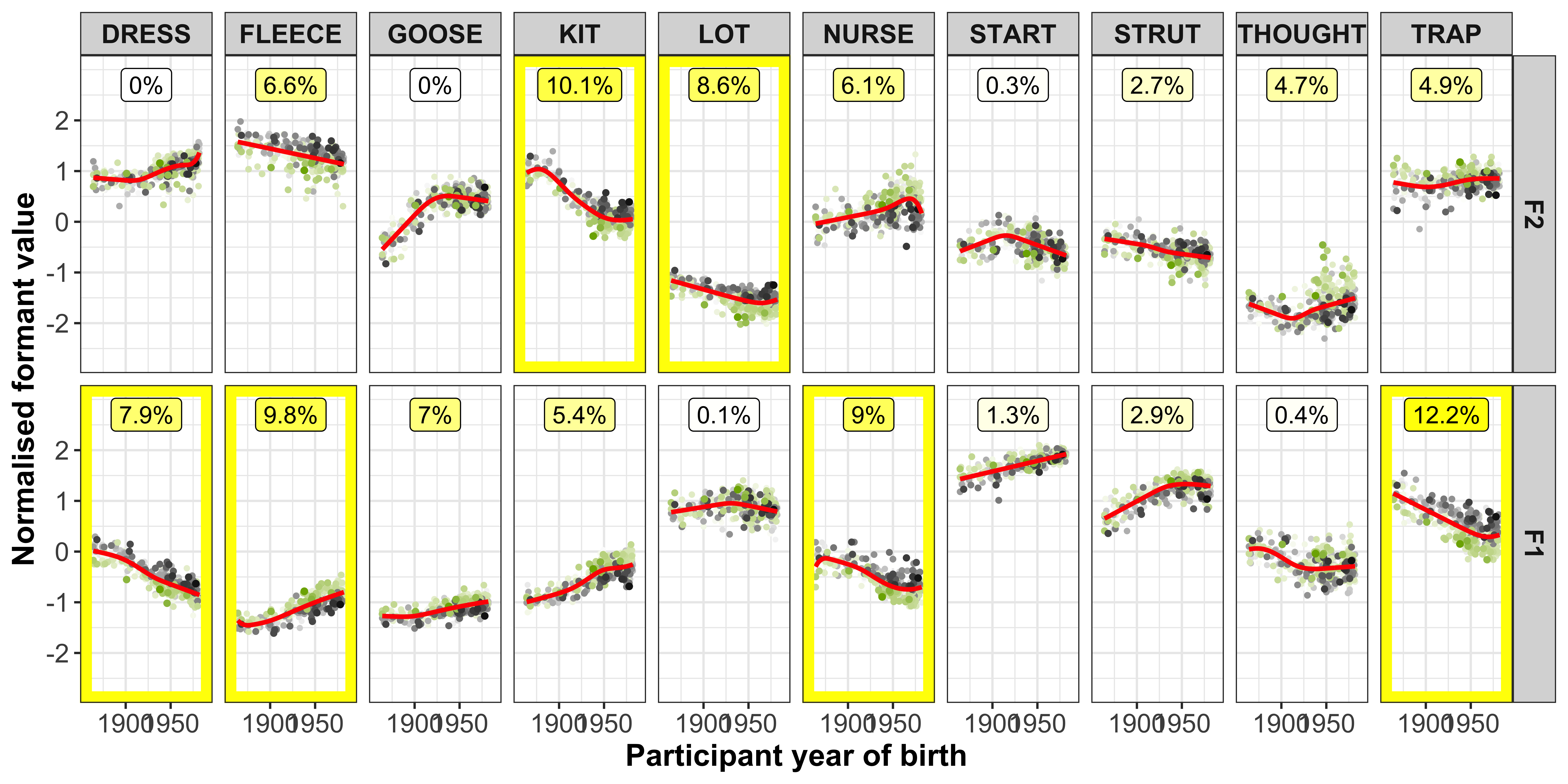

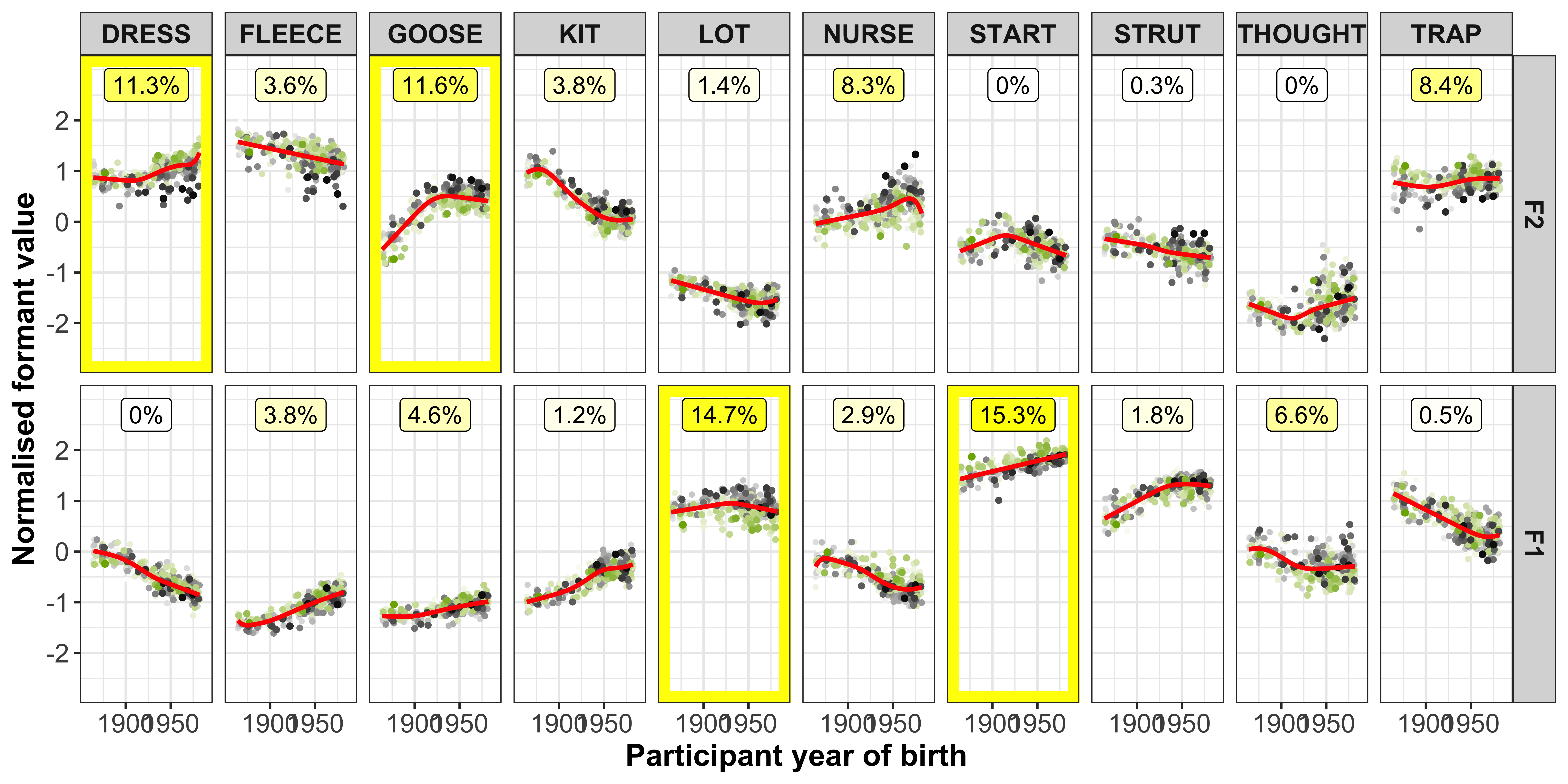

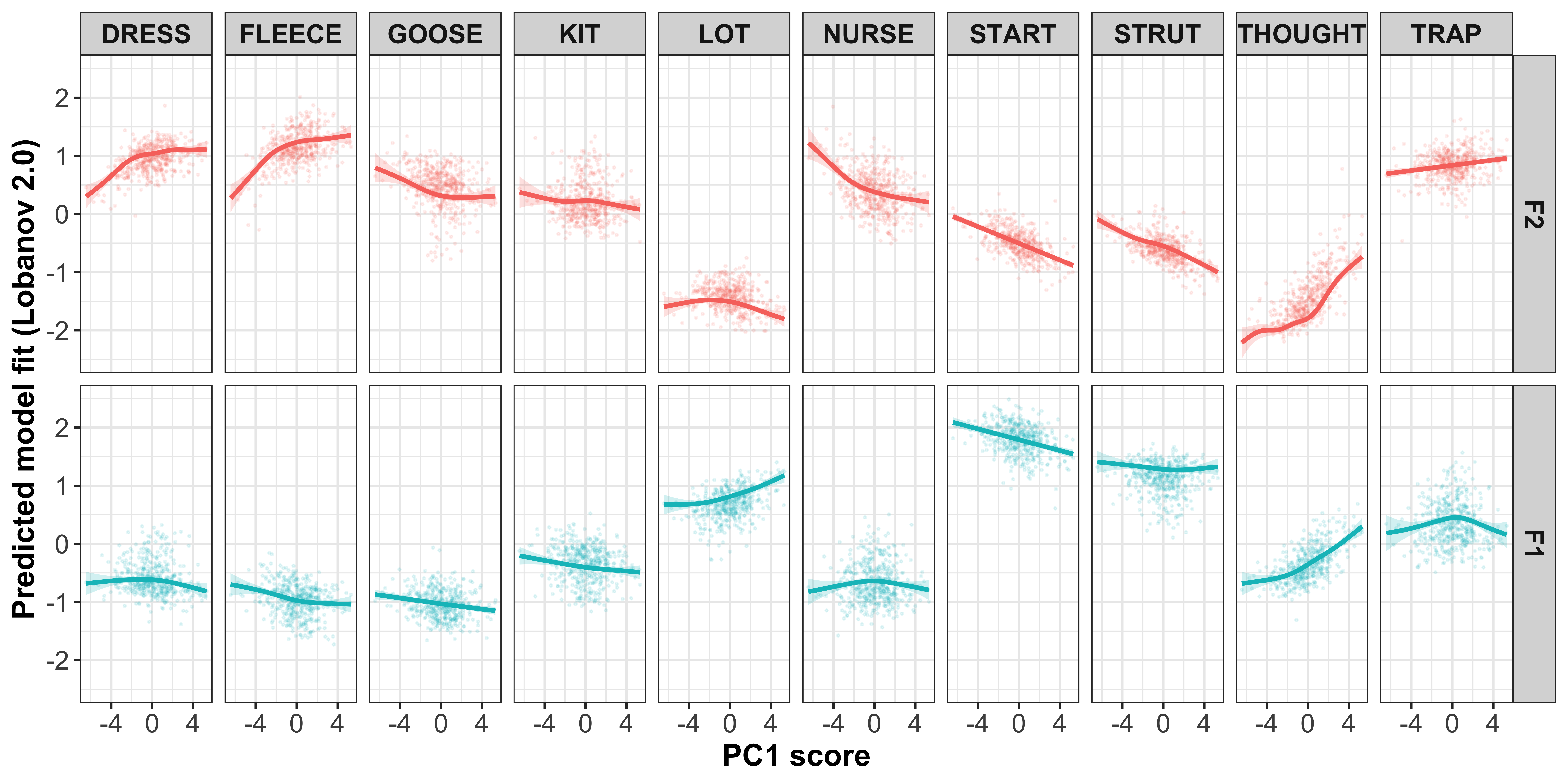

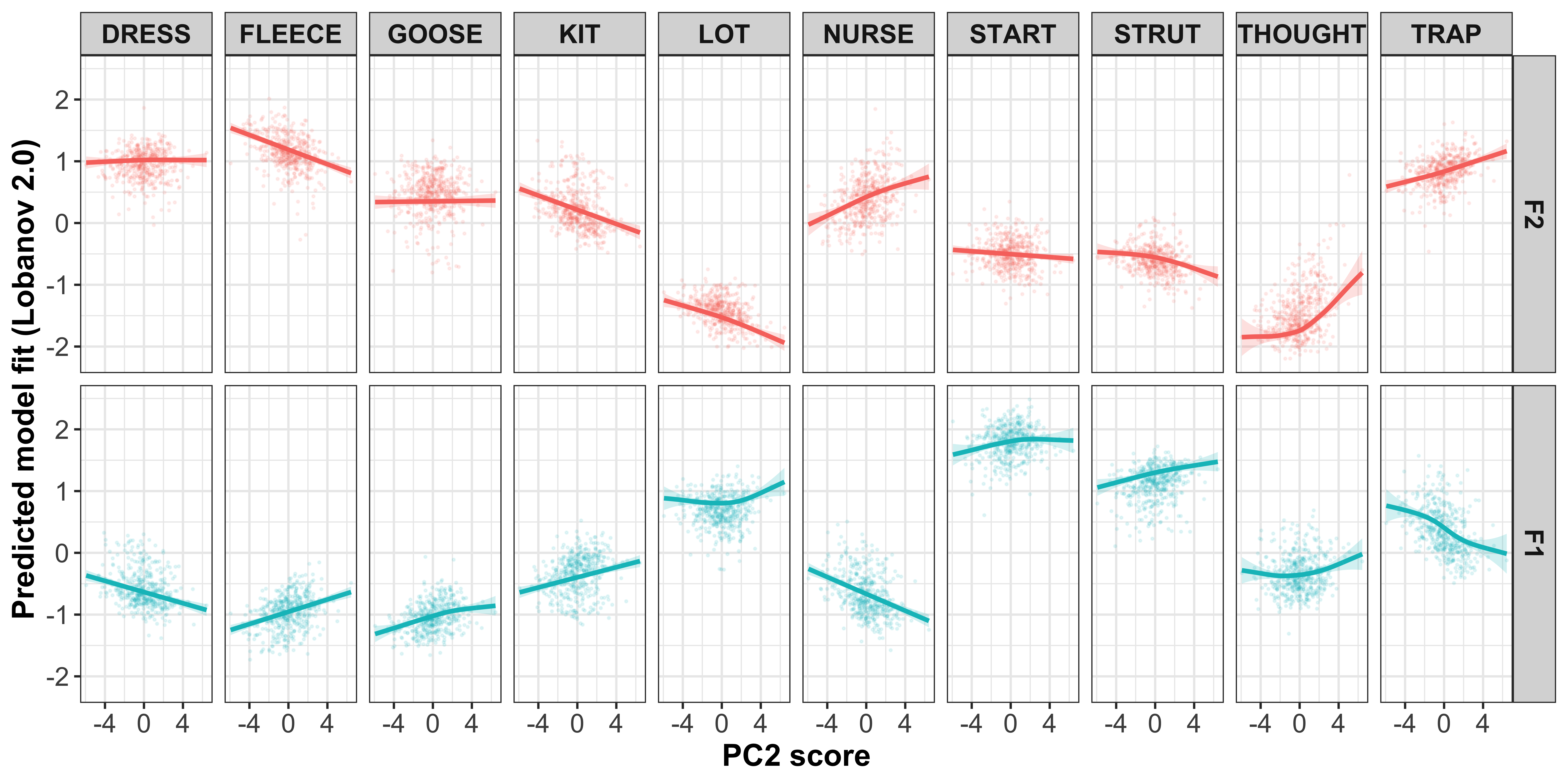

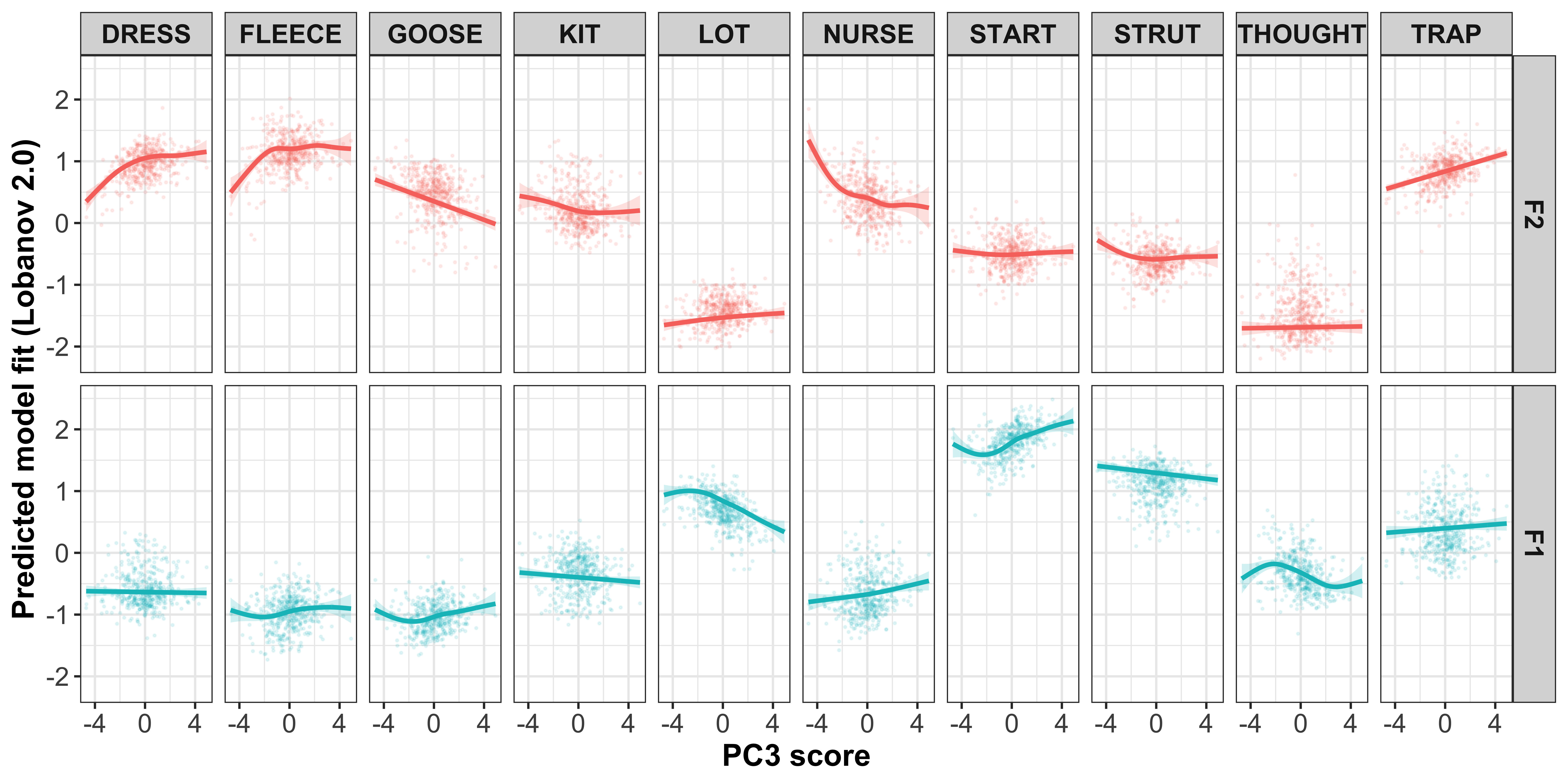

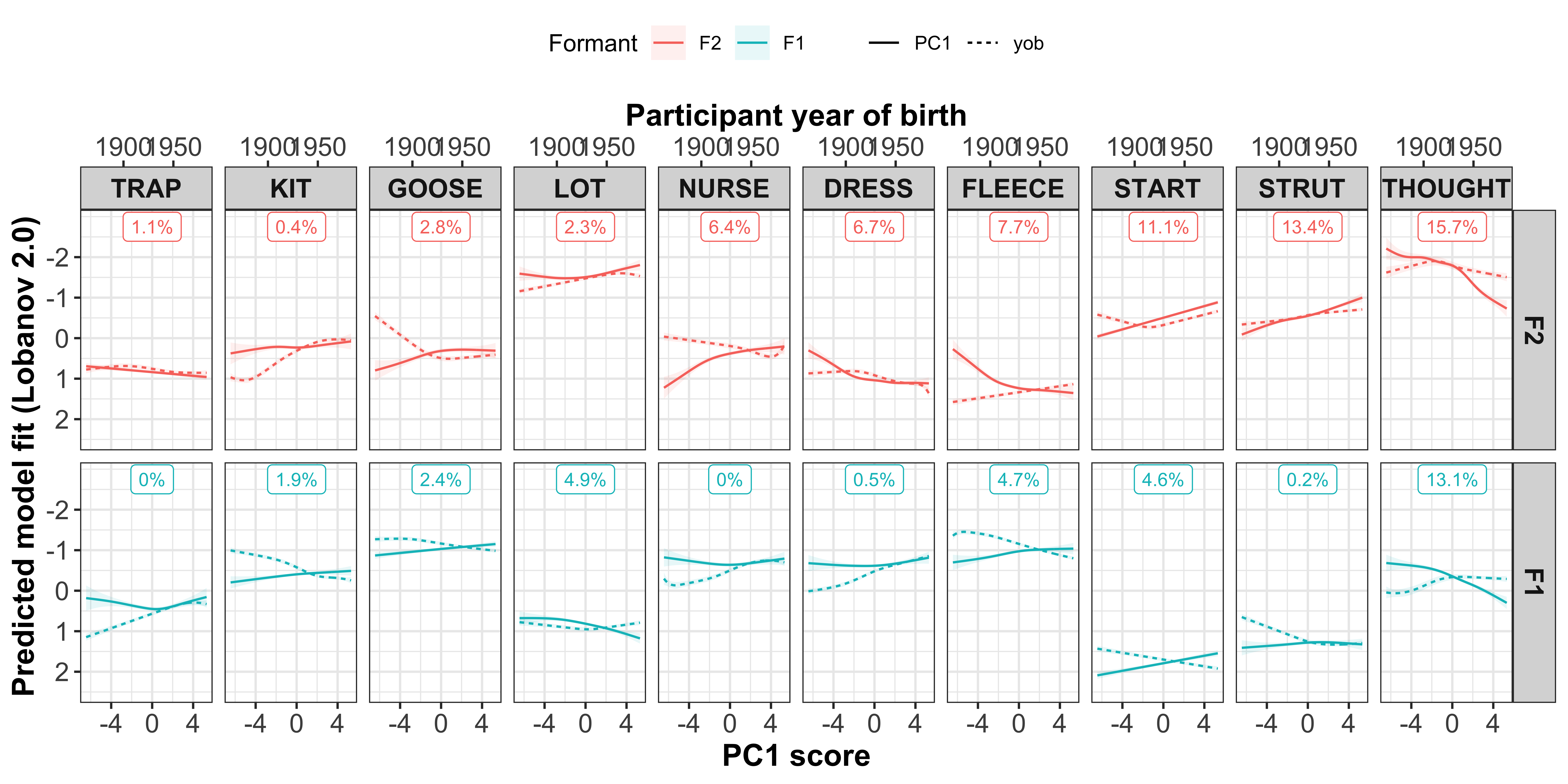

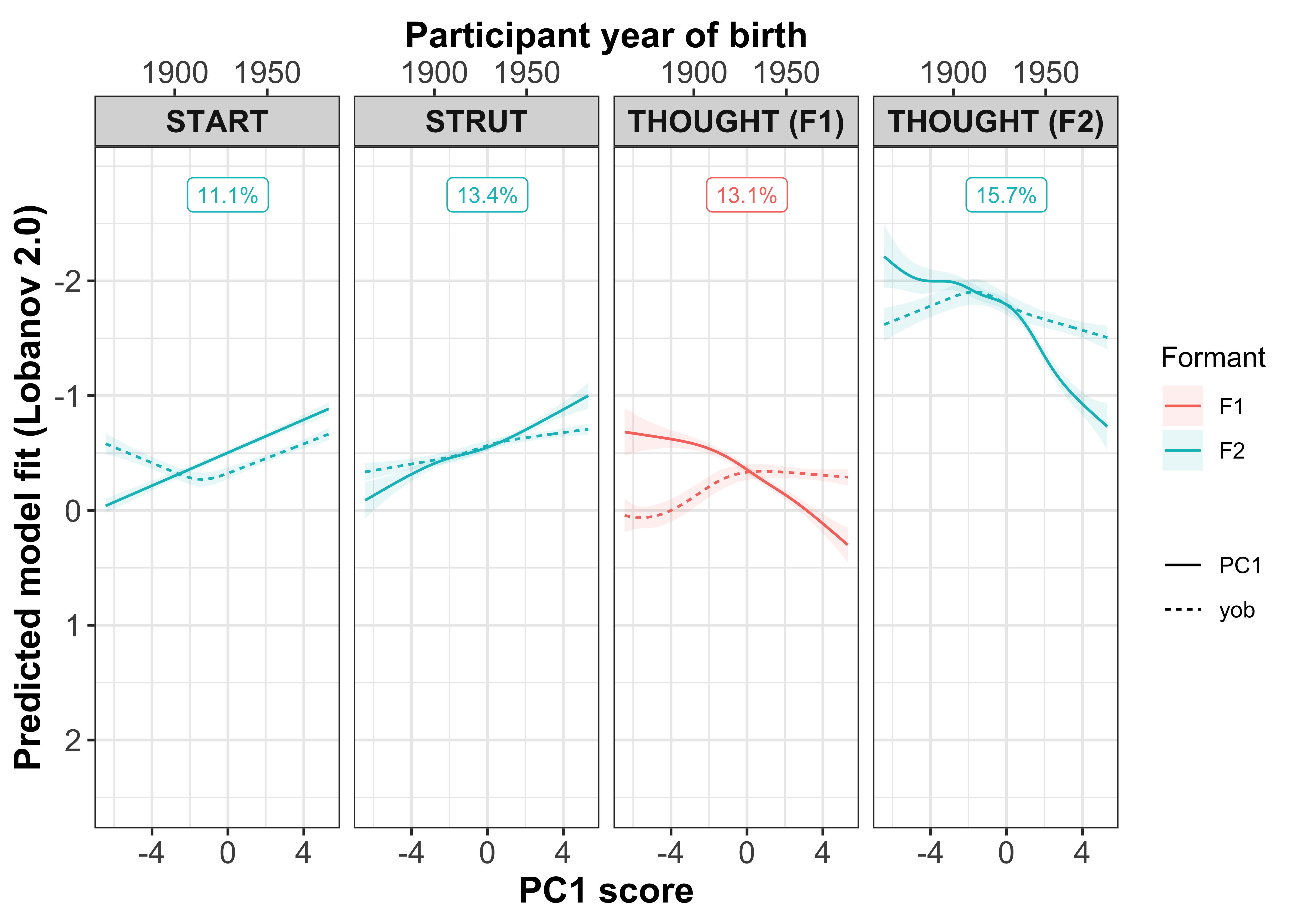

In order to generate these plots, we have to run more GAMMs predicting either F1 or F2 (normalised), by the PCA scores, this will provide us with predicted model values, that show the trajectories based on the PCA scores (i.e. going from negative to positive values).

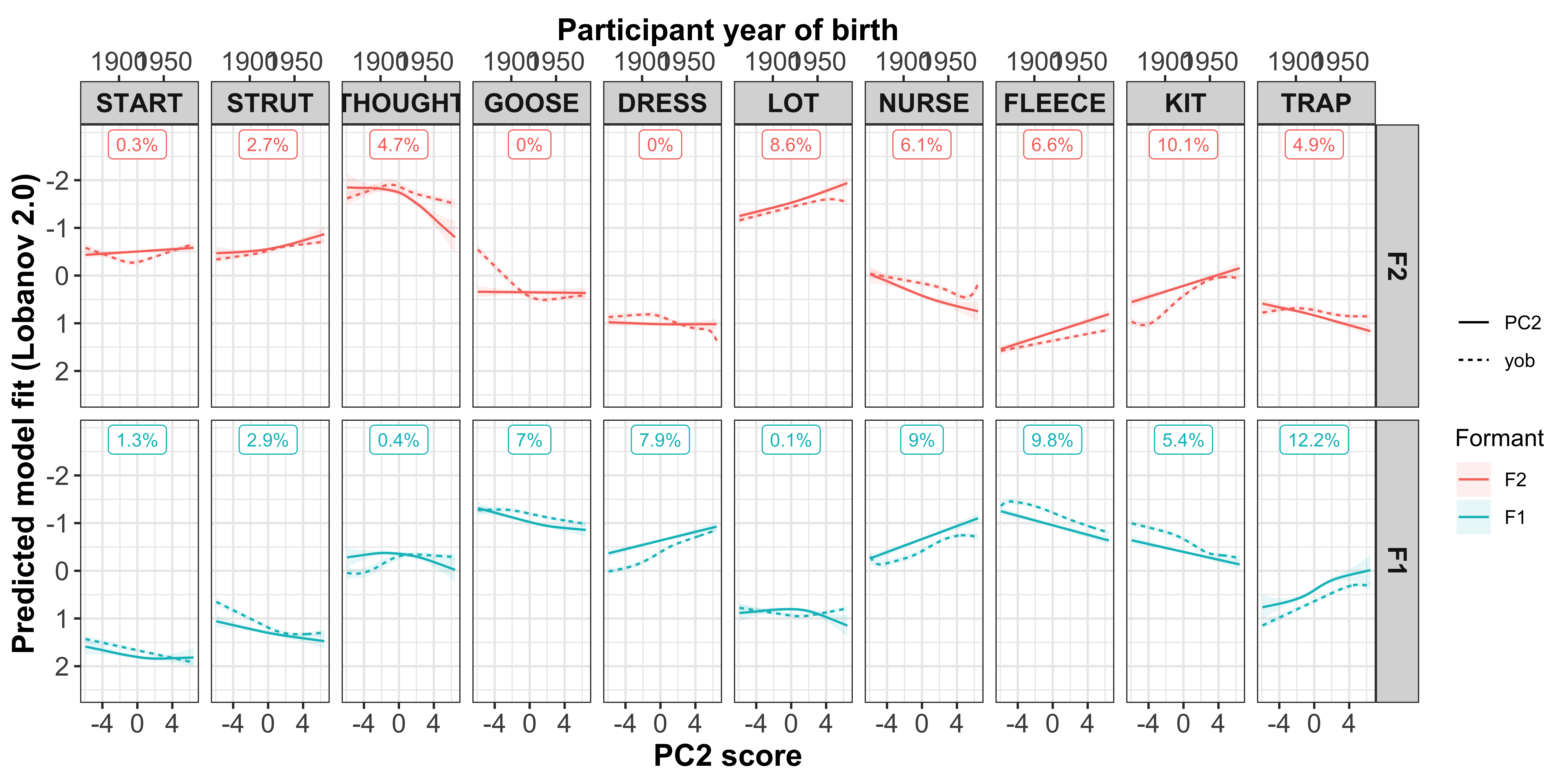

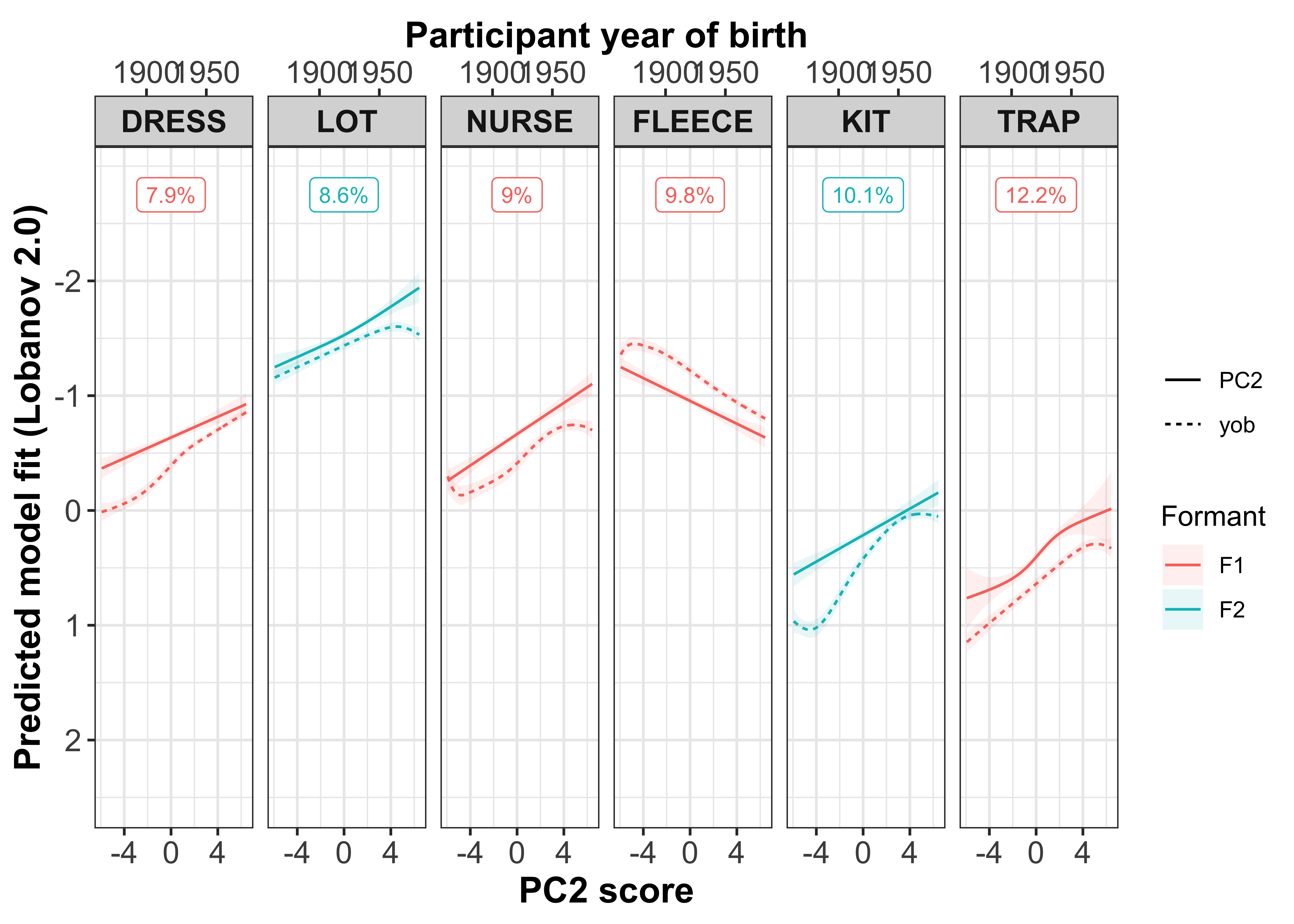

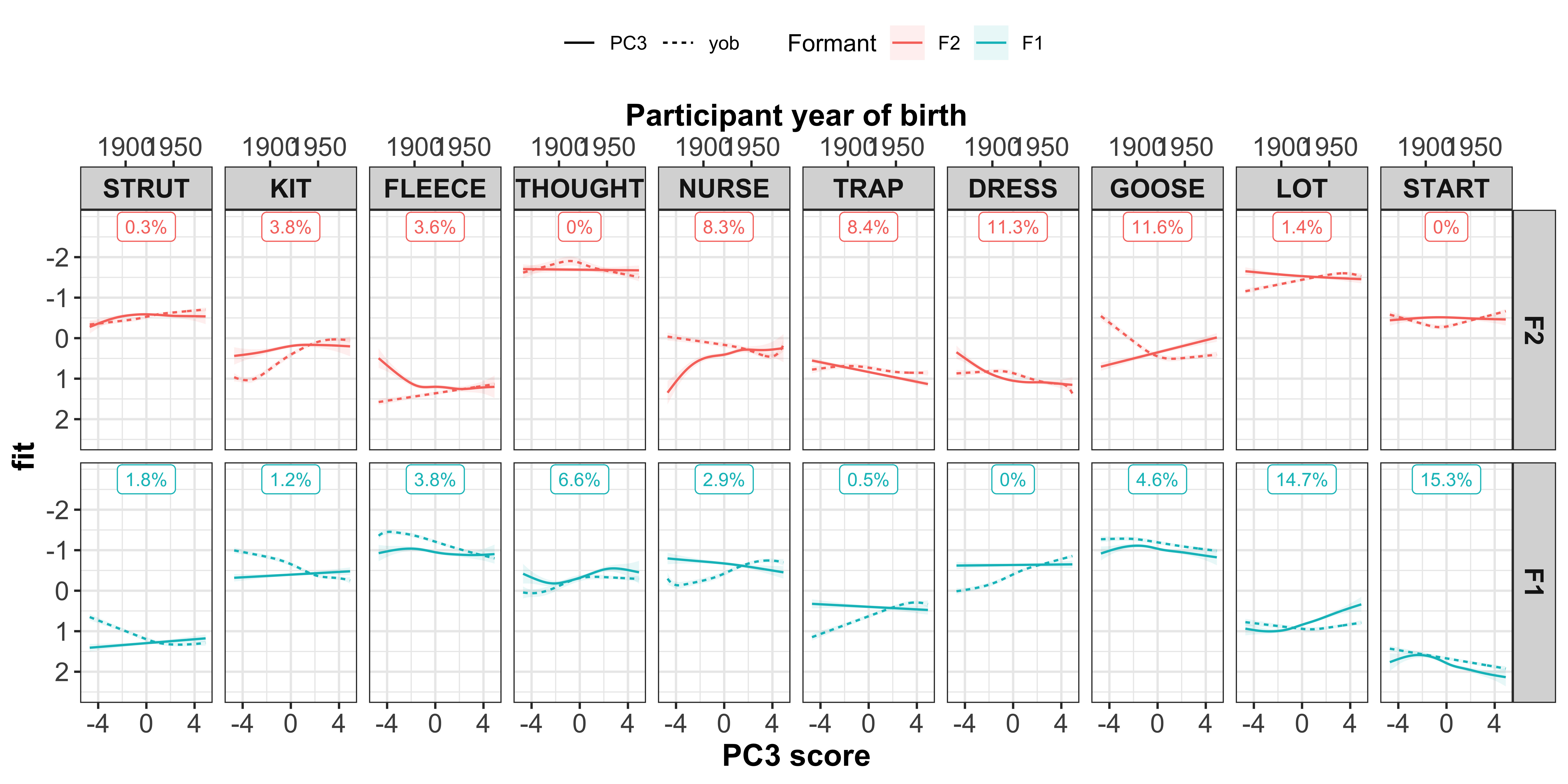

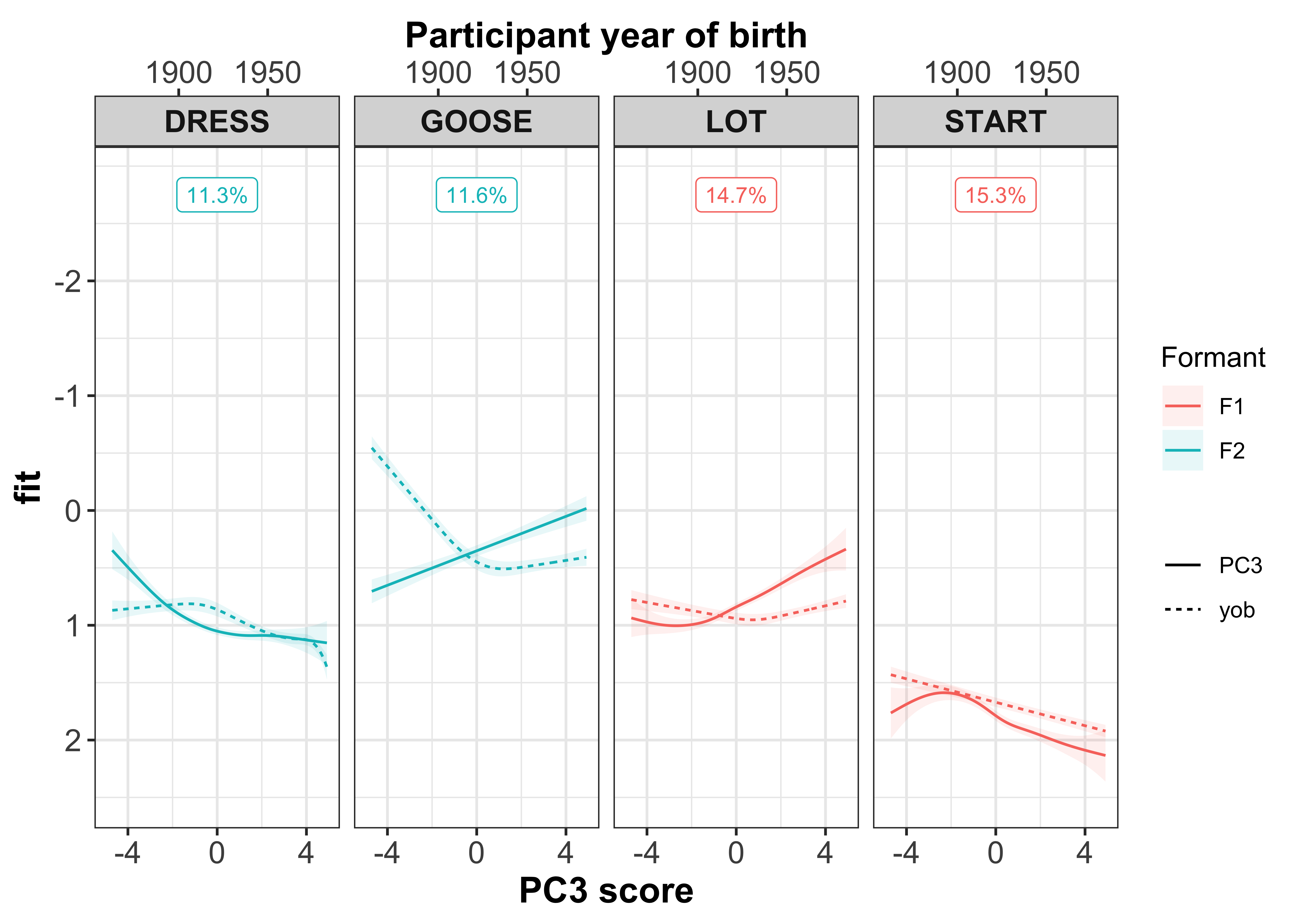

Note, the direction of the PCA scores (for PC1, PC2 and PC3) have been reversed in the figures, i.e. negative scores are switched to positive and vice versa. This is purely for visualisation purposes as it helps the interpretation in terms of change over time. This does not affect any underlying importance of the actual scores, the direction (+/-) is arbitrary in PCA and remains the same as long as all values are switched, which they have been.

Sound change

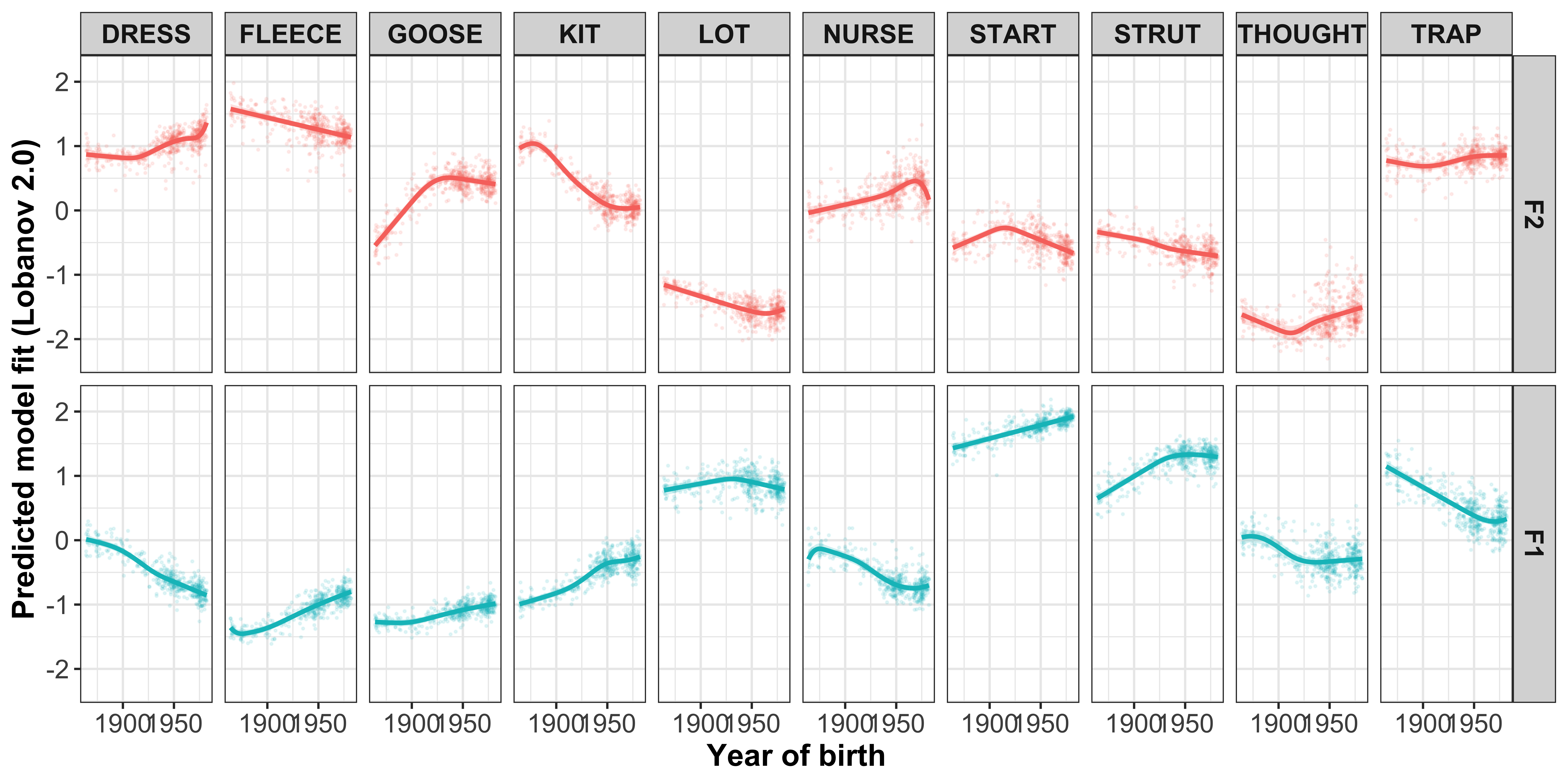

Visualisation by GAM smooth

Plotting change in normalised (Lobanov 2.0) F1 (red lines) and F2 (blue lines)

x axis = year of birth

y axis = normalised F1/F2

smoothed lines = GAM fit

year of birth

Load in the models and get the predicted values.

These steps are pre-run (they are big objects in R), but the resulting data can be found in the Data > Models folder, saved as mod_pred_yob_values.rds and mod_pred_yobgender_values.rds

mod_pred_yob_values <- readRDS("Data/Models/mod_pred_yob_values.rds")#make a long version of the intercepts

gam_intercepts.tmp_long <- gam_intercepts.tmp %>%

pivot_longer(F1_DRESS:F2_TRAP, names_to = "Vowel_formant", values_to = "Intercept") %>%

mutate(Formant = substr(Vowel_formant, 1, 2),

Vowel = substr(Vowel_formant, 4, max(nchar(Vowel_formant)))) %>%

left_join(vowels_all %>%

select(Speaker, participant_year_of_birth, Gender) %>% distinct()) %>%

left_join(mod_pred_yob_values %>% select(participant_year_of_birth, Vowel, Formant, fit, ll, ul))

#plot the gam smooths predicting F1/F2 per vowel and add the speaker intercepts values

sound_change_plot_smooth <- mod_pred_yob_values %>%

mutate(Formant = factor(Formant, levels = c("F2", "F1"))) %>%

ggplot(aes(x = participant_year_of_birth, y = fit, colour = Formant, fill = Formant)) +

geom_point(data = gam_intercepts.tmp_long %>% mutate(Formant = factor(Formant, levels = c("F2", "F1"))), aes(x = participant_year_of_birth, y = fit + Intercept), size = 0.25, alpha = 0.1) +

geom_line(size = 1) +

geom_ribbon(aes(ymin = ll, ymax = ul), alpha = 0.2, colour = NA) +

scale_x_continuous(breaks = c(1900, 1950)) +

xlab("Year of birth") +

ylab("Predicted model fit (Lobanov 2.0)") +

facet_grid(Formant~Vowel) +

theme_bw() +

theme(legend.position = "none", axis.text = element_text(size = 12), axis.title = element_text(size = 14, face = "bold"), strip.text = element_text(size = 12, face = "bold"))

sound_change_plot_smooth

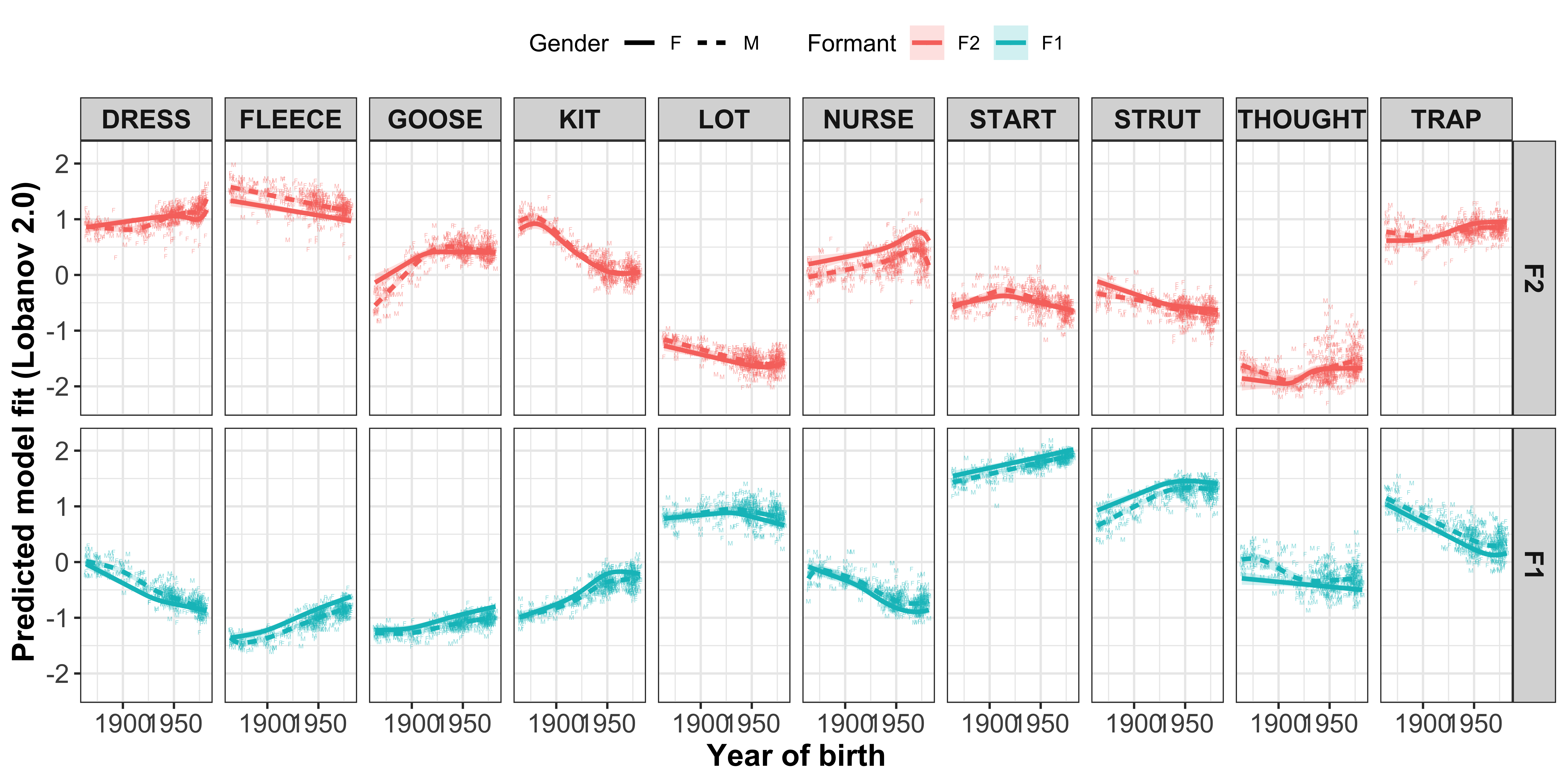

ggsave(plot = sound_change_plot_smooth, filename = "Figures/sound_change_gam.png", width = 12, height = 5, dpi = 600)year of birth and gender

mod_pred_yobgender_values <- readRDS("Data/Models/mod_pred_yobgender_values.rds")#plot the gam smooths predicting F1/F2 per vowel and add the per speaker mean values with F or M as the text

sound_change_plot_smooth_gender <- mod_pred_yobgender_values %>%

mutate(Formant = factor(Formant, levels = c("F2", "F1"))) %>%

ggplot(aes(x = participant_year_of_birth, y = fit, colour = Formant, linetype = Gender, label = Gender, fill = Formant)) +

geom_text(data = gam_intercepts.tmp_long %>%

mutate(Formant = factor(Formant, levels = c("F2", "F1"))), aes(x = participant_year_of_birth, y = fit + Intercept), size = 1, alpha = 0.5) +

geom_line(size = 1) +

geom_ribbon(aes(ymin = ll, ymax = ul), alpha = 0.2, colour = NA) +

scale_x_continuous(breaks = c(1900, 1950)) +

xlab("Year of birth") +

ylab("Predicted model fit (Lobanov 2.0)") +

facet_grid(Formant~Vowel) +

theme_bw() +

theme(legend.position = "top", axis.text = element_text(size = 12), axis.title = element_text(size = 14, face = "bold"), strip.text = element_text(size = 12, face = "bold")) +

guides(linetype=guide_legend(override.aes=list(fill=NA)))

sound_change_plot_smooth_gender

ggsave(plot = sound_change_plot_smooth_gender, filename = "Figures/sound_change_gam_gender.png", width = 10, height = 5, dpi = 600)Visualisation in F1/F2 space

We can convert the data from the above plot in (Lobanov 2.0 normalised) F1/F2 space, to see the changes in a more conventional vowel space plot

x axis = F2

y axis = F1

colours = lexical set of vowel

lines = trajectory of the F1/F2 in terms of year of birth (1857 - 1988)

vowel labels = start point of the vowel trajectory (oldest year of birth: 1857)

arrows = end point of the trajectory (youngest year of birth: 1988)

#transform data so there are separate columns for F1 and F2

sound_change_plot_data <- mod_pred_yob_values %>%

select(participant_year_of_birth, fit, Vowel, Formant) %>%

pivot_wider(names_from = Formant, values_from = fit) #transform the data to wide format so there are separate F1 and F2 variables

#make data frame to plot starting point, this will give the vowel labels based on the smallest year of birth coordinates

sound_change_labels1 <- sound_change_plot_data %>%

group_by(Vowel) %>%

filter(participant_year_of_birth == min(participant_year_of_birth))

#make data frame to plot end point, this will give the arrow at the end of the paths based on the largest year of birth coordinates

sound_change_labels2 <- sound_change_plot_data %>%

group_by(Vowel) %>%

top_n(wt = participant_year_of_birth, n = 2)

#plot

sound_change_plot <- sound_change_plot_data %>%

#set general aesthetics

ggplot(aes(x = F2, y = F1, colour = Vowel, alpha = participant_year_of_birth, group = Vowel)) +

#add year of birth change trajectories

geom_path(size = 0.5, show.legend = FALSE) +

#add end points (this gives the arrows)

geom_path(data = sound_change_labels2, aes(x = F2, y = F1, colour = Vowel, group = Vowel),

arrow = arrow(ends = "last", type = "closed", length = unit(0.2, "cm")),

inherit.aes = FALSE, show.legend = FALSE) +

#plot the vowel labels

geom_text(data = sound_change_labels1, aes(x = F2, y = F1, colour = Vowel, group = Vowel, label = Vowel), inherit.aes = FALSE, show.legend = FALSE) +

#label the axes

xlab("F2 (normalised)") +

ylab("F1 (normalised)") +

#scale the size so the path is not too wide

scale_size_continuous(range = c(0.2,1)) +

#reverse the axes to follow conventional vowel plotting

scale_x_reverse(limits = c(2,-2), position = "top") +

scale_y_reverse(limits = c(2.3,-2), position = "right") +

#set the colours

scale_color_manual(values = c("#9590FF", "#D89000", "#A3A500", "#39B600", "#00BF7D",

"#00BFC4", "#00B0F6", "#F8766D", "#E76BF3", "#FF62BC")) +

#add a title

# labs(title = "A) Change over time\n ") +

#set the theme

theme_bw() +

#make title bold

theme(plot.title = element_text(face="bold"))

sound_change_plot

ggsave(plot = sound_change_plot, filename = "Figures/sound_change_static.png", width = 5.5, height = 5.5, dpi = 300)Visualisation by animation

We can also recreate the above plot in 3 dimensional space, where the trajectories are animated.

x axis = F2

y axis = F1

colours = lexical set of vowel

movement = trajectory of the F1/F2 in terms of year of birth (1857 - 1988)

trails = the previous positions of the vowels

sound_change_plot_animation <- sound_change_plot_data %>%

#set general aesthetics

ggplot(aes(x = F2, y = F1, colour = Vowel, group = Vowel, label = Vowel)) +

geom_text(aes(fontface = 2), size = 5, show.legend = FALSE) +

# geom_point() +

geom_path() +

#label the axes

xlab("F2 (normalised)") +

ylab("F1 (normalised)") +

#reverse the axes to follow conventional vowel plotting

scale_x_reverse(limits = c(2,-2), position = "top") +

scale_y_reverse(limits = c(2.3,-2), position = "right") +

#set the colours

scale_color_manual(values = c("#9590FF", "#D89000", "#A3A500", "#39B600", "#00BF7D",

"#00BFC4", "#00B0F6", "#F8766D", "#E76BF3", "#FF62BC")) +

#add a title

labs(caption = 'Year of birth: {round(frame_along, 0)}') +

#set the theme

theme_bw() +

#make text more visible

theme(axis.title = element_text(size = 14, face = "bold"),

axis.text.x = element_text(size = 14, face = "bold"),

axis.text.y = element_text(size = 14, face = "bold", angle = 270),

axis.ticks = element_blank(),

plot.caption = element_text(size = 30, hjust = 0),

legend.position = "none") +

#set the variable for the animation transition i.e. the time dimension

transition_reveal(participant_year_of_birth) +

#add in a trail to see the path

# shadow_trail(max_frames = 100, alpha = 0.1) +

ease_aes('linear')

sound_change_plot_animation <- animate(sound_change_plot_animation, nframes = 200, fps = 5, rewind = FALSE, start_pause = 10, end_pause = 10, duration = 20)

# animate(sound_change_plot_animation, nframes = 200, fps = 5, rewind = FALSE, start_pause = 10, end_pause = 10, duration = 20, height = 800, width =800)

anim_save(sound_change_plot_animation, filename = "Figures/sound_change_animation.gif")

sound_change_plot_animationPC scores

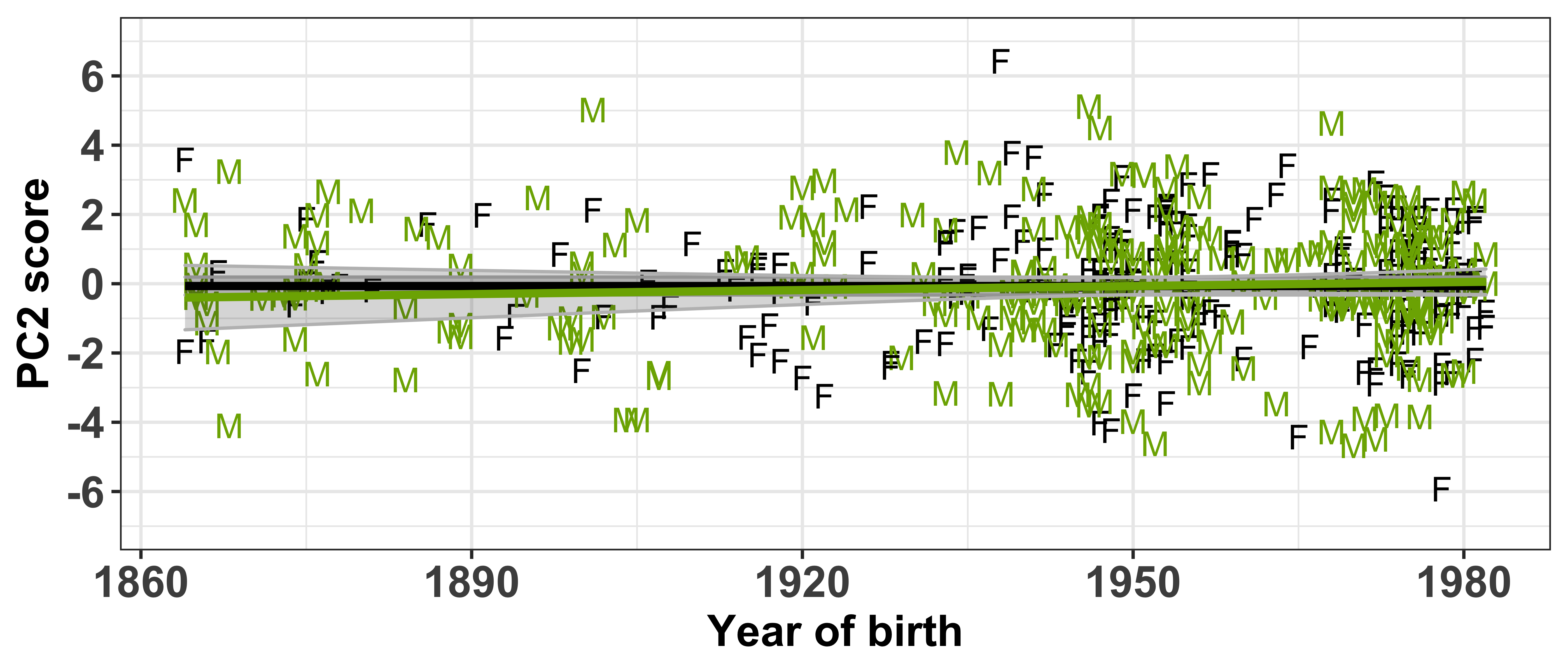

To understand how the PCs vary in terms of the co-variation between the vowel-formants, we want to inspect the PC scores. Within each of the PCs, there will be speakers who typify the co-variation seen in the dot plots. This is shown through the PC scores, with each speaker assigned a different score for each of the PCs. These scores can be interpreted in a similar way to the loadings - the larger your score (either +/-), the more that speaker contributes to the co-variation.

We can explore the PC scores to:

Assess the relationship between the scores and the intercepts

Visualise the vowel spaces of speakers with +/- scores

Understand how these speakers differ in terms of F1~F2 space for the vowel-formants contributing most to each PC

Inspect the relationship between the PC scores and directions of sound change

Test if the PC scores are independent of the socio-demographic variables included in the sound change GAMMs

Each speaker has a PC score within each of the PCs (which can be found in intercepts.pca$scores), thus we need to extract this information and combine it with the speaker’s social information (which can be found in vowels_all).

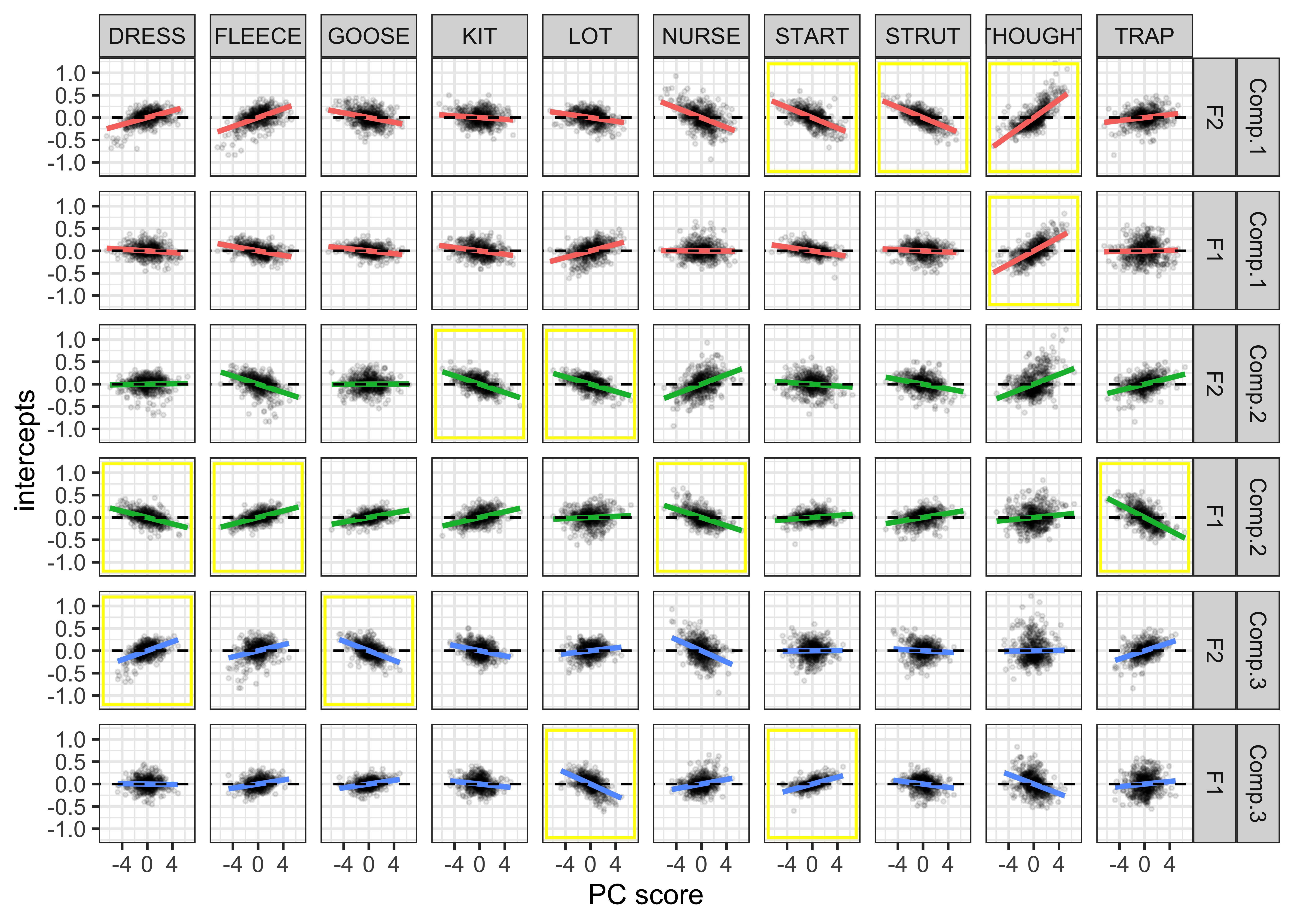

Relationship between intercepts and PC scores

First let’s visualise the relationship between the PC scores and the speaker intercepts. This will show us if speakers with +/- scores have +/- intercepts. Our prediction is that those with the largest PC scores will have large intercepts. When we are referring to ‘large’ here, we are talking about absolute values, where + and - values are important.

The vowel-formants that contribute most to a PC (highlighted by the yellow boxes in the plot below) are those we are most interested in and should show a clear slope in the regression fits.

#extract the PCA scores for the first 3 PCs from the PCA

PC_speaker_loadings <- as.data.frame(intercepts.pca$scores[,1:3]) %>% #the loadings are stored in the scores part of the intercepts.pca object

mutate(Speaker = gam_intercepts.tmp$Speaker) #create a Speaker variable with the speaker names

#get speaker's social information and combine it with the speaker intercepts and then the PC loadings

PC_speaker_loadings <- vowels_all %>%

dplyr::select(Speaker, Corpus, Gender, participant_year_of_birth) %>% #choose the variables of interest from vowels_all

distinct() %>% #make one row for each speaker

left_join(., gam_intercepts.tmp, by = "Speaker") %>% #combine the social information with the intercepts

left_join(., PC_speaker_loadings, by = "Speaker") #combine this with the PC loadings

#add the PCA scores from PC1, PC2 and PC3

vowels_all <- vowels_all %>%

left_join(PC_speaker_loadings[, c("Speaker", "Comp.1", "Comp.2", "Comp.3")])

PC_variable_loadings <- data.frame(intercepts.pca$loadings[,1:3]) %>%

mutate(name = row.names(.),

Formant = substr(name, 1, 2), #add extra variables

Vowel = substr(name, 4, max(nchar(name)))) %>%

rename(PC1_loading = 1,

PC2_loading = 2,

PC3_loading = 3)

#extract the PCA scores for the first 3 PCs from the PCA

PC_speaker_loadings1 <- as.data.frame(intercepts.pca$scores[,1:3]) %>% #the loadings are stored in the scores part of the intercepts.pca object

mutate(Speaker = gam_intercepts.tmp$Speaker) %>%

left_join(vowels_all %>% select(Speaker, participant_year_of_birth, Gender) %>% distinct())

PC = c(1:3)

gam_intercepts.tmp_long1 <- intercepts.pca$scores[,PC] %*% t(intercepts.pca$loadings[,PC]) %>%

data.frame() %>%

mutate(Speaker = gam_intercepts.tmp$Speaker) %>% #add speaker names

select(Speaker, 1:ncol(.)-1) %>% #reorder the variables

pivot_longer(F1_DRESS:F2_TRAP) %>% #make data long

left_join(gam_intercepts.tmp %>% pivot_longer(F1_DRESS:F2_TRAP, values_to = "intercepts")) %>% #add the original intercepts

mutate(Formant = substr(name, 1, 2), #add extra variables

Vowel = substr(name, 4, max(nchar(name))),

mod = paste0(Formant, "_intercepts")) %>%

left_join(PC_speaker_loadings %>% select(Speaker:participant_year_of_birth, Gender, Comp.1, Comp.2, Comp.3)) %>%

left_join(mod_pred_yob_values %>% select(participant_year_of_birth, fit, ll, ul, Vowel, Formant)) %>%

left_join(PC_variable_loadings) %>%

left_join(PC_variable_loadings %>% group_by(Vowel) %>% summarise(PC1_loading_max = max(abs(PC1_loading)), PC2_loading_max = max(abs(PC2_loading)), PC3_loading_max = max(abs(PC3_loading)))) %>%

mutate(intercepts1 = intercepts + fit,

Formant = factor(Formant, levels = c("F2", "F1"), ordered = TRUE))

gam_intercepts.tmp_long1_test <- gam_intercepts.tmp_long1 %>%

pivot_longer(Comp.1:Comp.3, names_to = "Comp", values_to = "Comp_score") %>%

left_join(., rbind(PC1_contrib %>% ungroup() %>% select(Variable, PC, highlight),

PC2_contrib %>% ungroup() %>% select(Variable, PC, highlight),

PC3_contrib %>% ungroup() %>% select(Variable, PC, highlight)) %>%

rename(name = Variable,

Comp = PC) %>%

mutate(Comp = gsub(x = Comp, "Loading.PC", "Comp.")))

ggplot(data = gam_intercepts.tmp_long1_test, aes(x = -Comp_score, y = intercepts, colour = Comp)) +

geom_point(size = 0.5, alpha = 0.1, colour = "black") +

# geom_point(aes(x = Comp_score, y = intercepts1), colour = "red", size = 0.2, alpha = 0.1) +

geom_smooth(method = "lm") +

# geom_smooth(aes(x = intercepts, y = intercepts1), colour = "red", method = "lm") +

geom_abline(slope = 0, linetype = 2) +

geom_rect(data = gam_intercepts.tmp_long1_test %>% filter(highlight == "black"), aes(xmin = -7, xmax = 7, ymin = -1.2, ymax = 1.2), alpha = 0, colour = "yellow") +

# scale_colour_viridis_c() +

xlab("PC score") +

facet_grid(Comp+Formant~Vowel) +

theme_bw() +

theme(legend.position = "none")

Regression models for intercepts ~ PC score

As the above plot is just a visualisation, we want to now run the regressions.

For each PC, we will run a regression for each vowel-formant, where we predict the speaker intercept by the speaker PC scores. We are not necessarily interested in the p values of these models as they are mainly for visualisation of the relationship.

mod_pred_PC1_values <- data.frame(intercepts = numeric(),

Comp.1 = numeric(),

residuals = numeric(),

fitted.values = numeric(),

residuals1 = numeric(),

fitted.values1 = numeric(),

mod = character())

for (i in levels(factor(gam_intercepts.tmp_long1$name))) {

lm1 <- lm(intercepts ~ Comp.1, data = gam_intercepts.tmp_long1, subset = name == i)

lm1_values <- cbind(lm1[["model"]], lm1[["residuals"]], lm1[["fitted.values"]]) %>%

rename(residuals = 3, fitted.values = 4) %>%

mutate(diff = residuals + fitted.values,

mod = i,

Formant = substr(i, 1, 2),

Vowel = substr(i, 4, nchar(i)))

lm2 <- lm(intercepts1 ~ Comp.1, data = gam_intercepts.tmp_long1, subset = name == i)

lm2_values <- cbind(lm2[["model"]], lm2[["residuals"]], lm2[["fitted.values"]]) %>%

rename(residuals1 = 3, fitted.values1 = 4) %>%

mutate(diff1 = residuals1 + fitted.values1)

lm1_values <- lm1_values %>%

left_join(lm2_values)

mod_pred_PC1_values <<- rbind(mod_pred_PC1_values, lm1_values)

}

mod_pred_PC1_values_plot <- mod_pred_PC1_values %>%

select(Vowel, Formant, Comp.1, intercepts, fitted.values, intercepts1, fitted.values1) %>%

pivot_wider(names_from = Formant, values_from = c(intercepts, fitted.values, intercepts1, fitted.values1)) %>%

group_by(Vowel) %>%

mutate(id = 1:481) %>%

left_join(gam_intercepts.tmp_long1 %>%

select(Speaker, Vowel, Formant, PC1_loading, PC1_loading_max, fit, intercepts, intercepts1, participant_year_of_birth) %>%

pivot_wider(names_from = Formant, values_from = c(fit, intercepts, intercepts1, PC1_loading)) %>%

distinct())

mod_pred_PC2_values <- data.frame(intercepts = numeric(),

Comp.2 = numeric(),

residuals = numeric(),

fitted.values = numeric(),

residuals1 = numeric(),

fitted.values1 = numeric(),

mod = character())

for (i in levels(factor(gam_intercepts.tmp_long1$name))) {

lm1 <- lm(intercepts ~ Comp.2, data = gam_intercepts.tmp_long1, subset = name == i)

lm1_values <- cbind(lm1[["model"]], lm1[["residuals"]], lm1[["fitted.values"]]) %>%

rename(residuals = 3, fitted.values = 4) %>%

mutate(diff = residuals + fitted.values,

mod = i,

Formant = substr(i, 1, 2),

Vowel = substr(i, 4, nchar(i)))

lm2 <- lm(intercepts1 ~ Comp.2, data = gam_intercepts.tmp_long1, subset = name == i)

lm2_values <- cbind(lm2[["model"]], lm2[["residuals"]], lm2[["fitted.values"]]) %>%

rename(residuals1 = 3, fitted.values1 = 4) %>%

mutate(diff1 = residuals1 + fitted.values1)

lm1_values <- lm1_values %>%

left_join(lm2_values)

mod_pred_PC2_values <<- rbind(mod_pred_PC2_values, lm1_values)

}

mod_pred_PC2_values_plot <- mod_pred_PC2_values %>%

select(Vowel, Formant, Comp.2, intercepts, fitted.values, intercepts1, fitted.values1) %>%

pivot_wider(names_from = Formant, values_from = c(intercepts, fitted.values, intercepts1, fitted.values1)) %>%

group_by(Vowel) %>%

mutate(id = 1:481) %>%

left_join(gam_intercepts.tmp_long1 %>%

select(Speaker, Vowel, Formant, PC2_loading, PC2_loading_max, fit, intercepts, intercepts1, participant_year_of_birth) %>%

pivot_wider(names_from = Formant, values_from = c(fit, intercepts, intercepts1, PC2_loading)) %>%

distinct())

mod_pred_PC3_values <- data.frame(intercepts = numeric(),

Comp.3 = numeric(),

residuals = numeric(),

fitted.values = numeric(),

residuals1 = numeric(),

fitted.values1 = numeric(),